By Stephen Kaufman, Chief Architect, Microsoft

In a recent McKinsey whitepaper on “What matters most: Eight priorities for CEOs in 2024,” the authors called out Gen AI as #1, calling it “Technology’s generational moment”. SAG-AFTRA, a labor union whose members include some of the most famous faces in the entertainment industry went on strike for almost 4 months in 2023 over the disruptive role of AI in the film and television industry, it’s first in over 40 years. Open AI and Sam Altman grabbed headlines for days at the end of 2023 and every boardroom and every corporate leader’s priority became understanding, deploying and derisking Generative AI. What is probably not known as widely is that while Machine Learning and Artificial intelligence have been around for quite some time, we are currently in the early stages of Generative AI.

Artificial intelligence (AI) is clearly transforming various domains of human activities, from healthcare to entertainment, from education to finance. Businesses are still learning the full potential of AI technologies. They don’t want to wait or can’t wait because of the competitive advantages that are offered. Many expect their Go-To-Market models to be changed forever. Trouble shooting, customer service, business analytics and many other areas are expected to benefit immensely and corporations that can harness the competitive advantage can gain material value. However, along with the benefits and advantages, AI also poses some risks that need to be addressed and mitigated. In this article, we will discuss some of the major AI risks and ways to reduce or eliminate those risks.

In working with AI, we should be helping executives in the companies we are working with to understand these risks and also the potential applications and innovations that can come from Generative AI. That is why it is essential that we take a moment now to develop a strategy for dealing with Generative AI. By developing a strategy, you will be well positioned to reap the benefits from the capabilities, and will be giving your organization a head-start in managing the risks.

When looking at the risks, companies can feel overwhelmed or decide that it represents more trouble than they are willing to accept and may take the stance of banning GenAI. Banning GenAI is not the answer, and will only lead to a bypassing of controls and more shadow IT. So, in the end, they will use the technology but won’t tell you.

So, let’s look at the risks and take a proactive approach. The goal is the same across all industries, no matter where the regulations may differ, the benefits of AI can be realized while minimizing the risks it presents to business and society.

AI risks can be broadly categorized into three types: Technical, Ethical, and Social. Technical risks refer to the potential failures or errors of AI systems, such as bugs, hacking, or adversarial attacks. Ethical risks refer to the moral dilemmas or conflicts that arise from the use or misuse of AI, such as bias, discrimination, or privacy violations. Social risks refer to the impacts of AI on human society and culture, such as unemployment, inequality, or social unrest.

In looking at these categories, you should review your current risk management activities and determine if these are already included or if existing activities need to be amended. As new risks are being introduced, existing risks are being amplified, making it necessary to update current mitigation strategies.

In this article we are going to focus on the following eight most talked about risks:

- Data privacy and confidentiality

- GenAI Provider Breach

- Data Leakage

- Prompt Injection

- Inadequate sandboxing

- Hallucinations (ungrounded outputs and errors)

- DDOS attacks

- Copyright Violations

Privacy and confidentiality: This is the biggest concern when it comes to Generative AI. When you start using this technology, it can (and usually does) result in the processing of sensitive information, like intellectual property, source code, trade secrets and other data through direct user input. As we create a policy, the policy needs to be similar in flavor to the policies of general cloud computing. You need to keep in mind data residency laws, GDPR, HIPAA, PCI, DSS and more. Data sent to these models are considered third party interactions and must have risk mitigations in place. You must understand whether your data will be used to train the AI model or used for testing and determine whether there is an opt out feature. Otherwise, you need to make sure that you are sanitizing any data that is sent (sanitize source code, trade secrets, customer information, etc.) and make sure the users and developers know and understand this. If the third-party model provider keeps any data collected, you must understand how long they will retain that data, and what safeguards they are providing. As you create your policy, keep in mind that the best control is user awareness. Create rules for what is allowed and what isn’t allowed (rules around data leakage, cloud access, or data that isn’t anonymized).

Provider Data breach: This risk is related to the previous, but there is an additional need to understand the process a provider takes in the case of a provider’s breach. In creating your strategy, you need to identify what happens when sensitive information such as customer data, financial information, and proprietary business information is leaked. These platforms are still relatively new, and their security experience and posture may not be as mature. If an event occurs, determine how you will mitigate any fall out. Ensure that you have a security framework and policy around what data can and cannot be used with Large Language Models (LLM’s). Include requirements around anonymizing data or using cloud access security brokers (CASB) as a mediator (Data classification or DLP). If you are using a security broker, additional education will be required for the developers, so they know not to use the direct API calls of the LLM. You will also need to Include threat modeling and extend your existing third-party security checklist to include Generative AI. The SEC now has reporting requirements that companies must report a security breach in 24 hours. Many companies don’t know that they have been breached in that timeframe. – SEC.gov | SEC Adopts Rules on Cybersecurity Risk Management, Strategy, Governance, and Incident Disclosure by Public Companies

Data leakage: This risk goes beyond cloud data leakage such as a misconfigured storage account, but for LLMs, this can happen when sensitive information or confidential data is revealed. This can occur not directly through the LLM but when you create your own chat bots or use your own data.

When creating this policy, understand that there is intentional and unintentional leakage. Unintentional leakage occurs when a user asks a question and sends a prompt to the LLM, without realizing that it could potentially reveal sensitive information. If the proper controls aren’t in place such as input/output filtering or user-based security, the model may generate a response that includes confidential data. Intentional leakage is about data exfiltration. Through prompts or prompt injection one could exploit the LLM capabilities to retrieve specific confidential data. Controls must be in place for prompt injection. This includes monitoring and logging, collection of the prompt and response messages, filtering output, and prompt validation and security monitoring. In addition, you will need to incorporate alerts for malicious inputs.

Prompt Injection: This is a new type of attack and risk, and security professionals need to be aware of this. In this situation, the attacker manipulates the unique way LLMs work by maliciously creating/changing the input so they can bypass the expected AI behavior. Prompt injection vulnerabilities refers to the act of bypassing any filters and manipulating the prompt. By crafting malicious prompts one can cause the LLM to either ignore instruction, which are already present, or execute unintended actions. As you create your policy, you need to have guard rails in place when interacting with the LLM. Use safety meta prompts (system prompts) to augment the prompt, in order to provide instructions such as don’t do X or Y. Incorporate monitoring and use filters such as your provider’s content safety services. Also include monitoring that collects the message body of the prompt and response messages for future review. Ensure the LLM is properly sandboxed, has the proper permissions, and most importantly always make sure that you have a human reviewing and validating the output.

Inadequate sandboxing: This refers to the LLM not being property isolated from it surrounding systems. If the LLM lacks proper isolation when it interacts with external resources or sensitive systems, it creates a potential risk for exploitation, unauthorized access, and an opportunity for prompt injection attacks. Ensure that your policy specifies that the LLM environment must be separated from critical systems or data stores. Put in proper restriction on LLM access and ensure that there is never system level access to other systems. Use the principle of least privilege and conduct regular audits and reviews. Check access controls to verify solutions are being maintained over time. Add security testing and always put in logging and monitoring.

Hallucinations: This is a unique risk to LLMs. Hallucinations are the incorrect or inaccurate responses from the LLM but presented as fact. The risk is the overdependence on LLM generated content. You should never blindly trust the generated content without verification. You need a human reviewer. While LLMs are trained on vast amounts of information which helps the LLM generate the output, there isn’t anything verified as part of the output response. That work must be done by people reviewing the response. When people use the response without verification it can damage reputations, cause misleading or false facts, erode people’s trust, and aid in confusion.

There should be policies in place to promote the practice of verifying and validating everything returned from an LLM. You should also include rules around transparency, ensuring that people know content was machine generated. Lastly, ensure, and audit, that the configuration settings of the LLM are set correctly and the temperature setting is correct for the type of output required (creative vs formal).

DDOS (Distributed Denial of Service) attacks: This is an existing attack which is known to security and risk professionals. However, this where existing policies need to be extended and reviewed. A DDOS attack is where an attacker tries to compromise the availability of the system. The attacker will try flooding the system with requests or other possible ways to make the system unavailable. As you already know, AI systems are increasingly being integrated with business applications which means their criticality is ever increasing. The attacker wins by blackmailing the company or by hitting the service with constant traffic, which overwhelms the service and costs the company money in lost revenue or disruptions. For mitigations, find out what sort of protection or detection the LLM provider has. But more essential, is what you have built into your systems monitoring to shut down traffic. You need to create a threat model of the GenAI system and identify what security controls are in place. Determine what testing is in place for detecting DDOS attacks, and how the application or system will respond.

Copyright Violations: This is not directly a security issue, but it can have serious repercussions and risks. You need to put in proper controls to mitigate violations from the start. With all of the generative capabilities provided, either text or images, you need to ensure that using the output doesn’t potentially open you up to claims of copyright infringement or an inability to copyright generated content. The images from LLMs aren’t’ copyrightable since you aren’t guaranteed uniqueness, nor can they directly be copyrighted as yours. Proper controls need to be put in place to evaluate what is being produced by the system as well as an understanding of how the output will be used. Unfortunately, there no definitive case law in this area to provide clear guidance for legal policies. Policies should be developed according to current intellectual property principles.

Now that we have talked about the risks and mitigations, let’s talk about creating an AI policy for your organization.

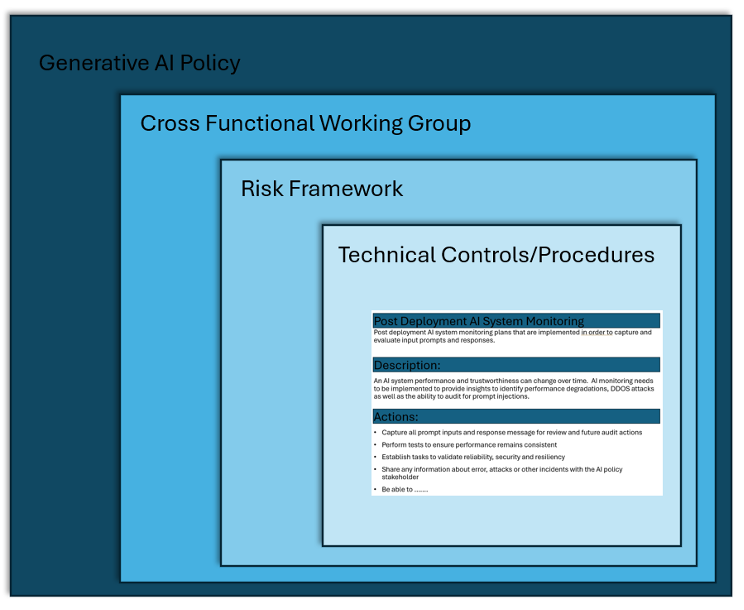

When writing your AI policies, build a framework hierarchy consisting of a GenAI policy, then form a GenAI working group that creates the GenAI risk framework, which will then contain GenAI technical controls.

Start with a Generative AI policy that is approved by the highest stakeholders, the board or CEO. It sets the tone for how AI will be used and controlled within your company. Then create a cross functional working group with multiple stakeholders that will oversee AI risks and outline the go/no-go decision as well as the do’s and don’ts. This then helps create a risk framework that identifies the risks and how they are tracked. This will determine the key activities that occurred and what controls should be in place to mitigate the risks. Finally, you will define the technical controls that will be implemented to mitigate the existing and unique risks.

Exhibit 1: Framework

Don’t make the mistake of jumping directly into technical controls. The first step is the policy. You need to have a Gen AI policy, as this sets the tone for how GenAI will be controlled in the organization. As you build your risk framework, make sure that you have an inventory of all the places where Gen AI is currently utilized. This will help ensure you have covered all the scenarios.

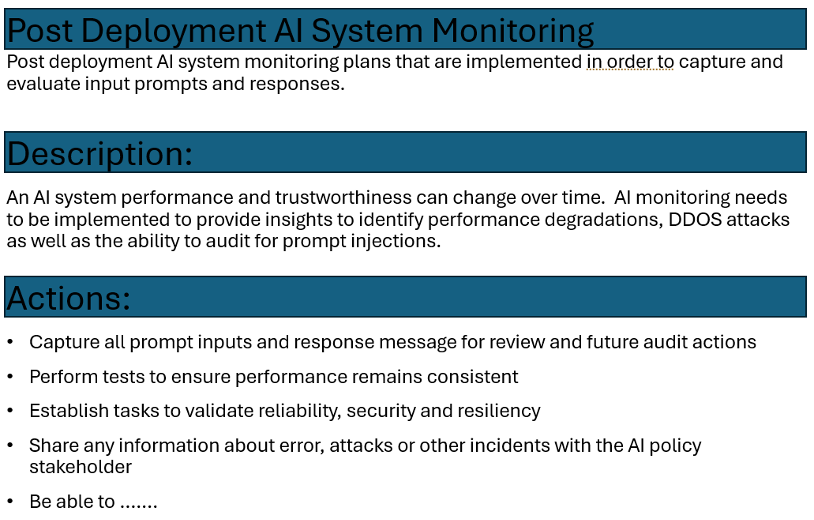

Build your entries by adding a separate entry for each category. If there was one for monitoring, it would have a name and description, a reason, and suggested actions. The following is an example of a policy:

Exhibit 2: AI procedure.

AI is a powerful and promising technology, but it also poses some risks that need to be addressed and mitigated. Regulators are scrambling as fast as they can to understand, control and ensure the safety of the technology. Regulators for every company are trying to define the controls that should be in place to ensure safe operations. We as architects are in a perfect position to help create and shape the controls being created. In this article, we have discussed some of the major AI risks and their possible mitigations. We have also discussed building out the Gen AI framework. We need to make sure that, as architects, we are designing systems that are beneficial to the business while keeping their operations safe. Hopefully this article can help as you design, develop, and deploy AI systems that are not only efficient and effective, but also safe, ethical, and responsible.

Stephen Kaufman serves as Chief Architect in the Microsoft Customer Success Unit Team focusing on AI and Cloud Computing. He brings 30 years of experience across some of our largest enterprise customer helping them understand and utilize AI ranging from initial concepts to specific application architectures, design, development and delivery.

He is a public speaker and has appeared at a variety of industry conferences nationally and internationally (both public as well as internal Microsoft conferences) over the years discussing application development, integration and cloud computing strategies as well as a variety of other related topics. Stephen is also a published author with three books as well as a number of whitepapers and other published content.

In addition, he is a board certified Distinguished Architect (CITA-D – IASA Global) and continues to work with IASA mentoring and creating content.