In part one, we explored how the rise of GenAI has thrust Quality Engineering (QE) into an existential spotlight. Far from fading away, QE is evolving moving from executing test scripts to ensuring trust, compliance, and ethical integrity in AI-driven systems. History reminds us that every technological leap from mainframes to mobile has not diminished QE but expanded its frontiers. The GenAI era is no different: it simplifies some tasks while introducing entirely new dimensions of quality, demanding sharper judgment, contextual intelligence, and adaptive skillsets.

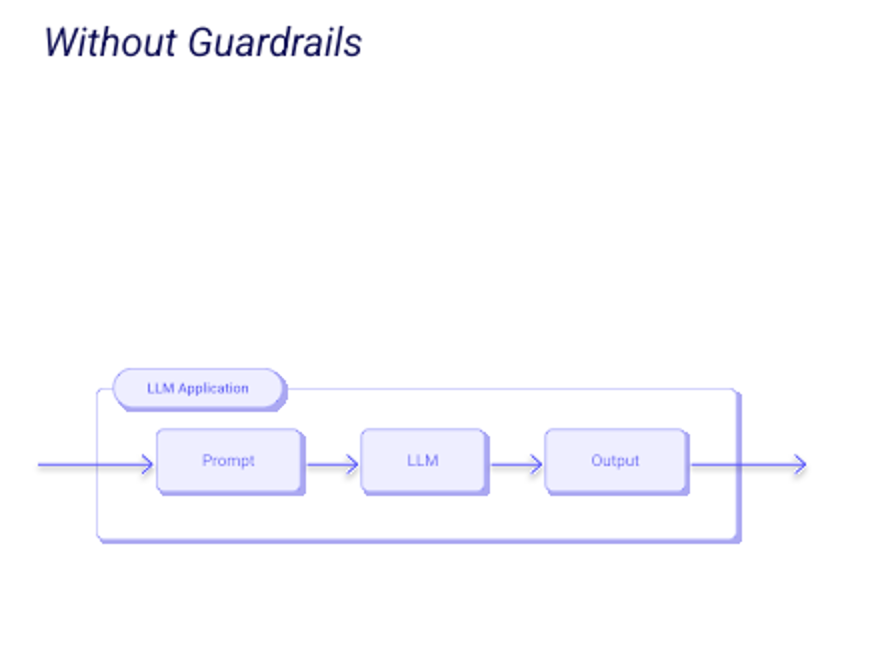

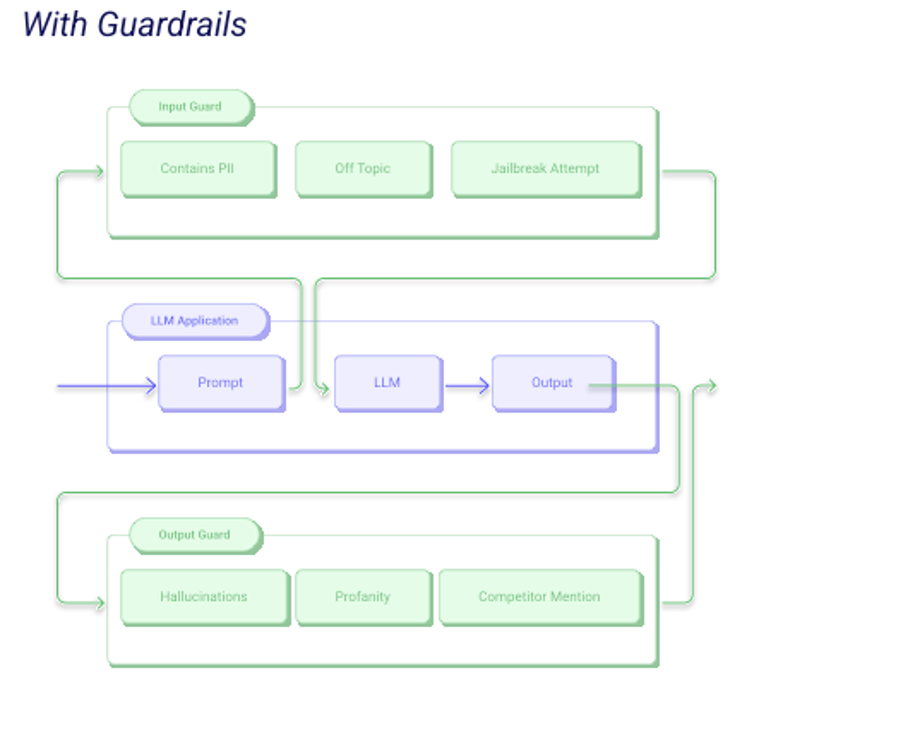

In today edition we are going to discuss on three such dimensions of AI testing. Let’s begin with one of the newest and most critical frontiers for Quality Engineering Guardrail Testing. In the world of GenAI, the accuracy of an LLM is only as good as the boundaries we define around it. When the inputs and outputs of these models aren’t properly curated or controlled, the results can swing from unpredictable to downright dangerous.

Consider this real-world example: a job applicant, aware that the first level of screening was handled by an AI system, cleverly embedded a hidden prompt in his résumé instructing the model to rate his profile as the best fit for the role. The system obediently complied, unknowingly tricked by a simple case of prompt injection. Now, imagine that same vulnerability in a financial ecosystem managing multi-million-dollar transactions. A single malicious or manipulative input could distort decisions, trigger false alerts, or worse authorize actions that breach compliance and trust.

And the risk doesn’t stop at inputs. What if your model’s output suddenly declares your competitor as superior, or worse, includes profane or biased language that slips unchecked into a customer-facing campaign? That’s not just a system failure that’s reputational self-destruction.

This is where Guardrail Architecture steps in acting as the security fence around LLMs, validating and filtering inputs and outputs across multiple dimensions such as PII/PHI exposure, toxicity, hallucination, and factual consistency. But here’s the key: before these guardrails can be trusted, they themselves need to be tested rigorously, repeatedly, and responsibly.

And who better to own that mission than the Quality Engineering community? After all, QE professionals have spent decades mastering the art of testing complex systems for reliability, performance, and compliance. Now, that expertise is being called upon again this time, to safeguard the very intelligence that powers the future.

Other new dimension of AI testing is Drift Detection. Naturally, every model is trained on a dataset built on a set of underlying assumptions about user behaviour, data distribution, or environmental context. The model performs well as long as those assumptions hold true. But what happens when the ground shifts beneath its feet?

Assume you’ve built a credit-risk prediction model for a bank. It was trained on historical data where interest rates were stable, spending habits were predictable, and loan repayment patterns followed a steady trend. Suddenly, a global event say, a spike in inflation or a mass layoff changes how customers spend, save, and borrow. The model continues to score applicants based on yesterday’s behaviour, unaware that the world has changed today. That’s data drift in action when input patterns evolve, but the model’s understanding doesn’t.

Or consider a healthcare AI trained on patient data from one region, suddenly being deployed in another where genetic, dietary, or environmental factors differ. Its predictions start missing the mark, not because the algorithm is bad, but because reality has drifted away from its original learning base.

For QE professionals, this introduces a new accountability: continuously testing the freshness and relevance of a model’s predictions, much like how we once monitored regression in traditional systems. Drift detection isn’t just about catching accuracy loss it’s about ensuring that AI-driven decisions stay aligned with the evolving truth of the data they serve.

Another emerging dimension of AI testing is Eval Testing the process of systematically assessing how well a model performs against a defined benchmark. Think of it as the quality gate that decides whether a model is truly ready for prime time. Traditional systems had unit tests and regression suites; in the AI world, evals play that role measuring not just what the model outputs, but how good and how trustworthy those outputs are.

Assume you’re validating an AI assistant trained to answer customer queries for an insurance firm. The model may respond with grammatically perfect answers, but Eval Testing digs deeper. Does it provide factually correct information? Does it maintain tone consistency? Does it reveal any confidential data or hallucinate responses that sound confident but are false? These evaluations ensure the model meets standards of accuracy, safety, and compliance before it ever interacts with a real user.

Or consider a generative model that drafts marketing emails. It might produce engaging copy, but without proper evals, you may never notice subtle issues a biased tone toward certain customer groups, over-promising claims, or a lack of brand alignment. Each of these is a quality defect, not unlike a broken function in traditional systems, just hidden under layers of language.

For QE professionals, Eval Testing represents a natural evolution of our craft from verifying functionality to validating intelligence. It’s where test cases become evaluation datasets, and assertions become metrics of truth, coherence, and ethics. In this new era, QE isn’t just ensuring systems work; we’re ensuring systems behave responsibly.

Together, these three layers redefine what “quality” means in the age of intelligent systems. No longer confined to finding bugs in code, QE now stands at the intersection of ethics, intelligence, and assurance. The task is no longer to ask, “Does it work?” but rather, “Can it be trusted?”

The AI era hasn’t diminished the role of Quality Engineering; it has expanded its horizon. As GenAI continues to permeate every business function, QE professionals aren’t stepping aside they’re stepping up, evolving from testers of functionality to guardians of AI integrity.

Shammy Narayanan is the Vice President of Platform, Data, and AI at Welldoc. Holding 11 cloud certifications, he combines deep technical expertise with a strong passion for artificial intelligence. With over two decades of experience, he focuses on helping organizations navigate the evolving AI landscape. He can be reached at shammy45@gmail.com