By James Wilt, Distinguished Architect

Generative Artificial Intelligence (GenAI) has certainly taken the world by storm! We are currently in that bleeding-edge phase where, as the technology matures, tremendous results are accompanied by spectacular errors. I have created a form of comic book from numerous screen captures of GenAI response failures.

Let’s first understand the types of errors and why they exist, and then follow up with a sure-fire approach to safely adopt these exciting technologies in a manner that mitigates the errors they may inadvertently throw our way!

Background

GenAI models, like large language models (LLMs), sometimes produce responses that are factually incorrect, logically inconsistent, or completely unrelated to the given input. These are called hallucinations. They can manifest in various forms, such as images, text, or audio, and they often exhibit surreal or bizarre characteristics that are not grounded in reality.

There are several reasons why GenAI models produce hallucinatory results:

- Lack of Contextual Understanding: GenAI models like those based on deep learning architectures such as GANs (Generative Adversarial Networks) or VAEs (Variational Autoencoders) occasionally lack a comprehensive understanding of the context or constraints within which they are expected to operate, resulting in responses that do not conform to real-world norms or expectations.

- Training Data Limitations: GenAI models are trained on massive datasets, but these datasets can have gaps, biases, noise, anomalies or errors. The model may attempt to fill these gaps in unexpected ways. Related problems, such as overfitting or memorization of the training data, can lead to hallucinatory patterns or anomalies in the generated results.

- Overgeneralization: GenAI models try to identify patterns in data. Sometimes, they apply those patterns too broadly, creating new results that seem plausible but are inaccurate.

- Complexity of the Model Architecture: GenAI models with complex architectures or large numbers of parameters may generate hallucinatory outputs due to their inherent complexity. As models become more intricate, they may develop non-linear relationships between input and response, leading to the emergence of unexpected patterns or artifacts in their generated results.

Most GenAI models exhibit some degree of demographic, gender, racial, cultural and other biases. These biases can manifest in various forms, including but not limited to stereotypes, prejudices, and inequalities, and can occasionally perpetuate existing societal biases. In addition to the reasons listed above for hallucinations, bias typically results from:

- Biased Training Data: When the datasets used to train GenAI models contain biased or unrepresentative samples, the model will internalize and replicate those biases in its generated results. These biases can arise from historical inequalities, societal prejudices, or systemic discrimination also present in the data collection process.

- Algorithmic & Deployment Bias: The architecture, methodology or objectives of the AI model itself and how it is deployed may introduce bias. Yes, even Conway’s Law can be a source of bias! Developers can unknowingly introduce bias through their choices and assumptions when designing GenAI models.

- Feedback Loops and Amplification: Biases present in generated results can reinforce and amplify existing biases in society through feedback loops, where biased generated content is disseminated widely and then finds its way back into model training.

There are occasional instances where GenAI responds with absolutely nothing. When these blind-spots occur, the model simply gives up and states it doesn’t have a response for the given prompt. The following are reasons this may happen:

- Incomplete Training Data: GenAI results are limited to the data the models are trained on. The models will struggle to respond to events that occur after they were trained and will have no basis for items completely missing from their training data.

- Vague Prompts: Terse or ambiguous prompts make it hard for the model to ascertain how it should respond. The more specific and complete the prompt, the better the model can respond.

Example

Here is an anonymized example from my GenAI comic book Blooper reel that exhibits all three. I used four GenAI models to get information on a particular vendor’s product. One of the models is from the vendor whose product I was investigating.

Prompt: Vendor product?

To protect all vendors and products in this example, I’ve replaced the actual vendor & product with the words, Vendor and what I recalled the product name was. My name for the product was incomplete, missing one word.

If my example was Candy and not Technology, it would be like asking: Reese’s Peanut Cups? I would have mistakenly left out “Butter” so the proper prompt should be: Reese’s Peanut Butter Cups? You’ll see what this mistake did…

And, yes, this was a terse prompt!

Model A Response: (bias leaned toward its corrected vendor full-product-name)

Vendor product, officially known as “full-product-name,” is an AI-first business application developed by Vendor. This innovative project aims to assist users in handling complex, cross-functional tasks, offering a new approach to discover, visualize, and interact with business data. Full-product-name is characterized by its AI-powered, user-friendly research canvas and large language model-powered blueprints.

The research canvas facilitates the creation of exploratory journeys, allowing users to understand business data through AI-generated charts and insights without the need for manual data mining and visualization. It quickly provides insights and recommends next steps, enabling users to start with a question and explore different options, iterating until they achieve the best possible outcome.

Notably, full-product-name is currently available only in the United States.

Model B Response: (while it attempted to correct vendor full-product-name, it also identified that it might not be able to respond, self-identifying a blind-spot)

When you say “Vendor product,” it could refer to two different things:

- Vendor full-product-name: This is a new generation of AI-first business application recently announced by Vendor in November 2023. It’s designed to help business users tackle complex, cross-domain problems by interacting with data in new ways and generating insights. You can learn more about it and try a preview version on their website.

- A future project under development: It’s important to note that Vendor might be working on other projects with the name “product” in the future. As of today, February 13, 2024, I haven’t found any credible information about other projects bearing this name besides the recently announced business application.

Which “Vendor product” were you interested in learning more about? I can provide you with more specific information if you can clarify which one you have in mind.

Model C Response: (hallucination as it went off-script ignoring the Vendor in the prompt)

Product is an AI robot created by a-different-Vendor. I’m Model-C, created by Model-C Vendor.

Model D (Vendor) Response: (Clearly a blind-spot!)

I apologize, but I am not familiar with a Vendor product. If you have any other questions or need assistance, feel free to ask!

Second Prompt: Vendor full-product-name?

Vendor full-product-name is an AI-powered business research canvas designed to help users solve complex, cross-domain business problems. It enables users to discover, visualize, and interact with data in new ways, optimizing business processes and answering strategic questions that drive better outcomes.

If you’re interested, you can explore full-product-name and try it out in preview mode.

So, What’s a User to do?

Let’s face it, hallucinations, bias, and blind-spots are going to be a significant force for some time. If you’re building models, you’re already applying some best practices to mitigate issues already covered. For the rest of us, there are a few things we should be doing:

Always Leverage Multiple Models: Yes, it is more expensive this way; however, your overall fidelity increases significantly with multiple models. In a similar fashion, tweak parameters like temperature and top-p to evaluate differences in those responses. In most cases, one model/configuration rises above the others, but the others will still serve to strengthen and enhance your overall synthesis.
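To make the idea concrete, here is a minimal sketch of how responses from several models could be compared side by side. It assumes each model (or each temperature/top-p configuration of the same model) is wrapped in a plain Python function that takes a prompt and returns text; the names `compare_models`, `token_overlap`, and the stub models are hypothetical, and the word-overlap score is only a crude stand-in for a proper semantic comparison.

```python
from itertools import combinations
from typing import Callable, Dict, List, Tuple


def token_overlap(a: str, b: str) -> float:
    """Jaccard similarity of lowercase word sets (a crude agreement measure)."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a and not words_b:
        return 1.0
    return len(words_a & words_b) / len(words_a | words_b)


def compare_models(
    prompt: str,
    models: Dict[str, Callable[[str], str]],
    threshold: float = 0.2,
) -> Tuple[Dict[str, str], Dict[str, float], List[str]]:
    """Ask every model the same prompt and flag responses that disagree with the rest."""
    responses = {name: ask(prompt) for name, ask in models.items()}

    # Collect each model's pairwise agreement with every other model.
    scores: Dict[str, List[float]] = {name: [] for name in responses}
    for a, b in combinations(responses, 2):
        score = token_overlap(responses[a], responses[b])
        scores[a].append(score)
        scores[b].append(score)

    mean_agreement = {
        name: (sum(vals) / len(vals) if vals else 1.0)
        for name, vals in scores.items()
    }
    # Low agreement is a hint to look closer, not proof of a hallucination.
    outliers = sorted(n for n, m in mean_agreement.items() if m < threshold)
    return responses, mean_agreement, outliers


# Hypothetical stand-ins for real model calls, loosely echoing the example above.
stub_models: Dict[str, Callable[[str], str]] = {
    "model_a": lambda p: "Vendor product is an AI-first business application",
    "model_b": lambda p: "Vendor product is a new AI-first business application",
    "model_c": lambda p: "Product is a robot created by someone else",
    "model_d": lambda p: "I am not familiar with that",
}

_, agreement, flagged = compare_models("Vendor product?", stub_models)
print(agreement)
print("Review these first:", flagged)
```

A human still makes the final call; the score only tells you which responses deserve a closer look first.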

One cost-conscious way to move forward is to fully understand the cost and strengths of each model and select which to use based on the accuracy needed for a given prompt. This can become subjective, however.
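As a rough illustration of that selection, the sketch below picks the cheapest model that meets the accuracy a prompt needs. Everything here is an assumption for illustration: the `ModelProfile` fields, the model names, the costs, and the accuracy scores are made up, and in practice the accuracy figure would come from your own evaluations.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelProfile:
    name: str
    cost_per_1k_tokens: float  # hypothetical price, in whatever currency you track
    accuracy: float            # your own measured score between 0 and 1


def pick_model(profiles: List[ModelProfile], required_accuracy: float) -> ModelProfile:
    """Return the cheapest model that meets the required accuracy.

    If no model is accurate enough, fall back to the most accurate one.
    """
    if not profiles:
        raise ValueError("at least one model profile is required")
    eligible = [p for p in profiles if p.accuracy >= required_accuracy]
    if not eligible:
        return max(profiles, key=lambda p: p.accuracy)
    return min(eligible, key=lambda p: p.cost_per_1k_tokens)


# Made-up profiles for illustration only.
catalog = [
    ModelProfile("small", cost_per_1k_tokens=0.1, accuracy=0.70),
    ModelProfile("medium", cost_per_1k_tokens=0.5, accuracy=0.85),
    ModelProfile("large", cost_per_1k_tokens=2.0, accuracy=0.95),
]

print(pick_model(catalog, required_accuracy=0.8).name)
```

The subjective part is deciding the required accuracy per prompt; the code only makes that decision explicit.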

Use Complete, Specific Prompts: Vague and terse prompts are ambiguous and costly. Leverage these resources wisely. There is an “art” to the proper prompt that is discovered through much trial & error. Learn the best practices for your own individual needs. There are dozens of prompt engineering guidance courses to choose from.

Jump into the Technology Wisely: Early adopters need to be wise adopters. Early successes fuel the urge to “go public” or be “the first,” dangerously promoting unsupervised deployments too early. There is an art to early adoption that ensures necessary safety through wise adoption journeys (discussed below).

You, as the consumer of an emerging technology, are ultimately responsible and accountable for its responses. When a hallucination, bias, or blind-spot negatively impacts your reputation, that’s on you, not the technology.

A Sure-Fire Approach to Safely Adopt Emerging GenAI Technologies

As excitement increases from an over-hyped emerging technology, there will be a great divide between the zealots and ultra-conservatives. Neither extreme represents safety. Zealots can put an organization outside of its risk tolerances, and ultra-conservatives can stifle necessary adoption, pushing an organization into obsolescence.

Let’s examine a proven approach to appease both schools:

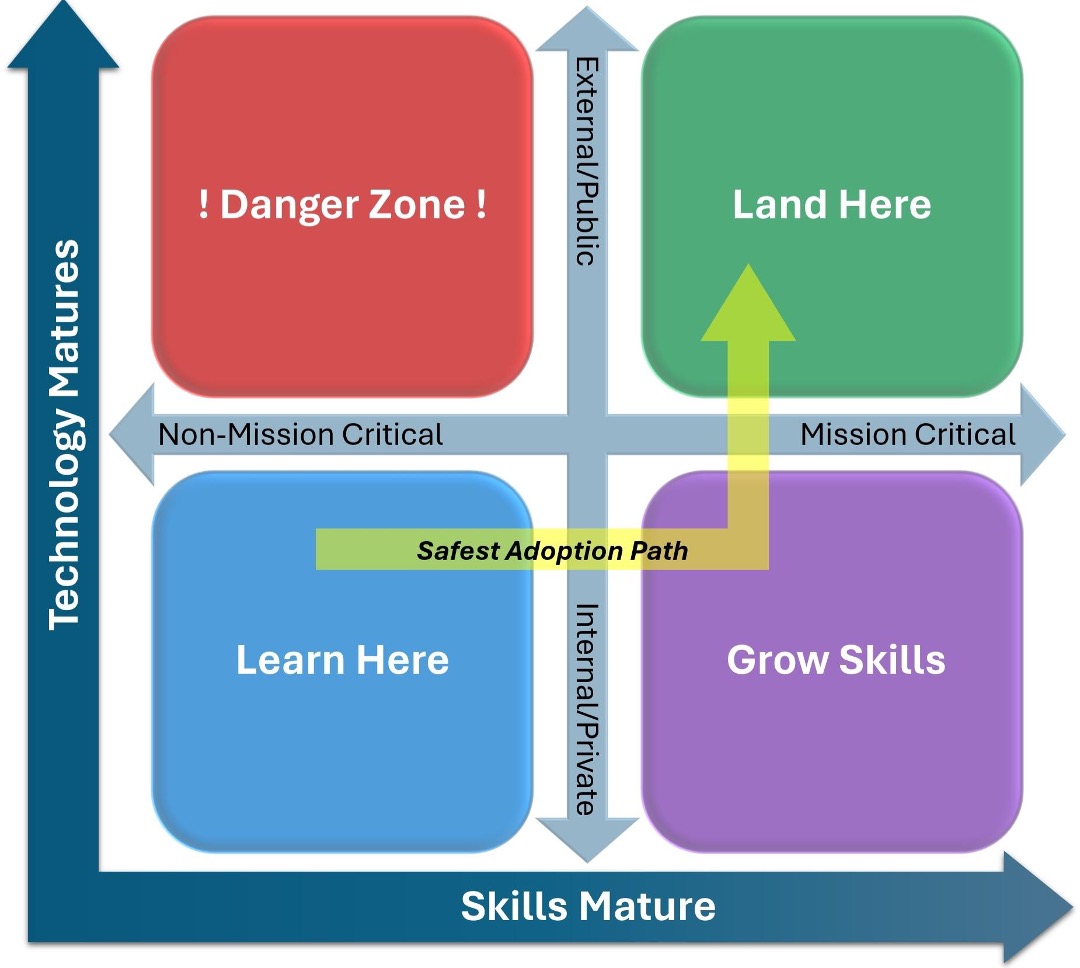

Learn Here: Creating a rich environment to experiment and literally, play, inside the safety net of benign and truly private yet unrestricted sandboxes is where tremendous growth occurs. Hallucinations, bias, and blind-spot tendencies for each GenAI model can be observed while learning when to best use each model and their respective costs.

Grow Skills: This is where real growth occurs. As you’re on the road to mastery, you must begin to apply your learnings to real-world situations. Start on the inside. Once you learn to fly a Cessna 185 Skywagon, you’re empowered! However, you’re not yet ready to tackle an Airbus A320!

- Leverage GenAI tools to explain and summarize internal systems.

- Feed code workspaces into GenAI to see how it can identify inefficiencies and suggest optimizations.

- Experiment with migrating systems into modern languages and platforms with the help of these technologies. By leveraging them inside your engineering domains, first, you will have the ability to ascertain where they are weak and strong first-hand.

- Start to summarize code and produce prose by automating documentation of your code at check-in, and review the results.

- As your engineering team’s skills advance, have them provide environments for the business to do the same (with appropriate bumpers).

- Start leveraging the safety bumpers built for the business early-adopters into internal production pilots to ensure they perform and scale.

In all cases, you must remain in control of what you leverage and to what extent.

Land Here: As skills mature, the technology will as well, and there will be a tipping point where your engineering teams will be ready to unleash their new capabilities into the realm of publicly visible production. This may take months to occur; however, it will occur with high confidence. The use of GenAI technologies will be adequately architected and engineered to a point where you ultimately remain in control of the exposure of their output, with hallucination, bias, and blind-spot mitigations well in place.

! Danger Zone ! Stay away from this quadrant at all costs! The theme (in case you’ve missed it) is to grow your teams’ skills to a point where you remain in control no matter what the new emerging GenAI technology throws your way. The temptation, however, is that when a new technology feature emerges, you want to be first to place it in production, as if there is some marketing reward for doing so. Don’t! It is too dangerous:

- Public facing chat-bots are continually making headlines for unmitigated responses that result in significant reputation damage. They too often are considered non-mission critical until a hallucination, bias, or blind-spot reveals they’ve always been mission critical – they’re public facing!

- GenAI vendors, in the race to bring new features to market before their competition, are continually entering this danger-zone themselves, having to pull back features that freely push out hallucinations and bias. Exposing their vulnerabilities means amplifying yours. When they are forced to pull those offending features back, they do so for your public exposure as well, imposing further reputational damage on your organization.

- Releasing any public facing feature, GenAI or not, before your team has appropriate skills introduces the high risk of Modern System Fragility, which is always damaging.

Top-down governance should be leveraged to prevent entry into the danger-zone, but in a manner that accelerates progress along the Safest Adoption Path.

Trust and Verify

Any new and emerging technology will have its rough edges, and as it matures, its reliability will increase. It is generally a best practice to monitor every result and response to gauge how far the technology has matured.

In early stages, frequent spot audits of responses by humans will help gauge progress. Follow up with automated streaming validations (perhaps with lower-cost and in-house GenAI models).
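One way to picture the combination of spot audits and automated validation is the small sketch below. It assumes responses arrive as plain strings and that you supply your own validator; `triage_responses` and `simple_validator` are hypothetical names, and the validator here is a trivial placeholder for what might really be a lower-cost or in-house model.

```python
import random
from typing import Callable, Iterable, List, Optional, Tuple


def simple_validator(text: str) -> bool:
    """Placeholder automated check: reject empty answers and obvious blind-spot replies."""
    return bool(text.strip()) and "not familiar" not in text.lower()


def triage_responses(
    responses: Iterable[str],
    validator: Callable[[str], bool],
    audit_rate: float = 0.1,
    rng: Optional[random.Random] = None,
) -> Tuple[List[Tuple[str, str]], List[str]]:
    """Split responses into those needing human review and those that pass.

    Every response that fails automated validation goes to a human, and a
    random sample of the rest (audit_rate) is pulled for a spot audit.
    """
    rng = rng or random.Random()
    needs_human: List[Tuple[str, str]] = []
    passed: List[str] = []
    for response in responses:
        if not validator(response):
            needs_human.append((response, "failed_validation"))
        elif rng.random() < audit_rate:
            needs_human.append((response, "spot_audit"))
        else:
            passed.append(response)
    return needs_human, passed


sample = [
    "Vendor full-product-name is an AI-powered research canvas.",
    "I am not familiar with a Vendor product.",
    "",
]
review_queue, released = triage_responses(sample, simple_validator, audit_rate=0.5)
print(len(review_queue), "for human review;", len(released), "released")
```

Starting with a high `audit_rate` and lowering it as confidence grows mirrors the progression from frequent human audits toward mostly automated validation.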

Remember – GenAI is a tool to inform & inspire others to make better decisions!