Large Language Models (LLMs) have become central to today’s AI revolution, enabling machines to generate human-like text, answer queries, and even perform complex reasoning. Yet, the magic behind these intelligent systems hinges on a critical process known as AI inference. Understanding how inference works, how it differs from approximate inference, and its place among other AI concepts is essential for professionals and enthusiasts seeking to build robust, reliable AI solutions.

Understanding Model Inference

Model inference is the phase where a trained AI model is deployed to make predictions or generate outputs based on new, unseen data. In simpler terms, inference is what happens after the model has learned from its training data—it is the act of “putting the model to work”. For LLMs, this means generating text, answering questions, or performing other language-based tasks.

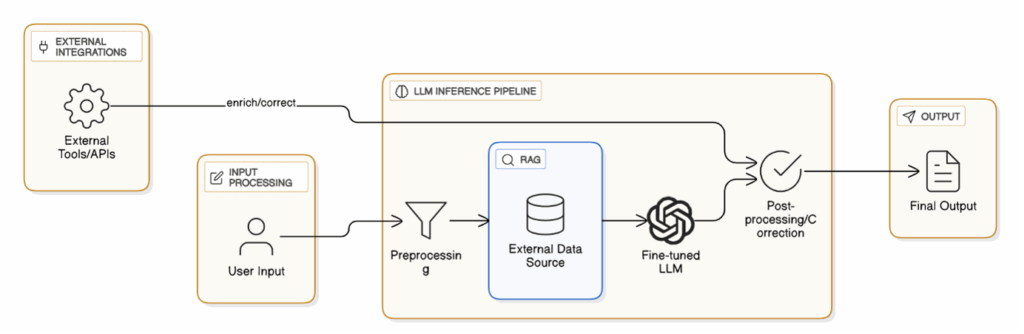

Figure: Process flow of Model inference

The process of inference typically involves feeding input data (such as a text prompt) into the model. The model then processes this input using its learned parameters and produces an output. This is the stage where a model's accuracy is put to the test, because it reveals how well the model generalises from its training data to real-world inputs. Efficient inference helps AI systems respond quickly and reliably, which is vital for applications ranging from chatbots to automated customer support.
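To make the prompt-in, output-out loop concrete, here is a minimal sketch of how an LLM-style model generates text one token at a time. The probability table `NEXT_TOKEN_PROBS` is a hypothetical stand-in for learned parameters (a real LLM computes these probabilities with a neural network over a large vocabulary), and greedy decoding, which always picks the most probable next token, is only one of several decoding strategies.

```python
# A minimal sketch of autoregressive inference with greedy decoding.
# NEXT_TOKEN_PROBS is a hypothetical, hand-written table standing in for
# a trained model's learned parameters: P(next token | current token).
NEXT_TOKEN_PROBS: dict[str, dict[str, float]] = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"bank": 0.5, "account": 0.3, "<end>": 0.2},
    "a": {"transaction": 0.7, "<end>": 0.3},
    "bank": {"is": 0.8, "<end>": 0.2},
    "is": {"open": 0.9, "<end>": 0.1},
    "open": {"<end>": 1.0},
    "account": {"<end>": 1.0},
    "transaction": {"<end>": 1.0},
}


def generate(prompt: list[str], max_new_tokens: int = 10) -> list[str]:
    """Greedy decoding: repeatedly append the most probable next token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        # Unknown tokens fall back to ending the sequence.
        probs = NEXT_TOKEN_PROBS.get(tokens[-1], {"<end>": 1.0})
        next_token = max(probs, key=probs.get)  # highest-probability token
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens


print(generate(["<start>"]))  # ['<start>', 'the', 'bank', 'is', 'open']
```

Each step feeds the model's own previous output back in as input, which is why generating a long response requires many successive inference steps.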

Approximate Inference

While standard model inference aims to compute the output exactly, approximate inference takes a different approach. When exact inference is computationally expensive or impractical, as with very large models or complex probabilistic structures, approximate inference methods offer a practical alternative. These techniques estimate the likely output without evaluating every possibility, saving time and resources.

Approximate inference is common in probabilistic models such as Bayesian networks, where exact calculations can be prohibitively expensive. Methods such as sampling, variational inference and loopy belief propagation make inference feasible at scale. This comes with trade-offs, however: approximate results may be less accurate than exact ones and can introduce additional uncertainty into the system.
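To make the exact-versus-approximate distinction concrete, the sketch below answers the same query, P(Rain | WetGrass = true), in a tiny three-variable Bayesian network where Rain and Sprinkler both influence WetGrass. It does so in two ways: exact inference by enumerating every assignment, and approximate inference by rejection sampling, one of the sampling methods mentioned above. All probabilities are made-up illustrative values.

```python
import itertools
import random

# Hypothetical conditional probability tables (illustrative numbers only).
P_RAIN = 0.2
P_SPRINKLER = 0.1  # assumed independent of rain, for simplicity
P_WET_GIVEN = {  # P(WetGrass=True | Rain, Sprinkler)
    (True, True): 0.99,
    (True, False): 0.8,
    (False, True): 0.9,
    (False, False): 0.0,
}


def joint(rain: bool, sprinkler: bool, wet: bool) -> float:
    """Joint probability of one full assignment of the three variables."""
    p = P_RAIN if rain else 1 - P_RAIN
    p *= P_SPRINKLER if sprinkler else 1 - P_SPRINKLER
    p_wet = P_WET_GIVEN[(rain, sprinkler)]
    return p * (p_wet if wet else 1 - p_wet)


def exact_p_rain_given_wet() -> float:
    """Exact inference: sum over every assignment of the hidden variables."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    evidence = sum(
        joint(r, s, True) for r, s in itertools.product((True, False), repeat=2)
    )
    return numerator / evidence


def approx_p_rain_given_wet(n_samples: int, seed: int = 0) -> float:
    """Approximate inference: rejection sampling from the network."""
    rng = random.Random(seed)
    kept = rained = 0
    for _ in range(n_samples):
        rain = rng.random() < P_RAIN
        sprinkler = rng.random() < P_SPRINKLER
        wet = rng.random() < P_WET_GIVEN[(rain, sprinkler)]
        if wet:  # keep only samples consistent with the evidence
            kept += 1
            rained += rain
    return rained / kept if kept else float("nan")


print(f"exact:  {exact_p_rain_given_wet():.4f}")
print(f"approx: {approx_p_rain_given_wet(100_000):.4f}")
```

The sampled estimate should land close to the exact value of about 0.69. Enumeration is trivial in a network this small, but its cost grows exponentially with the number of hidden variables, whereas the cost of sampling is governed by how many samples are drawn. That is why sampling scales to large networks, at the price of the estimation error visible in its output.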

The table below compares the main features of model inference and approximate inference.

| Feature | Model Inference | Approximate Inference |
| --- | --- | --- |
| Accuracy | High (aims for exact output) | Varies (estimates output) |
| Speed | Moderate to fast | Fast (saves computation) |
| Complexity | Can be high for large models | Optimised for scalability |
| Use cases | General AI applications | Probabilistic models, large-scale AI |
| Reliability | Consistent outputs | May introduce uncertainty |

Key AI Concepts Explained: Inference, Training, Reasoning, Prediction, and Fine-Tuning

- Inference: The model uses its learned parameters to generate outputs for new data. For instance, an LLM generating a response to a user’s query is performing inference.

- Training: The phase in which the AI model “learns” by analysing a large dataset and adjusting its internal parameters. For example, a language model is exposed to vast amounts of text so that it learns grammar and context.

- Reasoning: A model's ability to draw conclusions or make decisions based on input data. This goes beyond mere prediction, requiring the model to connect the dots or justify its outputs, as when solving a puzzle or answering “why” questions.

- Prediction: The act of forecasting future outcomes or labelling data based on input. For example, predicting whether an email is spam or not.

- Fine-Tuning: Adjusting a pre-trained model with a smaller, specialised dataset to improve its performance on specific tasks. For instance, adapting a general LLM to understand legal jargon for legal document analysis.
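The difference between training, fine-tuning, inference and prediction can be sketched with the spam example above. The sketch uses a toy Naive Bayes classifier rather than an LLM, and the class name `NaiveBayesSpamFilter` and every message are hypothetical. Training learns word and class counts from a small general dataset. Because this is a count-based model, the `fine_tune` step simply updates those pre-trained counts with a few bank-specific messages; an LLM would instead be fine-tuned by continuing gradient-based training. Inference applies the learned parameters to an unseen message, and the label it returns is the prediction.

```python
import math
from collections import Counter

Doc = tuple[str, str]  # (message text, label)


class NaiveBayesSpamFilter:
    """Toy multinomial Naive Bayes classifier with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts: Counter = Counter()

    def train(self, docs: list[Doc]) -> None:
        """Training: learn parameters (word and class counts) from data."""
        for text, label in docs:
            self.doc_counts[label] += 1
            self.word_counts[label].update(text.lower().split())

    def fine_tune(self, domain_docs: list[Doc]) -> None:
        """Fine-tuning stand-in: update the pre-trained counts with domain data."""
        self.train(domain_docs)

    def predict(self, text: str) -> str:
        """Inference: apply the learned parameters to an unseen message."""
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            n_words = sum(counts.values())
            score = math.log(self.doc_counts[label] / total_docs)  # class prior
            for word in text.lower().split():
                if word in vocab:  # skip words never seen in training
                    score += math.log((counts[word] + 1) / (n_words + len(vocab)))
            scores[label] = score
        return max(scores, key=scores.get)  # the prediction: most likely label


general = [
    ("win free money now", "spam"),
    ("urgent transfer money now", "spam"),
    ("meeting at noon today", "ham"),
    ("project update for today", "ham"),
]
bank = [
    ("your transfer is complete", "ham"),
    ("transfer received thank you", "ham"),
]

model = NaiveBayesSpamFilter()
model.train(general)
print(model.predict("your transfer was received"))  # spam
model.fine_tune(bank)
print(model.predict("your transfer was received"))  # ham
```

Before fine-tuning, the word "transfer" has only been seen in spam, so the message is flagged. After the bank-specific data is added, the same message is classified as legitimate, even though the model's general training is unchanged.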

For example, suppose you want to build a chatbot for a bank's front-office operations. You would first train the LLM on general language data (or start from an existing pre-trained model), fine-tune it on bank-specific conversations, and then deploy it for inference to answer customer queries. If the chatbot explains why a transaction failed, it is employing reasoning; when it forecasts future account balances, it is making predictions.

Advantages of AI Inference

AI inference lies at the heart of deploying models in production. For organisations, it offers several advantages:

- Reliability: Well-designed inference delivers consistent, accurate responses, building trust among users and stakeholders.

- Scalability: Inference architectures can be optimised to handle millions of requests, crucial for large enterprises and consumer platforms.

- Cost Efficiency: Efficient inference reduces computational costs, especially when using approximate methods for complex models.

- Real-Time Performance: Fast inference allows AI systems to deliver instant responses, enhancing user experience in applications like customer service, healthcare, and finance.

- Customisation: Organisations can tailor inference pipelines to specific business needs, such as compliance requirements or regional preferences.
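Part of what makes the scalability and cost benefits above achievable is batching: accelerators such as GPUs can process many inputs in a single forward pass at a lower cost per input, so serving systems often group concurrent requests together. The sketch below shows only the grouping logic; `CountingModel` and `serve_batched` are hypothetical names, and the "model" just returns each input's length in place of a real forward pass while counting how many times it is called.

```python
from typing import Callable


class CountingModel:
    """Hypothetical stand-in for a model that processes a whole batch per call."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, batch: list[str]) -> list[int]:
        self.calls += 1  # one forward pass, however many inputs it holds
        return [len(text) for text in batch]  # placeholder output per input


def serve_batched(
    requests: list[str],
    model: Callable[[list[str]], list[int]],
    max_batch_size: int,
) -> list[int]:
    """Group queued requests into batches of at most max_batch_size."""
    outputs: list[int] = []
    for start in range(0, len(requests), max_batch_size):
        batch = requests[start : start + max_batch_size]
        outputs.extend(model(batch))  # outputs stay in request order
    return outputs


queue = [f"query {i}" for i in range(10)]
model = CountingModel()
results = serve_batched(queue, model, max_batch_size=4)
print(len(results), model.calls)  # 10 3
```

Ten requests are served with three model calls instead of ten. Production servers typically also flush a partially filled batch after a short wait, so that batching improves throughput without noticeably delaying individual responses.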

Conclusion

AI inference transforms trained models into practical tools that drive real-world solutions. While model inference prioritises accuracy, approximate inference offers speed and scalability where exactness is less critical. By understanding the differences among key AI concepts—such as training, reasoning, prediction, and fine-tuning—organisations can design intelligent systems that are both reliable and efficient. As LLMs and AI technologies evolve, mastering inference techniques will remain essential for building trustworthy and future-ready AI applications.