By John Morrell, Senior Director, Product Marketing, of Acceldata

As organizations rely more heavily on data analytics for their operations, they are capturing more data and feeding it into analytics data stores, with the goal of making more accurate decisions. Those decisions are only as good as the data behind them, so ensuring the reliability of this data is crucial.

The data used in analytics comes from many sources. Internally, it is generated by applications and stored in repositories; externally, it is obtained from service providers and independent data producers. Companies that build data products often rely on external sources for a significant portion of their data. For these companies the data itself is the product, so assembling it with high quality and reliability is paramount.

The term “shift left” comes from software development, where it means addressing potential issues and ensuring high quality right from the start. In data observability, it refers to a proactive strategy of incorporating observability practices at the early stages of the data lifecycle.

In practice, shifting left means integrating observability practices and tools into the data pipeline and infrastructure from the outset, rather than treating observability as an afterthought or adding it only in later stages. The primary goal is to identify and resolve data quality, integrity, and performance issues as early as possible, minimizing the likelihood that problems propagate downstream.

The Economic Implications of Poor-Quality Data

The “1 x 10 x 100 Rule,” a guideline used in software development and other processes, describes how the cost of fixing a problem grows at each stage. Detecting and fixing a problem in the development phase costs approximately $1; the cost rises to $10 when the problem is found in the QA/staging phase, and to $100 when it is found and fixed in production. In short, it is far more cost-effective to address issues as early as possible.

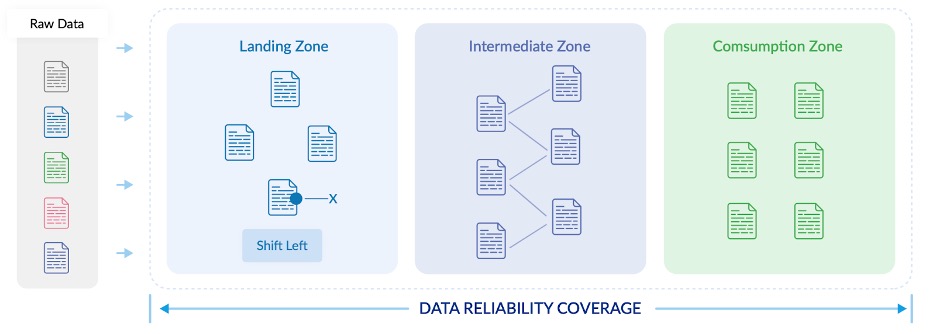

The same rule applies to data pipelines and supply chains. Detecting and resolving a problem in the landing zone costs around $1; the cost rises to $10 if the issue is found in the transformation zone, and to $100 if it is found and fixed in the consumption zone.
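To make the arithmetic concrete, here is a minimal sketch that applies the rule's relative costs ($1, $10, and $100 per issue, by zone) to a hypothetical count of issues. The issue counts and the function name `remediation_cost` are invented for illustration; they are not measurements from any real pipeline.

```python
# Relative cost of fixing one issue in each zone, per the 1 x 10 x 100 rule.
COST_PER_ISSUE = {"landing": 1, "transformation": 10, "consumption": 100}


def remediation_cost(issues_by_zone: dict) -> int:
    """Total relative cost of fixing issues, given the zone where each was detected."""
    return sum(COST_PER_ISSUE[zone] * count for zone, count in issues_by_zone.items())


# Hypothetical scenario: the same 20 issues, caught at different points.
caught_late = {"consumption": 20}
caught_early = {"landing": 15, "transformation": 5}

print(remediation_cost(caught_late))   # 2000
print(remediation_cost(caught_early))  # 65
```

Under these assumed counts, catching most issues in the landing zone cuts the relative cost from 2000 to 65, which is the economic argument for shifting checks left.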

To manage data and data pipelines effectively, data teams need to detect data incidents as early in the supply chain as possible. Doing so lets them optimize resources, control costs, and deliver the highest-quality data product possible.

When Data Reliability Shifts Left

Identifying issues has become increasingly challenging for data teams due to the evolving and complex nature of data supply chains. Several factors contribute to this complexity:

- Expanded sources: The number of data sources feeding the supply chain has grown significantly. Organizations now integrate data from diverse internal and external sources, which makes managing and processing that data effectively more complex.

- Advanced data transformation logic: The logic and algorithms used to transform data within the supply chain have become more sophisticated. Raw data undergoes complex transformations and calculations to yield valuable insights, which requires robust systems and processes to handle the intricacies involved.

- Resource-intensive processing: The processing requirements for data within the supply chain have escalated. With larger data volumes and more intricate operations, organizations must allocate substantial computing resources, such as servers, storage, and processing power, to handle the processing workload.

The diagram below illustrates data pipelines flowing from left to right: from the data sources through the data landing zone, the transformation zone, and the consumption zone. Traditionally, data reliability checks were performed mainly in the consumption zone. Modern best practices instead shift these checks left, into the data landing zone.

Addressing data incidents early lets organizations limit their impact and cost. It helps ensure that the data users consume is reliable and accurate, and it protects the integrity of downstream processes and decision-making. Ultimately, a shift-left approach to data reliability improves efficiency, reduces costs, and strengthens overall data quality and trustworthiness.

How Data Teams Can Shift Left to Improve Data Reliability

Implementing a shift-left approach in your data reliability solution requires certain capabilities. These include:

- Early Data Reliability Checks: Run data reliability tests before data enters the data warehouse or data lakehouse. This ensures that bad data is identified and filtered out early in the data pipelines, preventing it from propagating to the transformation and consumption zones.

- Support for Data-in-Motion Platforms: Enable support for data platforms such as Kafka and monitor data pipelines within Spark jobs or Airflow orchestrations. This allows for real-time monitoring and metering of data pipelines, ensuring timely identification of any reliability issues.

- File Support: Perform checks on various file types and capture file events to determine when incremental checks should be performed. This helps maintain data integrity and accuracy throughout the data pipelines.

- Circuit-Breakers: Use APIs to feed data reliability test results into your data pipelines so that data flow halts automatically when bad data is detected. Circuit-breakers prevent the spread of erroneous data and protect downstream processes.

- Data Isolation: Identify and isolate bad data rows so they are not processed further. This contains data issues and keeps them from affecting other processes.

- Data Reconciliation: Implement data reconciliation capabilities to ensure consistency and synchronization of data across multiple locations. This helps identify and resolve any discrepancies, ensuring data accuracy and reliability.
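The circuit-breaker, data isolation, and reconciliation capabilities above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not any vendor's API: rows are dictionaries, and the validation rules (`order_id` present, `amount` a non-negative number), the 5% failure threshold, and the names `check_batch`, `CircuitOpen`, and `reconcile` are all hypothetical. In a real pipeline, equivalent checks would run in the landing zone, before data is loaded into the warehouse or lakehouse.

```python
from dataclasses import dataclass, field


class CircuitOpen(Exception):
    """Raised to halt the pipeline when too much of a batch fails its checks."""


@dataclass
class CheckResult:
    good: list = field(default_factory=list)         # rows safe to pass downstream
    quarantined: list = field(default_factory=list)  # isolated bad rows


def is_valid(row: dict) -> bool:
    # Hypothetical reliability rules for an orders feed.
    amount = row.get("amount")
    return (
        row.get("order_id") is not None
        and isinstance(amount, (int, float))
        and amount >= 0
    )


def check_batch(rows: list, max_failure_rate: float = 0.05) -> CheckResult:
    """Isolate bad rows; trip the circuit breaker if too many rows fail."""
    result = CheckResult()
    for row in rows:
        (result.good if is_valid(row) else result.quarantined).append(row)
    failure_rate = len(result.quarantined) / len(rows) if rows else 0.0
    if failure_rate > max_failure_rate:
        # Circuit breaker: halt the data flow instead of passing bad data on.
        raise CircuitOpen(f"{failure_rate:.0%} of rows failed checks")
    return result


def reconcile(source_ids: set, target_ids: set) -> dict:
    """Compare record keys between two locations and report discrepancies."""
    return {
        "missing_in_target": sorted(source_ids - target_ids),
        "unexpected_in_target": sorted(target_ids - source_ids),
    }


if __name__ == "__main__":
    batch = [{"order_id": i, "amount": 10.0} for i in range(99)]
    batch.append({"order_id": None, "amount": 5.0})  # one bad row
    result = check_batch(batch)  # 1% failure rate: below the threshold
    print(len(result.good), len(result.quarantined))  # 99 1

    try:
        check_batch([{"order_id": 1, "amount": -3}, {"order_id": 2, "amount": 4}])
    except CircuitOpen as err:
        print("pipeline halted:", err)  # pipeline halted: 50% of rows failed checks

    print(reconcile({1, 2, 3}, {2, 3, 4}))
    # {'missing_in_target': [1], 'unexpected_in_target': [4]}
```

Quarantining rows rather than silently dropping them keeps the bad data available for investigation, while the threshold decides when isolated failures become a reason to stop the whole flow. The right threshold depends on the data product and is an assumption here.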

Shifting data reliability practices left frees data teams to focus on innovation while reducing costs and increasing trust in data. The approach makes data more usable and resilient, creating a robust and reliable data ecosystem that supports informed decision-making and drives organizational success.