By Andy Ruth, of Sustainable Evolution & James Wilt, Distinguished Architect

Artificial Intelligence (AI) has a long and substantial history, yet only recently, especially in the last year, has it taken the world by storm. Why, and what must one know to brave this new world of human-machine coexistence?

This primer is for those of you who haven’t yet had the opportunity to dig into GenAI as much as you’d like, and it aims to give you enough background to start researching the topics that interest you.

Background

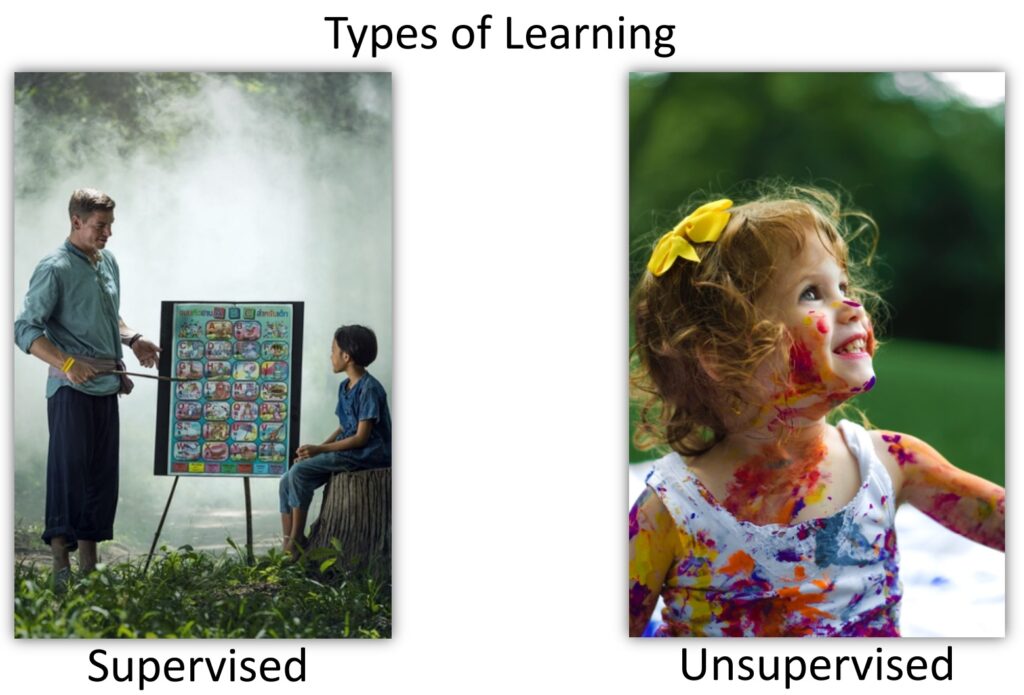

In the early days, much research and growth emerged from the era we now call Traditional ML/AI, in which machine learning wrangled farms and lakes of data into structures using what is known as Supervised Learning. Models learned to make predictions and create insights by imitating the labeled examples provided by the people who created the training data. This era introduced the world to new roles such as Data Scientist (model creator), Data Engineer (data wrangler), and Data Citizen (insights visualizer).

Generative AI (GenAI) using Large Language Models (LLMs) additionally leverages Unsupervised Learning (more precisely, self-supervised learning) on unstructured data to generate original content and insights. Creating these models requires much more data and time, and they offer a completely different interaction experience compared to traditional AI.

GenAI has reached a tipping point that opens doors to any consumer through the simple act of conversation. Most of us have heard of tools such as ChatGPT, Bard, Claude, and Bing, to name a few. Hopefully, you’ve also had the chance to research and play with them, or to design and implement a solution using their APIs directly. GenAI has also created new roles, such as Prompt Engineer (model response tuner), Content Curator (source quality controller), and AI Ethics Specialist (risk manager), who further refine and govern models to “think” (respond) in certain, prescribed ways.

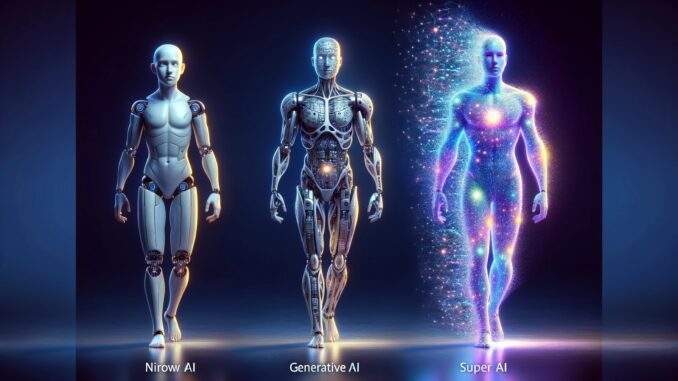

LLM < Generative AI < Narrow AI < General AI < Super AI

Within machine learning (ML) and artificial intelligence (AI), there are narrow and general AI models, and after those will come (or already has come) super AI.

- Narrow AI (Artificial Narrow Intelligence, ANI), also called weak AI, can only perform specific tasks bound within a limited (narrow) domain. Think of AWS DeepRacer, the fun little car AWS released pre-pandemic that used AI to race around a track, or any of the personal assistants out there.

- Generative AI (GenAI) refers to AI systems that can generate content, whether text, images, music, or other forms of media; examples include image generation models, text generation models, and music generation software. While they tend to mimic human intelligence (understand, learn, and perform intellectual tasks), they are still considered Narrow AI because they operate within the constraints of their programming and training and do not possess general intelligence or consciousness.

- Large Language Models (LLMs) are a subset of Generative AI specifically designed to understand, interpret, and generate human language. They power applications such as chatbots, text completion tools, and language translation.

- General AI (Artificial General Intelligence, AGI) represents AI with the ability to understand, learn, and apply intelligence across a wide range of tasks, at a level comparable to human intelligence. Currently, AGI is theoretical and does not exist in practical applications. It’s often depicted in science fiction but is not yet technologically achievable.

- Super AI (Artificial Superintelligence, ASI) describes a machine whose capabilities are orders of magnitude beyond those of a single human, across domains spanning the entire catalog of human knowledge and beyond. Such machines could also perform physical tasks. If you are gentle-minded, think C-3PO from Star Wars; if you are more aggressive, think The Terminator or The Matrix. The concept of ASI raises profound ethical, safety, and existential questions, such as the control problem (how to control or manage such an intelligence).

Focusing on Generative AI

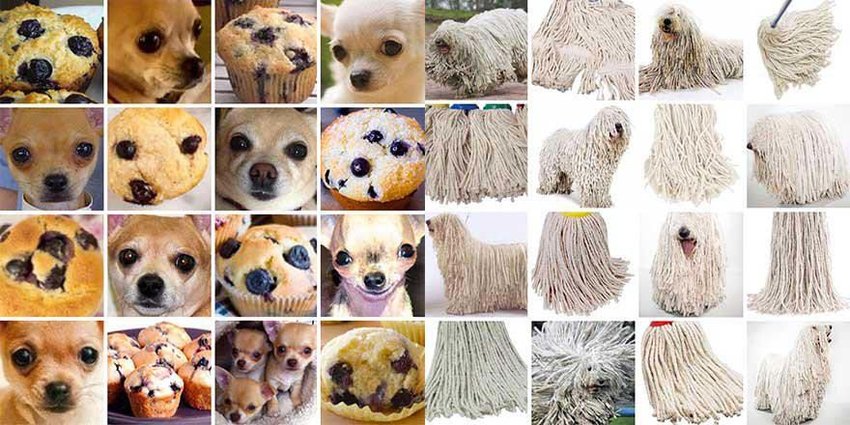

But this article is about GenAI. A key difference between Traditional ML/AI and GenAI is that Traditional AI models classify existing data (assign labels) and return results about that data. For example, a traditional model might be trained on a million pictures of cats and a million pictures of dogs, discovering patterns that let it analyze a new picture and determine whether it shows a dog or a cat. Below is an example of what Traditional AI can discern: Chihuahuas vs. blueberry muffins, and sheepdogs vs. mops.

ResearchGate – Do You See What I See? Capabilities and Limits of Automated Multimedia Content Analysis
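To make the supervised-learning idea concrete, here is a minimal sketch of a nearest-centroid classifier in Python, about the simplest way to learn from labeled examples. The two-number feature vectors and their labels are hypothetical stand-ins; a real cat/dog classifier would learn far richer features from the images themselves, typically with a deep neural network.

```python
from math import dist

# Hypothetical 2-D feature vectors (e.g., ear pointiness, snout length) with labels.
# These numbers are illustrative only, not real image features.
TRAINING_DATA = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.3, 0.8), "dog"),
    ((0.2, 0.9), "dog"),
]

def train_centroids(examples):
    """Average the feature vectors for each label (nearest-centroid training)."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

def classify(centroids, features):
    """Return the label whose centroid is closest (Euclidean distance) to the new example."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

centroids = train_centroids(TRAINING_DATA)
print(classify(centroids, (0.85, 0.25)))  # cat
```

The model can only answer with labels it was trained on; it classifies, it does not create.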

GenAI models focus on discovering patterns in data and then use those patterns to originate new insights and original material. For example, you can generate results outside the specific realm of the training data, such as asking a model to create an image of a car tire falling in love and eloping with a wagon wheel. Tokens and tokenization, as used by Large Language Models (LLMs), are a key part of the magic that makes this possible. Below is an example of what GenAI can create:

DALL-E – Here is the whimsical image depicting a car tire and a wagon wheel in a playful, romantic scenario, illustrating the concept of them falling in love and eloping

Tokenization

A token is the smallest unit of data a model processes. It can be a word, a portion of a word, an individual character or punctuation mark, or, for images, a small patch of pixels. The smaller the unit, the more tokens it takes to represent the same text, and the more compute power needed to respond to prompts. With larger units such as whole words, the smaller the vocabulary, the more out-of-vocabulary (OOV) words there are, and the poorer the response. All OOV words are assigned the same OOV token, so words such as corporal and digitization will receive the same token if they are OOV, which might make for a confusing response to a prompt. It might seem that a larger vocabulary is the way to go; however, the bigger the vocabulary, the more model parameters and resources are required.
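The OOV problem can be sketched with a fixed word-level vocabulary in Python. The tiny vocabulary and the `<unk>` token name below are illustrative assumptions; the point is only that every unknown word collapses to the same ID.

```python
# A hypothetical, tiny word-level vocabulary; real vocabularies hold tens of thousands of entries.
VOCAB = {"<unk>": 0, "the": 1, "model": 2, "reads": 3, "text": 4}

def encode(words, vocab):
    """Map each word to its ID, sending every unknown word to the shared <unk> ID."""
    unk_id = vocab["<unk>"]
    return [vocab.get(word, unk_id) for word in words]

ids = encode(["the", "model", "reads", "corporal", "digitization"], VOCAB)
print(ids)  # [1, 2, 3, 0, 0]
```

Both corporal and digitization become ID 0, so from the model's point of view they are indistinguishable.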

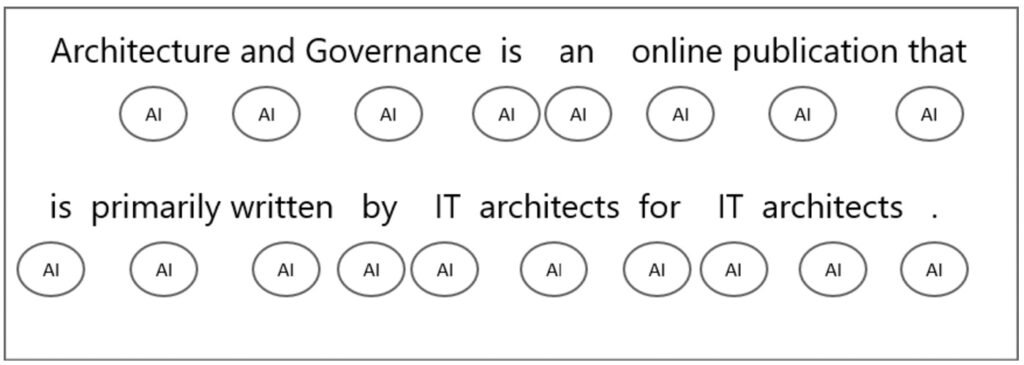

The process of breaking a dataset into tokens is called tokenization. For example, the sentence “Architecture and Governance is an online publication that is primarily written by IT architects for IT architects.” might be tokenized into:

18 tokens, one for each of the 17 words plus one for the period. Words like “and”, “is”, “an”, and “that” are common enough to be individual tokens. “Architecture”, “Governance”, “publication”, “written”, “by”, “IT”, and “architects” are tokens as well, considering their frequency and significance in the English language. The period at the end of the sentence is also treated as a separate token.
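A simple word-and-punctuation splitter is enough to reproduce the 18-token count for the example sentence. This is a minimal sketch using a regular expression, not how production LLM tokenizers work; subword tokenizers such as BPE would likely split the same sentence differently.

```python
import re

SENTENCE = ("Architecture and Governance is an online publication that is "
            "primarily written by IT architects for IT architects.")

def word_tokenize(text):
    """Split text into words and standalone punctuation marks."""
    # \w+ matches runs of letters/digits; [^\w\s] matches a single punctuation character.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = word_tokenize(SENTENCE)
print(len(tokens))   # 18
print(tokens[-2:])   # ['architects', '.']
```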

Tokenization is non-trivial. The example above illustrates punctuation, but not capitalization, contractions, or related word forms such as factor, refactor, and refactoring.

Breaking words into subwords (e.g., re + factor + ing) might make for a better model. Although cannot and can’t mean the same thing, their tokenization may differ because can’t contains an apostrophe. As you might expect, all of this has a big impact on model performance and response quality.

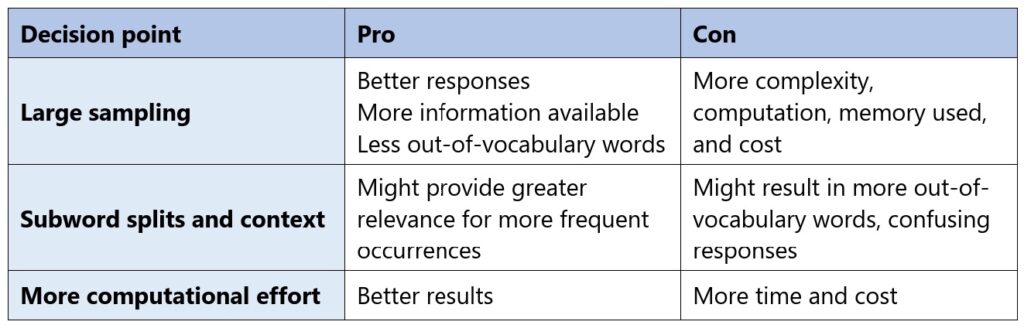

You will have to make architectural tradeoffs that impact performance, cost, and user experience:

Subword tokenization techniques (i.e., breaking words into smaller subword units, like word stems, prefixes, and suffixes) such as Byte Pair Encoding (BPE) and Google’s related approaches, WordPiece and SentencePiece (an unsupervised text tokenizer), help models handle rare and unknown words efficiently. Unigram language model tokenization starts from a large candidate vocabulary, iteratively prunes it based on token probabilities, and picks the most probable subword segmentation of each word, which also improves the handling of out-of-vocabulary words.
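The core of Byte Pair Encoding can be sketched in a few dozen lines: start from individual characters, then repeatedly merge the most frequent adjacent pair into a new symbol. This is a simplified illustration (no end-of-word markers, no byte-level handling), and the word frequencies are hypothetical, chosen to echo the factor/refactor/refactoring example.

```python
from collections import Counter

def get_pair_counts(corpus):
    """Count adjacent symbol pairs across all words, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in corpus.items():
        for pair in zip(symbols, symbols[1:]):
            pairs[pair] += freq
    return pairs

def merge_pair(corpus, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in corpus.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged

def learn_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` BPE merge rules from a word-frequency table."""
    corpus = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(corpus)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        corpus = merge_pair(corpus, best)
        merges.append(best)
    return merges, corpus

# Hypothetical word frequencies.
word_freqs = {"factor": 5, "refactor": 4, "refactoring": 3}
merges, corpus = learn_bpe(word_freqs, num_merges=7)
print(sorted(corpus))  # [('factor',), ('refactor',), ('refactor', 'i', 'n', 'g')]
```

The frequent stem factor becomes a single token, while the rarer ending of refactoring is still represented by smaller pieces; with more merges, those pieces would be combined too.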

If a model tokenizes a very large sample set, say trillions of words, using the same algorithm, it can better identify patterns in what typically comes before and after any token. The model can then use these patterns to generate new and original content, providing that magical conversational feel to the interaction.
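The idea of learning what typically follows each token can be illustrated with a bigram counter, a drastically simplified stand-in for an LLM. Real models use neural networks that condition on long stretches of preceding context rather than on a single previous token, so treat this only as a sketch of the underlying intuition.

```python
from collections import Counter, defaultdict

def train_bigrams(tokens):
    """Count how often each token follows each other token."""
    following = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        following[current][nxt] += 1
    return following

def most_likely_next(following, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    counts = following.get(token)  # .get avoids creating empty entries
    if not counts:
        return None
    return counts.most_common(1)[0][0]

corpus = "the cat sat on the mat . the cat ran .".split()
model = train_bigrams(corpus)
print(most_likely_next(model, "the"))  # cat
```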

Parameters

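After a model is trained, various settings (often called inference or sampling parameters, distinct from the learned model parameters mentioned earlier) govern how the model generates output, impacting the quality and delivery of results. At the consumer level, you don’t have free-form access to these, but at the API level, you may (e.g., OpenAI’s API for the models behind ChatGPT lets you set Temperature and Nucleus, via `temperature` and `top_p`).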

Context Window refers to the amount of text a language model can “see” and consider at one time, which influences how well the model can understand and respond to prompts. Larger context windows generally lead to better understanding of long-range dependencies and more coherent responses; however, they also require more computational resources. Context windows are measured in tokens (words or subwords): earlier models such as BERT (512 tokens) and GPT-3 (2,048 tokens) had small windows, while newer LLMs support far larger ones, in some cases more than 100,000 tokens.
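When a conversation grows longer than the context window, something has to be dropped. One simple policy, sketched below, keeps the most recent tokens and reserves room for the model’s reply. The `fit_to_context` helper and its parameters are hypothetical; real applications may instead summarize or selectively retrieve earlier content.

```python
def fit_to_context(token_ids, context_window, reserve_for_output=0):
    """Keep only the most recent tokens that fit in the window.

    `reserve_for_output` leaves room for the tokens the model will generate.
    """
    budget = context_window - reserve_for_output
    if budget <= 0:
        raise ValueError("context window too small for the requested output reserve")
    return token_ids[-budget:]  # slicing returns the whole list if it already fits

history = list(range(10))  # stand-in token IDs 0..9
print(fit_to_context(history, context_window=6, reserve_for_output=2))  # [6, 7, 8, 9]
```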

Prompt Temperature (a numerical value, usually between 0 and 1, although some APIs, such as OpenAI’s, accept values up to 2) controls the randomness or creativity of the model’s responses. Lower temperatures encourage the model to produce more predictable, conservative responses that closely align with the prompt and training data. Higher temperatures introduce more randomness and creativity, leading to unexpected, surprising, or even nonsensical outputs.
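Temperature is commonly implemented by dividing the model’s raw scores (logits) by the temperature before applying softmax, as in this minimal sketch. The logits are made-up values for three candidate tokens. Lower temperatures concentrate probability on the top token; higher ones flatten the distribution, which is why very high settings can produce the kind of gibberish shown later in this article.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw model scores (logits) into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; use greedy argmax for 0")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
for t in (0.2, 1.0, 1.8):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```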

Nucleus (Top-p) Sampling focuses on the cumulative probability mass of tokens by selecting the smallest set of tokens (the “nucleus”) whose combined probability reaches a certain threshold (p). This allows dynamic selection of tokens, choosing more or fewer options depending on the model’s confidence (the degree of certainty the model assigns to its predictions). A value of 0.8 means the model keeps the most likely tokens until their probabilities add up to 80%, cutting off the remaining low-probability “tail” (about 20% of the probability mass), and samples only from that nucleus.
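The nucleus selection described above can be sketched as a short filter over a token-to-probability mapping. The probabilities here are hypothetical. With p = 0.8, the loop stops as soon as the running total reaches 80%, and the surviving tokens are renormalized so they can be sampled from.

```python
def top_p_filter(probs, p):
    """Keep the smallest set of most-likely tokens whose probabilities sum to at least p.

    `probs` maps token -> probability; the kept probabilities are renormalized to sum to 1.
    """
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    nucleus, cumulative = [], 0.0
    for token, prob in ranked:
        nucleus.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in nucleus)
    return {token: prob / total for token, prob in nucleus}

# Hypothetical next-token probabilities.
probs = {"cat": 0.5, "dog": 0.35, "mop": 0.1, "muffin": 0.05}
print(top_p_filter(probs, 0.8))  # keeps only 'cat' and 'dog'
```

Because the cutoff depends on the running total, a confident model (one dominant token) may keep just one or two candidates, while an uncertain model keeps many; that is the “dynamic selection” mentioned above.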

Top-k Sampling considers only the k most probable tokens, truncating the probability distribution to include only those top k choices. This helps prevent highly improbable or irrelevant tokens from being selected. With k = 50, only the 50 most likely tokens would be considered for sampling.
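Top-k filtering is even simpler: sort, keep the k best, renormalize, and sample. The sketch below uses k = 2 rather than 50 so the effect is visible with a hypothetical four-token distribution; `random.choices` performs the weighted draw.

```python
import heapq
import random

def top_k_filter(probs, k):
    """Keep only the k most probable tokens and renormalize their probabilities."""
    top = heapq.nlargest(k, probs.items(), key=lambda item: item[1])
    total = sum(prob for _, prob in top)
    return {token: prob / total for token, prob in top}

def sample(probs, rng=random):
    """Draw one token according to the (filtered) probabilities."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

probs = {"cat": 0.5, "dog": 0.35, "mop": 0.1, "muffin": 0.05}  # hypothetical
filtered = top_k_filter(probs, k=2)
print(filtered)                            # only 'cat' and 'dog' remain
print(sample(filtered, random.Random(0)))  # 'cat' or 'dog', never 'mop' or 'muffin'
```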

What Could Possibly Go Wrong?

Hallucination refers to a phenomenon where an LLM perceives patterns or objects that are nonexistent or imperceptible to human observers, creating outputs that are nonsensical or altogether inaccurate (notice the misspelled word, Nirowv, in the title graphic). Hallucinations can occur due to various factors, including overfitting, biased or inaccurate training data, high model complexity, and training data that is insufficient or has large gaps, leaving the model facing unfamiliar edge cases.

When parameters go near or outside their prescribed limits, hallucinations can be generated.

Below are two responses to the prompt “Explain quantum entanglement,” with Temperature (typical range 0-1) set to different values:

With Temperature set to 0.2: Quantum entanglement is a phenomenon in which two or more particles become linked together in such a way that the quantum state of each particle cannot be described independently of the others, even when the particles are separated by a large distance.

With Temperature set to 1.8: Quantum entanglement is a quantum physics phenomenon in which particles sa kept at separated locations interact so that generally cannot indicate propertiesindicatedwnutility ofins independently of .fect wStates are ofottimske whenve uptmedAnahonuts pin/exchangeeduactedbruiquxe entropyener dzized theeGluryde resonAltered byton – clearly a hallucination.

Setting Temperature to 0.2 generates a response built from the tokens that most commonly precede and follow one another. When the temperature is set well outside its typical range, to 1.8, the model picks sequences of tokens that are rarely used together. Since the model generates new content based on patterns, the result does not make sense.

Bias refers to the tendency of AI models to favor certain outcomes over others, which can lead to unfair or discriminatory results. The following are common types of bias and the reasons they exist:

Data Bias stems from the training data used to teach the AI model. If the training data contains biased information, the AI model will likely reproduce and even amplify these biases.

Algorithmic Bias is when the algorithms themselves introduce bias. This is often due to assumptions or simplifications made during the model’s development. This type of bias can be harder to detect and correct because it’s embedded in the model’s mechanics.

Confirmation Bias happens when the model or the users unintentionally reinforce existing prejudices. Users might selectively use or interpret AI-generated content in a way that confirms their existing beliefs.
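A toy example shows how data bias can be reproduced and then amplified. The training counts are hypothetical and deliberately skewed 70/30. A model that learns those frequencies reproduces the skew, and greedy decoding (always choosing the single most likely word, roughly what a temperature near 0 does) turns a 70% tendency into a 100% outcome.

```python
from collections import Counter

# Hypothetical, deliberately skewed training data: the word that followed
# "The engineer said" in 100 imaginary training sentences.
training_next_words = ["he"] * 70 + ["she"] * 30

# "Training": learn the relative frequency of each next word.
counts = Counter(training_next_words)
total = sum(counts.values())
learned = {word: n / total for word, n in counts.items()}
print(learned)  # {'he': 0.7, 'she': 0.3}  (the skew is reproduced)

# Greedy decoding always picks the single most likely word, so every
# generated sentence uses "he": a 70% tendency becomes a 100% outcome.
greedy_choice = max(learned, key=learned.get)
print(greedy_choice)  # he
```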

Bias in Generative AI is a multifaceted issue with significant implications for fairness, ethics, and effectiveness. Addressing this bias requires a concerted effort involving diverse data collection, algorithmic fairness measures, continuous monitoring, human oversight, and a commitment to transparency and ethical AI practices.

Models & Networks

GenAI models require very large data sets and often many layers. Both the models and the algorithms they use are structured in various ways, and different models suit different types of data. A few notes on models and networks:

- Feedforward Neural Network (FNN) is the simplest neural network structure, where information flows in one direction from the input layer to the output layer, passing through one or more hidden layers in between. Each node connects only to nodes in the next layer, and the network models the relationship between current inputs and targets through layered transformations. An FNN is well suited for static input patterns without temporal dependencies. In essence, feedforward networks map fixed inputs to outputs.

- Recurrent Neural Network (RNN) is more complex, designed to process sequential information over time, like text or speech with temporal context. It has cyclic connections between nodes that span adjacent time steps, giving it a “memory” of previous states. Recurrent hidden layers receive input from the data and also their own previous output, propagating context forward. An RNN learns temporal dynamics by maintaining an internal state summarized as a hidden vector, which lets it capture dependencies across a sequence (plain RNNs struggle with very long-range dependencies, which variants such as LSTMs were designed to address). RNN architectures are therefore more suitable for language and time series tasks.

- Finite Impulse Response Network (FIR) is a type of artificial neural network characterized by feedforward connections and no feedback loops. Its strict feedforward architecture allows it to efficiently learn fixed transformations between vector inputs (which can include a finite window of recent inputs) and target outputs, without the complications of internal states or feedback dynamics. FIR networks trade away complexity in exchange for interpretability.

- Infinite Impulse Response Network (IIR) is a type of recurrent artificial neural network characterized by feedback connections that give the network memory over time. It leverages cyclic feedback connections to incorporate temporal context into its state representations. This equips the model to learn dynamic temporal patterns in sequential data like audio, speech, or video. The memory also aids prediction.
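The difference between the feedforward and recurrent families above comes down to whether state is carried between inputs. The minimal plain-Python sketch below uses hypothetical, untrained weights and omits biases; it only shows the data flow of a single tanh layer (FNN) versus the same layer with a recurrent hidden state (a simple RNN).

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

# Hypothetical weights for illustration; a real network learns these during training.
W_IN = [[0.5, -0.2], [0.1, 0.4]]   # input (2 features) -> hidden (2 units)
W_REC = [[0.3, 0.0], [0.0, 0.3]]   # hidden -> hidden (used only by the RNN)

def feedforward(x):
    """FNN layer: tanh(W_in x). The output depends only on the current input."""
    return [math.tanh(z) for z in matvec(W_IN, x)]

def recurrent(sequence):
    """Simple RNN: h_t = tanh(W_in x_t + W_rec h_{t-1}); h carries context forward."""
    h = [0.0] * len(W_REC)
    for x in sequence:
        h = [math.tanh(a + b) for a, b in zip(matvec(W_IN, x), matvec(W_REC, h))]
    return h

last_input = [1.0, 0.0]
print(feedforward(last_input))                 # same answer no matter what came before
print(recurrent([[0.0, 1.0], last_input]))     # depends on the earlier input...
print(recurrent([[1.0, 1.0], last_input]))     # ...so this final state differs
```

Fed the same last input, the FNN always gives the same answer, while the RNN’s output changes with the history that preceded it.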

*** Just these few bullets should suggest that you may want to consult a data scientist and discuss your objectives for a GenAI model before defining decision criteria for a GenAI solution. ***

To become more familiar with the models, a good starting point for exploration might be the Generative Adversarial Network (GAN) approach. A GAN pits a generator, which tries to produce realistic output, against a discriminator, which tries to tell real data from generated data; both are trained with backpropagation, pushing the generator to produce increasingly realistic output. Another point for exploration is Long Short-Term Memory networks (LSTMs), as this type of network has revolutionized speech and handwriting recognition.

Two good resources are Neural Networks and Deep Learning and OpenAI’s generative models research. The OpenAI article shows a publication date of 2016 but provides a great baseline to grow from, and at only about 3,000 words it is roughly the length of a typical academic or scientific article. With that as a base, you can follow the links in the article or try one of these web searches:

- generative AI model list

- transformer generative AI models

What’s Next?

The potential for using GenAI is tremendous, but the concerns that must be addressed are considerable. For example, content created purely by public GenAI models generally can’t be copyrighted (the U.S. Copyright Office, for one, requires human authorship), and laws around ownership are well behind the curve of AI innovation.

This creates a dichotomy between a hopeful future and an apocalyptic one.

Tremendous Potential:

- Boost creativity – help create art, stories, code, and designs

- Increase accessibility – customize content to individual abilities

- Augment the workforce – automated assistance for tasks

- Streamline the coding process – real-time information on available functions, parameters, and usage examples as the coder types, saving an average of 38 percent of development time

- Reduce costs – self-service content generation

- Accelerate discovery – surface insights from data

- Revolutionize industries – streamline drug discovery, personalize healthcare, optimize materials science, design and iterate on prototypes with astonishing speed, accelerating innovation

Concerns:

- Bias amplification – embed problematic stereotypes

- Misinformation/Harmful content – falsely fabricated content & offensive/dangerous material creation

- Job disruption – automating roles before workers can be retrained

- Data privacy violations – underlying training data may contain sensitive information, including personally identifiable information (PII)

- Ethical & organizational risks – ensuring responsible use, including accuracy, safety, and honesty

- Copyright and legal exposure – infringing on copyrights and other intellectual property rights

- Loss of agency – attribution and consent for generated assets

As long as technology outpaces ethical progress, GenAI, despite its promise, threatens to undermine truth, accountability, and social equity even further. Prioritizing research into robust content moderation, multimodal fact-checking, forensics, and bias mitigation is crucial.

This article scarcely scratches the surface of what currently exists in this new territory. It is meant to provide some decent starting points; however, it covers just a fraction of the immense and ever-growing library of material. That said, if there are specific future topics you’d like to see more on, let us know!