By Dr. Gopichand Agnihotram and Joydeep Sarkar

Abstract

This paper proposes a comprehensive and modular architecture for intelligent question answering over enterprise documents using Model Context Protocol (MCP) and agent-oriented design. It addresses the need for multimodal, context-aware document understanding that combines Retrieval-Augmented Generation (RAG) with intelligent orchestration of specialized agents. Our approach supports unstructured formats, including text, tables, images, and scanned documents, and introduces topic modeling as a complementary technique to improve retrieval relevance, especially in large or semantically diverse corpora. The system leverages large language models (LLMs) to deliver accurate and explainable responses. The architecture is designed for enterprise-grade deployment, incorporating high availability, robust fault tolerance, and performance-aware parallelization to ensure reliability and responsiveness across various industry contexts.

1. Introduction

Enterprises generate an enormous volume of documents that contain vital operational, legal, and financial information. These documents often include heterogeneous content formats, such as embedded tables, diagrams, scanned pages, and natural language text. Stakeholders in industries such as banking, insurance, legal services and healthcare face significant challenges in efficiently querying, interpreting and synthesizing knowledge from such documents.

Conventional Retrieval-Augmented Generation (RAG) pipelines are often limited to uniform text-based inputs and fail to adapt to multimodal or domain-specific semantics. Additionally, they typically lack explainability and dynamic orchestration capabilities. To overcome these limitations, we propose a robust architecture that integrates MCP, a protocol for managing contextual flow and model invocation, with a network of task-specific agents and enhanced semantic retrieval techniques like topic modeling.

2. Architectural Framework

2.1 The MCP Server

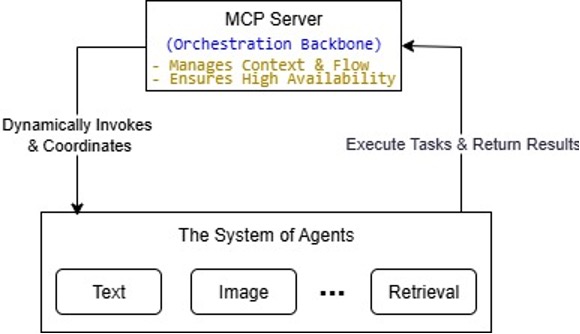

The Model Context Protocol server is the orchestration backbone of the system. It is responsible for maintaining user session context, parsing input metadata, dynamically invoking agents and routing data across components based on document type, content structure, or semantic intent. By preserving task continuity and managing control flow, MCP ensures coherence, efficiency and traceability across the question answering lifecycle.

Responsibilities include:

- Managing persistent query context (e.g., prior questions, embeddings, extracted features)

- Interpreting file metadata and semantic hints

- Orchestrating task pipelines through agent coordination

- Selecting appropriate LLMs or tools based on cost, latency, or capability profiles
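
The last responsibility, selecting a model by cost, latency, or capability, can be illustrated with a small routing function. This is a minimal sketch rather than the system's actual router: the `ModelProfile` fields, the profile names, and all numbers are hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str                  # hypothetical label, not a real model id
    cost_per_1k_tokens: float  # illustrative units
    p95_latency_ms: int
    capabilities: frozenset


def select_model(profiles, required, max_latency_ms=None):
    """Return the cheapest profile that covers every required capability
    and, if a budget is given, meets the latency limit."""
    eligible = [
        p for p in profiles
        if required <= p.capabilities
        and (max_latency_ms is None or p.p95_latency_ms <= max_latency_ms)
    ]
    if not eligible:
        raise LookupError("no model satisfies the request")
    # Cheapest first; latency breaks ties.
    return min(eligible, key=lambda p: (p.cost_per_1k_tokens, p.p95_latency_ms))


# Made-up profiles for illustration only.
PROFILES = [
    ModelProfile("large-hosted", 3.0, 2500, frozenset({"summarize", "reason", "tables"})),
    ModelProfile("small-local", 0.2, 400, frozenset({"summarize"})),
]

choice = select_model(PROFILES, {"summarize"})
```

A simple summarization request resolves to the cheaper profile, while a request that also needs table reasoning is forced onto the larger one.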

To meet enterprise-grade reliability and uptime requirements, the MCP Server is designed for high availability, eliminating it as a single point of failure. The architecture employs a cluster of stateless MCP server instances running behind a load balancer. All critical state, including user session context, job queues, and operational logs, is externalized to highly available, distributed data stores such as Redis and a fault-tolerant database. This design ensures that the failure of any single MCP instance is transparent to the end user, allowing another instance to seamlessly take over and continue processing, thereby supporting system resilience and continuous operation.
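
The stateless pattern can be sketched as follows. The `InMemoryStore` class is a stand-in for an external store such as Redis and is not a real client; the instance names and the session key format are likewise assumptions made for the example.

```python
import json


class InMemoryStore:
    """Stand-in for an external, shared store (for example Redis)."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def set(self, key, value):
        self._data[key] = value


class McpInstance:
    """Keeps no session state of its own; everything lives in the shared store."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def handle(self, session_id, question):
        raw = self.store.get(f"session:{session_id}")
        context = json.loads(raw) if raw else {"history": []}
        context["history"].append({"question": question, "served_by": self.name})
        self.store.set(f"session:{session_id}", json.dumps(context))
        return context


store = InMemoryStore()
mcp_a, mcp_b = McpInstance("mcp-a", store), McpInstance("mcp-b", store)
mcp_a.handle("s1", "What exclusions apply?")
# A different instance picks up the same session without any hand-off.
ctx = mcp_b.handle("s1", "And for evacuation?")
```

Because each request reloads its context from the store, a load balancer can send consecutive requests of one session to different instances.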

2.2 Agents in the System

Agents are modular service components that each handle a specific modality or computational task. This separation of concerns enables scalable and explainable processing, as well as improved fault isolation and domain adaptability.

Key agents include:

- Text Agent: Processes structured text from formats like PDFs and DOCX using parsers and rule-based chunking logic.

- Table Agent: Extracts and semantically interprets tables using Camelot, DeepDeSRT, or TAPAS.

- Image Agent: Converts image-based or scanned content into readable text via OCR systems.

- Topic Modeling Agent: Applies algorithms like LDA or BERTopic to group document sections into coherent topics for improved semantic indexing and retrieval.

- Retrieval Agent: Embeds and retrieves document chunks based on both semantic similarity and topic alignment.

- Answer Synthesis Agent: Uses LLMs to generate contextual, accurate responses grounded in retrieved knowledge.

Figure 1: MCP & Agents

2.3 Toolchain and Infrastructure

| Capability | Tools / Models | Description |
| --- | --- | --- |
| Vector Search / Retrieval | FAISS, Weaviate, Qdrant | Dense vector databases used for fast semantic search and retrieval |
| OCR (Optical Character Recognition) | Tesseract, AWS Textract, Google Vision OCR | Extracts text from scanned images or PDFs; supports multilingual content |
| Topic Modeling | Gensim (LDA), BERTopic, Top2Vec | Groups document chunks by semantic topics for better retrieval filtering |
| Table Understanding | TAPAS, DeepDeSRT, Donut, PubTables | Models that parse, understand, and answer questions over tabular data |
| Image Understanding | Donut, LayoutLMv3, DocFormer | Processes scanned or structured images with layout-aware vision models |
| Language Models (LLMs) | GPT-4, Claude 3, Mistral, LLaMA 3 | Used for answer synthesis, reasoning, summarization, and classification |
| Multimodal Embedding | CLIP, BLIP-2, Florence | Joint image-text embedding for enhanced retrieval and alignment |
| Chunking Strategies | LangChain, Custom Token-Based Slicers | Adaptive chunking for documents based on token limits, sections, or tables |
| Document Classification | SciSpacy, LayoutLM, RoBERTa-based models | Classifies document types or sections to inform downstream processing |

3. Workflow and Orchestration

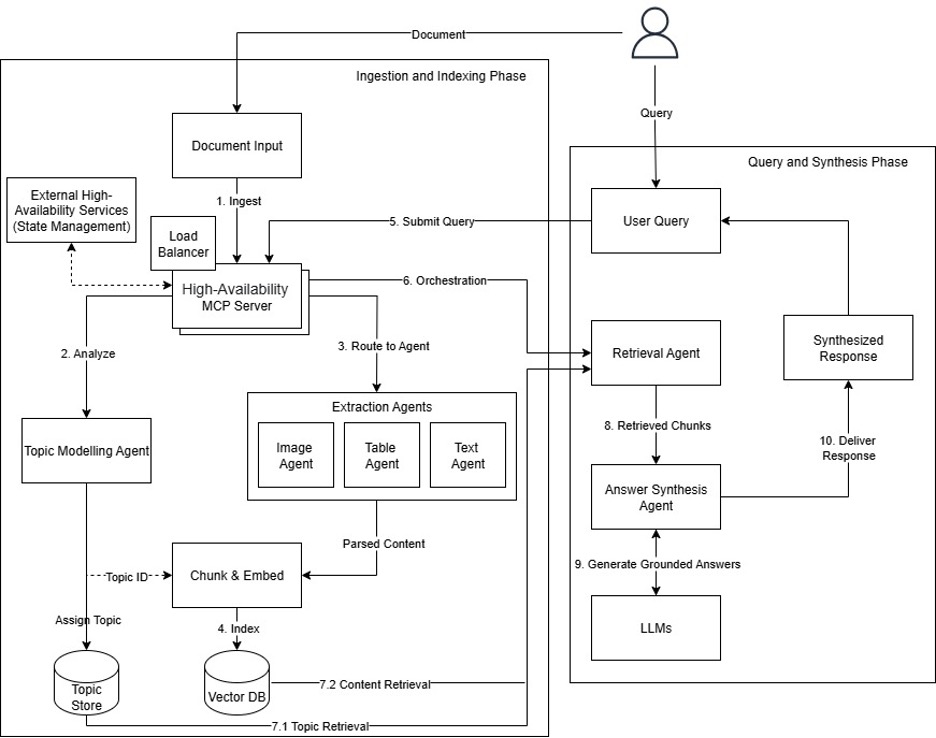

Step 1: Query Submission

Users initiate a query through a front-end interface, optionally uploading one or more documents. Each request includes user metadata and contextual intent. For example:

“Summarize premium exclusions in this scanned travel policy.”

Step 2: Context Initialization and Topic Assignment

Upon receiving the query, the MCP server performs pre-analysis, identifying document types and formats. The document is passed to the Topic Modeling Agent, which infers high-level thematic structures (e.g., ‘Coverage Terms’, ‘Claims Process’, ‘Exclusions’) and assigns topic tags to each document chunk.

Step 3: Content Extraction

The MCP routes documents to the appropriate extraction agent:

- Image Agent for scanned forms

- Table Agent for tabular financial data

- Text Agent for standard textual sections

These agents return parsed outputs, which are further annotated with topic labels, token limits, and metadata like page numbers.
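
The routing and annotation in this step can be sketched as a dispatch table. The segment kinds (`"scanned"`, `"table"`, `"text"`), the agent functions, and the metadata keys are hypothetical; the agents here only tag their input instead of running OCR or table parsing.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    kind: str     # assumed labels: "scanned", "table", or "text"
    page: int
    payload: str


@dataclass
class ParsedChunk:
    text: str
    metadata: dict = field(default_factory=dict)


def image_agent(seg):
    return ParsedChunk(f"[ocr] {seg.payload}")    # placeholder for real OCR


def table_agent(seg):
    return ParsedChunk(f"[table] {seg.payload}")  # placeholder for table parsing


def text_agent(seg):
    return ParsedChunk(seg.payload)


AGENTS = {"scanned": image_agent, "table": table_agent, "text": text_agent}


def route(segment, topic_label):
    """Dispatch a segment to its agent, then annotate the parsed output."""
    agent = AGENTS.get(segment.kind, text_agent)  # unknown kinds fall back to text
    chunk = agent(segment)
    chunk.metadata.update(
        {"page": segment.page, "topic": topic_label, "agent": agent.__name__}
    )
    return chunk


chunk = route(Segment("scanned", 3, "Exclusions: war, self-inflicted injury"), "Exclusions")
```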

Step 4: Embedding and Semantic Retrieval

All extracted chunks are embedded into vector representations, augmented with topic annotations. When a query arrives, the Retrieval Agent must first map it to the most relevant topic(s) to enable the dual-filter approach. To balance accuracy, speed, and cost, our primary method for this is embedding similarity to topic centroids. During ingestion, a representative “centroid” embedding is calculated for each topic cluster. At query time, the user’s query is embedded, and its vector is compared against these pre-computed centroids to find the closest topical match, a process that is nearly instantaneous and avoids additional LLM calls.
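
The centroid mapping described above can be sketched in plain Python. The three-dimensional vectors and topic names are toy values; taking the centroid as the arithmetic mean of a topic's chunk embeddings and comparing by cosine similarity are assumptions, since the text does not fix either choice.

```python
import math
from collections import defaultdict


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def topic_centroids(chunks):
    """chunks: iterable of (topic_id, embedding). Returns {topic_id: mean embedding}."""
    grouped = defaultdict(list)
    for topic_id, vec in chunks:
        grouped[topic_id].append(vec)
    return {
        topic: [sum(col) / len(vecs) for col in zip(*vecs)]
        for topic, vecs in grouped.items()
    }


def closest_topics(query_vec, centroids, top_n=1):
    """Rank topics by cosine similarity between the query and each centroid."""
    ranked = sorted(centroids, key=lambda t: cosine(query_vec, centroids[t]), reverse=True)
    return ranked[:top_n]


# Ingestion time: toy embeddings tagged with their topic.
chunks = [
    ("exclusions", [0.9, 0.1, 0.0]),
    ("exclusions", [0.8, 0.2, 0.0]),
    ("claims", [0.1, 0.9, 0.1]),
    ("claims", [0.0, 0.8, 0.2]),
]
centroids = topic_centroids(chunks)

# Query time: one embedding, a handful of comparisons, no LLM call.
matched = closest_topics([1.0, 0.0, 0.0], centroids)
```

The query-time cost grows with the number of topics rather than the number of chunks, which is why this step stays cheap.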

With the relevant topic(s) identified, the Retrieval Agent computes both query embedding and topic alignment scores to select the most semantically relevant and topically consistent chunks. This dual-filter approach, semantic similarity plus topic clustering, improves precision, especially for ambiguous or multi-part queries.

The method for combining these scores is a critical design choice. Our system implements its dual-filter approach using a Hybrid Adaptive Boosting strategy, which applies the two filters sequentially. First, topic alignment acts as a “soft filter” by segmenting the search space into high-priority (on-topic) and lower-priority tiers. Second, semantic similarity is used to find the most relevant chunks within each tier. The ‘boosting’ mechanism then preserves the influence of the first filter by significantly elevating the scores of on-topic chunks in the final ranking. The result approaches the precision of a hard filter while retaining the robustness of a simple weighted score, because a strongly matching off-topic chunk can still surface.
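
One way to realize this tier-then-boost ranking is sketched below. The multiplicative `boost` factor, the `per_tier` cut-off, and the chunk dictionary layout are assumptions for illustration; the text does not specify how the boost is computed.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hybrid_rank(query_vec, query_topics, chunks, boost=1.5, per_tier=3):
    """chunks: list of dicts with 'id', 'topic_id', and 'embedding'."""
    on_topic, off_topic = [], []
    # Filter 1 (soft): split the search space into two tiers by topic alignment.
    for chunk in chunks:
        sim = cosine(query_vec, chunk["embedding"])
        tier = on_topic if chunk["topic_id"] in query_topics else off_topic
        tier.append((sim, chunk["id"]))
    # Filter 2: semantic similarity picks the best candidates inside each tier.
    on_topic = sorted(on_topic, key=lambda p: p[0], reverse=True)[:per_tier]
    off_topic = sorted(off_topic, key=lambda p: p[0], reverse=True)[:per_tier]
    # Boost: on-topic scores are elevated before the tiers are merged.
    scored = [(sim * boost, cid) for sim, cid in on_topic] + off_topic
    return [cid for _, cid in sorted(scored, key=lambda p: p[0], reverse=True)]


chunks = [
    {"id": "a", "topic_id": "exclusions", "embedding": [0.8, 0.6]},
    {"id": "b", "topic_id": "claims", "embedding": [0.9, 0.436]},
    {"id": "c", "topic_id": "exclusions", "embedding": [0.1, 0.995]},
]
ranking = hybrid_rank([1.0, 0.0], {"exclusions"}, chunks)
```

In this toy data, chunk `b` is off-topic but has the highest raw similarity. With the boost it drops behind the on-topic chunk `a`, yet it still outranks the weakly similar on-topic chunk `c`, which a hard topic filter would not allow.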

Step 5: Answer Generation

The Answer Synthesis Agent uses the retrieved chunks to construct a prompt and passes it to the most suitable LLM. Prompt templates include system instructions, citations, and user-specific constraints. The generated answer is validated against document provenance, ensuring traceability and regulatory compliance.
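
A prompt template of this kind, together with a basic provenance check, might look like the sketch below. The instruction wording, the `[n]` citation format, and the chunk fields (`text`, `file`, `page`) are illustrative assumptions, and the check only confirms that cited source numbers exist; it does not verify the claims themselves.

```python
import re


def build_prompt(question, chunks, constraints=None):
    """chunks: list of dicts with 'text', 'file', and 'page'."""
    sources = "\n".join(
        f"[{i}] ({c['file']}, p.{c['page']}) {c['text']}"
        for i, c in enumerate(chunks, start=1)
    )
    rules = [
        "Answer only from the numbered sources.",
        "Cite sources as [n] after each claim.",
        "If the sources do not contain the answer, say so.",
    ]
    rules += list(constraints or [])  # user-specific constraints
    instructions = "\n".join(f"- {r}" for r in rules)
    return f"Instructions:\n{instructions}\n\nSources:\n{sources}\n\nQuestion: {question}"


def check_citations(answer, n_sources):
    """Return (cited ids, whether every id refers to a supplied source)."""
    ids = {int(m) for m in re.findall(r"\[(\d+)\]", answer)}
    return ids, ids <= set(range(1, n_sources + 1))


retrieved = [
    {"text": "Evacuation is excluded for pre-existing conditions.", "file": "policy.pdf", "page": 4},
    {"text": "Claims must be filed within 30 days.", "file": "policy.pdf", "page": 9},
]
prompt = build_prompt("What exclusions apply to evacuation?", retrieved, ["Keep it under 100 words."])
cited, valid = check_citations("Pre-existing conditions are excluded [1].", len(retrieved))
```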

Step 6: Response Delivery and Logging

The system returns the synthesized answer to the user, often along with highlighted source snippets. MCP logs the full interaction trace for review, debugging, and continuous improvement.

4. Role of Topic Modeling in Enhancing Retrieval

Topic modeling plays a pivotal role in elevating the performance of RAG pipelines, especially when dealing with long documents, heterogeneous corpora, or queries that require contextual nuance. In this system, the Topic Modeling Agent analyzes each document using either probabilistic (e.g., LDA) or transformer-based methods (e.g., BERTopic) to infer latent topics that structure the content.

These topic clusters serve as a semantic map, segmenting documents into coherent units that share topical similarity. During retrieval, the query is first mapped to the most relevant topic clusters, which acts as a filter on top of vector similarity. This ensures that the retrieved chunks are not only semantically similar in embedding space but are also topically relevant.

For example, a query like “How are claims processed for emergency evacuation?” might embed semantically close to both ‘Evacuation Procedures’ and ‘Reimbursement Policies’. Topic modeling ensures that retrieval narrows down to the right section, improving both relevance and efficiency.

Moreover, topic modeling enables hierarchical or faceted retrieval, allowing the system to dynamically shift between document sections or adjust focus based on evolving user interaction. In the case of lengthy contracts or reports, topic tags can help surface overview summaries first and dive deeper as required.

4.1 Practical Considerations for Topic Model Management

A production-grade topic model must be managed throughout its lifecycle. We address two key operational challenges: determining the optimal number of topics and updating the model as new documents are added.

Optimal Topic Determination: Instead of using a fixed number of topics, our system employs a data-driven approach. For models like BERTopic, the optimal number of topics is determined automatically during the clustering phase. For probabilistic models like LDA, we automate the evaluation of different topic counts using coherence scores to find the most semantically meaningful configuration. This ensures topics are neither too broad nor too granular.
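
For the LDA case, the sweep over topic counts can be sketched as below. The scoring function is injected so the example stays self-contained; in practice it could train an LDA model for each count and return a coherence score, for instance via Gensim's `CoherenceModel`. The scores in the usage example are made-up values, not measurements.

```python
def pick_topic_count(candidates, coherence_fn):
    """Evaluate each candidate topic count and return (best count, all scores).

    coherence_fn(k) is assumed to train or load a model with k topics and
    return a coherence score where higher is better.
    """
    scores = {k: coherence_fn(k) for k in candidates}
    best = max(scores, key=scores.get)
    return best, scores


# Made-up coherence values standing in for real model evaluations.
toy_scores = {5: 0.41, 10: 0.52, 15: 0.49}
best_k, scores = pick_topic_count([5, 10, 15], toy_scores.get)
```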

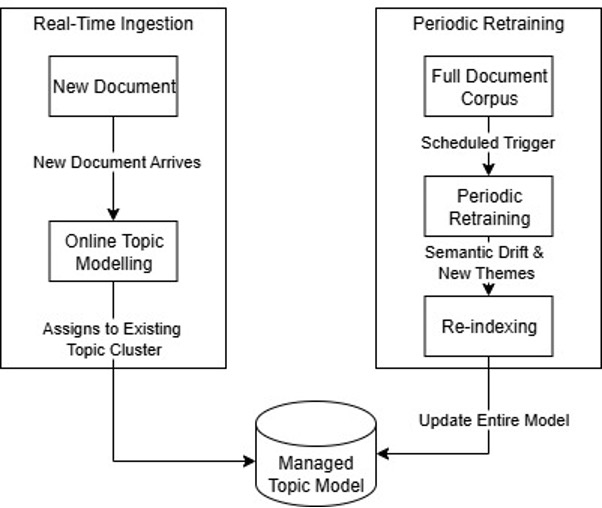

Handling Corpus Evolution: A static topic model becomes stale. To manage this, our system supports two complementary strategies. For real-time ingestion, we use online topic modeling, which efficiently assigns new documents to existing topic clusters to maintain stability. To capture semantic drift and discover new themes over time, we perform periodic retraining of the entire model in a staged environment. This full refresh is followed by a managed re-indexing process, ensuring the topic-based retrieval enhancement remains accurate and relevant as the enterprise knowledge base evolves.

Figure 2: Handling Corpus Evolution

4.2 Data Architecture for Topic-Based Retrieval

To implement this efficiently, topic information is stored strategically. The topic_id for each document chunk is stored as metadata alongside its vector in the vector database. This allows for highly efficient pre-filtering during retrieval. The topic clusters themselves, specifically their centroid embeddings and human-readable descriptions, are maintained in a separate, lightweight store for the initial, rapid query-to-topic mapping step. This separation ensures both fast mapping and efficient, filtered semantic search.
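
The two stores can be pictured with the following sketch. Apart from `topic_id`, which the text names, the record types and field names are hypothetical, and plain Python lists stand in for the vector database and the topic store.

```python
import math
from dataclasses import dataclass


@dataclass
class ChunkRecord:
    chunk_id: str
    embedding: list
    topic_id: int   # stored as metadata next to the vector
    page: int


@dataclass
class TopicRecord:
    topic_id: int
    centroid: list
    description: str


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query_vec, records, allowed_topics, k=2):
    """Pre-filter on topic metadata, then rank the survivors by similarity."""
    candidates = [r for r in records if r.topic_id in allowed_topics]
    candidates.sort(key=lambda r: cosine(query_vec, r.embedding), reverse=True)
    return [r.chunk_id for r in candidates[:k]]


# Lightweight topic store used for the query-to-topic mapping step.
TOPICS = [
    TopicRecord(7, [0.6, 0.5], "Exclusions"),
    TopicRecord(3, [1.0, 0.0], "Claims Process"),
]
# Chunk store: vectors with topic_id metadata.
RECORDS = [
    ChunkRecord("c1", [0.9, 0.1], 7, 4),
    ChunkRecord("c2", [0.2, 0.9], 7, 5),
    ChunkRecord("c3", [1.0, 0.0], 3, 9),
]

hits = search([1.0, 0.0], RECORDS, allowed_topics={7}, k=1)
```

Chunk `c3` is the nearest vector overall but is never scored, because the metadata filter removes it before the similarity search runs.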

Figure 3: Reference Architecture

5. Use Cases

5.1 Insurance Analysis

Insurance underwriters process travel or health policies to answer queries like “What exclusions apply to evacuation coverage?” The Topic Modeling Agent helps separate sections into claims, coverage, and exclusions, ensuring that the retrieval stage surfaces only the most relevant clauses.

5.2 Credit Evaluation

In financial decisioning, documents with earnings tables and debt schedules are queried to answer “How has the revenue grown over 3 years?” Topic modeling pre-clusters document sections, helping the Table Agent focus on ‘Financial Summary’ topics for extraction.

5.3 Legal Contract Review

Lawyers working with multi-section contracts can ask “List all indemnification clauses.” Topic-based filtering ensures the Retrieval Agent pulls only relevant segments from ‘Liability’ or ‘Indemnity’ sections before LLM summarization.

5.4 Healthcare Claim Processing

In healthcare, claims and scanned receipts are queried with questions like “Total amount claimed for pharmaceuticals?” The Image Agent performs OCR, while topic modeling ensures that pharmaceutical-related expenses are grouped during retrieval.

6. Implementation Stack

- FastAPI backend for MCP and agents

- LangGraph or custom agent runners for task execution

- Redis or SQLite for temporary context caching

- Gensim / BERTopic for topic inference

- OpenAI SDK / HuggingFace for LLM access

The system uses FastAPI as the backend framework for the MCP server and agent communication due to its asynchronous capabilities, high performance, and ease of development with automatic documentation and validation support. To coordinate complex task flows across agents, it incorporates LangGraph or custom runners that allow stateful and multi-step orchestration tailored to specific document processing pipelines.

For context caching and lightweight storage of embeddings or session data, Redis is used in high-throughput scenarios, while SQLite serves as a portable and zero-dependency alternative for development or single-node deployment. The topic modelling layer combines Gensim, which is well-suited for interpretable LDA modelling on large corpora, and BERTopic, which leverages transformer-based embeddings for more nuanced topic detection in semantically diverse documents.

Large Language Model (LLM) access is facilitated through vendor SDKs for commercial APIs, such as the OpenAI SDK for GPT-4 and the corresponding vendor SDK for Claude, and through Hugging Face Transformers to support open-source models like Mistral or LLaMA 3, enabling flexible routing strategies based on cost, latency, or regulatory requirements.

The MCP server exposes RESTful endpoints—/mcp/invoke for initiating tasks, /mcp/context for retrieving session information, and /mcp/route for dynamically dispatching tasks to the appropriate agent—making it easy to integrate with frontends or external systems.

7. Fault Tolerance and Error Handling

In a distributed, multi-agent architecture, a robust fault tolerance strategy is essential for system reliability and user trust. Our framework addresses this through a multi-layered approach, managed centrally by the MCP server.

- Graceful Degradation and Fallbacks: Agents are designed to provide a best-effort result rather than fail completely. For instance, if a primary table recognition model fails, the Table Agent can fall back to a simpler parser. If a premium LLM API is unresponsive, the system can route the request to a secondary or local model. This ensures service continuity even when individual components are impaired.

- Intelligent Orchestration and Recovery Paths: The MCP server acts as the central error handler, tracking the state of each task. It can dynamically alter the workflow based on component health. If a non-critical step like topic modelling fails, the MCP can bypass it and proceed with standard semantic retrieval. For documents that repeatedly fail processing (a “poison pill”), the MCP moves the job to a Dead-Letter Queue (DLQ) for offline analysis, preventing it from halting the entire ingestion pipeline.

- Proactive Validation and Confidence Scoring: To prevent errors from propagating, agents enforce strict input/output data schemas. Furthermore, critical agents like OCR and Answer Synthesis generate confidence scores alongside their output. The MCP uses these scores as a signal for quality, allowing it to flag low-confidence answers for user caution or route them for human-in-the-loop review, ensuring the final output is not just generated, but trustworthy.
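
The three mechanisms above can be sketched together. The handler names, the retry count, and the 0.7 confidence threshold are hypothetical values chosen for the example, and a Python list stands in for the Dead-Letter Queue.

```python
def run_with_fallbacks(handlers, payload):
    """Try each handler in order; return (result, handler name) from the first success."""
    errors = []
    for handler in handlers:
        try:
            return handler(payload), handler.__name__
        except Exception as exc:
            errors.append(f"{handler.__name__}: {exc}")
    raise RuntimeError("; ".join(errors))


def ingest(job, handlers, dead_letters, max_attempts=3):
    """Retry a job; after repeated failure, park it in the DLQ instead of blocking."""
    for _ in range(max_attempts):
        try:
            return run_with_fallbacks(handlers, job)
        except RuntimeError:
            continue
    dead_letters.append(job)
    return None


def gate(answer, confidence, threshold=0.7):
    """Flag low-confidence output for human review instead of returning it as-is."""
    status = "ok" if confidence >= threshold else "needs_review"
    return {"answer": answer, "confidence": confidence, "status": status}


def primary_table_model(job):
    raise TimeoutError("model unavailable")  # simulated outage


def simple_parser(job):
    if "corrupt" in job:
        raise ValueError("unreadable input")
    return job.upper()


dlq = []
handlers = [primary_table_model, simple_parser]
ok = ingest("q3 table", handlers, dlq)          # served by the fallback parser
failed = ingest("corrupt scan", handlers, dlq)  # ends up in the DLQ
```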

7.1 Performance, Latency, and Parallelization

To ensure a responsive user experience, our architecture distinguishes between offline ingestion latency and real-time query latency, employing specific strategies to optimize both.

Offline Ingestion Throughput: The computationally intensive ingestion pipeline (topic modelling, extraction, embedding) is optimized for throughput. The system processes multiple documents in parallel using a distributed task queue. For very large documents, chunking and embedding tasks are further parallelized at the page or section level, enabling horizontal scaling to handle high-volume workloads efficiently.
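
Section-level parallelism can be sketched with a local thread pool. This is a single-process stand-in for the distributed task queue the text describes, and `embed_section` is a placeholder that only counts tokens instead of calling an embedding model.

```python
from concurrent.futures import ThreadPoolExecutor


def embed_section(section):
    # Placeholder for extraction plus embedding of one page or section.
    return {"section": section, "n_tokens": len(section.split())}


def ingest_document(sections, max_workers=4):
    """Process the sections of one document concurrently."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map returns results in input order, so chunk order is preserved.
        return list(pool.map(embed_section, sections))


results = ingest_document(["Coverage terms apply", "Claims process", "Exclusions list here now"])
```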

Real-Time Query Performance: The latency-sensitive query workflow (retrieval -> synthesis) is engineered for speed. Most of the heavy computation is shifted to the ingestion phase. At query time, our dual-filter retrieval acts as a powerful performance optimization, using efficient metadata filtering on topic tags to drastically reduce the vector search space. Furthermore, the system employs semantic caching for common queries and can stream the final answer token-by-token to the user, significantly improving perceived performance by delivering the start of a response in seconds.
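
Semantic caching can be sketched as a lookup keyed on query embeddings. The 0.95 similarity threshold and the linear scan over entries are simplifying assumptions; a production cache would also need eviction and invalidation when documents change.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticCache:
    """Returns a stored answer when a new query is close enough to a cached one."""

    def __init__(self, threshold=0.95):
        self.threshold = threshold
        self._entries = []  # list of (query embedding, answer)

    def put(self, query_vec, answer):
        self._entries.append((query_vec, answer))

    def get(self, query_vec):
        best = max(self._entries, key=lambda e: cosine(query_vec, e[0]), default=None)
        if best is not None and cosine(query_vec, best[0]) >= self.threshold:
            return best[1]
        return None  # cache miss: fall through to retrieval and synthesis


cache = SemanticCache()
cache.put([1.0, 0.0], "Evacuation is excluded for pre-existing conditions.")
hit = cache.get([0.99, 0.05])  # near-duplicate phrasing of the cached query
miss = cache.get([0.0, 1.0])   # unrelated query
```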

8. Strategic Benefits

This architecture delivers a powerful combination of automation, modularity, and contextual intelligence:

- Multimodal Support: Images, tables, and rich text formats handled natively

- Semantic RAG++: Topic modeling improves retrieval granularity and relevance

- Auditability: Full pipeline logs improve explainability and compliance

- Flexibility: Domain-specific extensions via custom agents and templates

- Cost Efficiency: Optimized model routing reduces unnecessary LLM calls

9. Roadmap

Upcoming capabilities include:

- Active Learning from User Feedback

- LangGraph-style Autonomous Planning

- Topic-Aware Chunk Prioritization

- Real-Time Streaming Ingestion

- Fine-Tuned Retrieval Models Per Topic Cluster

10. Conclusion

Incorporating topic modeling within an MCP-based RAG framework allows for smarter orchestration and enhanced semantic understanding of documents. By intelligently routing documents through modality-specific agents and aligning retrieval with topic-based segmentation, the system significantly improves accuracy, transparency, and domain adaptability in enterprise-grade question answering.

Appendix

A. Sample API Call

    POST /mcp/invoke

    {
      "input": "Summarize cash flows from this document",
      "user_id": "user123",
      "doc_type": "pdf",
      "file_name": "2023_financials.pdf"
    }
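
On the server side, a request body like the one above could be parsed and validated as sketched here. The field names come from the sample call; the set of allowed `doc_type` values is an assumption, since only "pdf" appears in the sample, and the validation rules are illustrative.

```python
import json
from dataclasses import dataclass

FIELDS = ("input", "user_id", "doc_type", "file_name")
ALLOWED_DOC_TYPES = {"pdf", "docx", "image"}  # assumed; only "pdf" appears in the sample


@dataclass
class InvokeRequest:
    input: str
    user_id: str
    doc_type: str
    file_name: str


def parse_invoke(body):
    """Parse a JSON request body and reject missing fields or unknown document types."""
    data = json.loads(body)
    missing = [name for name in FIELDS if name not in data]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")
    if data["doc_type"] not in ALLOWED_DOC_TYPES:
        raise ValueError(f"unsupported doc_type: {data['doc_type']}")
    return InvokeRequest(**{name: data[name] for name in FIELDS})


body = json.dumps({
    "input": "Summarize cash flows from this document",
    "user_id": "user123",
    "doc_type": "pdf",
    "file_name": "2023_financials.pdf",
})
request = parse_invoke(body)
```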

B. Libraries and Tools

- LangChain / LangGraph

- FAISS / Weaviate

- Gensim / BERTopic

- Tesseract / Textract

- llama.cpp / Ollama

- GPT-4 / Claude

C. Chunking Strategy

Our system employs a tiered chunking strategy. A baseline approach is used for simple content:

- Text: ~512 tokens per chunk

- Tables: One row or 5×5 cells per chunk

- Images: Captioned OCR text blocks
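
The baseline rules for text and tables can be sketched as follows. Whitespace-separated words stand in for model tokens here, which is an approximation; a real slicer would count tokens with the target model's tokenizer. The `header: value` row format is also an assumption.

```python
def chunk_text(text, max_tokens=512):
    """Split text into consecutive chunks of at most max_tokens words."""
    tokens = text.split()  # whitespace tokens approximate model tokens
    return [
        " ".join(tokens[i:i + max_tokens])
        for i in range(0, len(tokens), max_tokens)
    ]


def chunk_table_rows(header, rows):
    """Emit one chunk per row, pairing each value with its column header."""
    return [
        ", ".join(f"{h}: {v}" for h, v in zip(header, row))
        for row in rows
    ]


text_chunks = chunk_text("word " * 1030)
row_chunks = chunk_table_rows(["Year", "Revenue"], [[2022, 10], [2023, 12]])
```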

For complex structures like financial or scientific tables containing merged cells and nested headers, the system applies more sophisticated semantic chunking techniques:

- Recursive Header Propagation (Contextual Cell Chunking)

- Logical Sub-Table Segmentation
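
The paper names these techniques without defining them, so the sketch below is only one plausible reading of header propagation: blanks left by merged header cells are forward-filled, header levels are joined into a path per column, and each cell is emitted as a chunk that carries its full header context. The function names, the `>` separator, and the chunk format are all assumptions.

```python
def propagate_headers(header_rows):
    """Forward-fill blanks left by merged cells, then join header levels per column."""
    filled = []
    for row in header_rows:
        last, out = "", []
        for cell in row:
            last = cell or last  # a blank cell inherits the header to its left
            out.append(last)
        filled.append(out)
    return [" > ".join(part for part in column if part) for column in zip(*filled)]


def cell_chunks(paths, row):
    """Emit one chunk per data cell, prefixed with the row key and header path."""
    key = f"{paths[0]}={row[0]}"
    return [f"{key} | {path}: {value}" for path, value in zip(paths[1:], row[1:])]


# Two header levels; None marks a cell merged with the one to its left.
header_rows = [
    ["", "Revenue", None, "Debt"],
    ["Region", "2022", "2023", "2023"],
]
paths = propagate_headers(header_rows)
chunks = cell_chunks(paths, ["EMEA", 10, 12, 4])
```

Each resulting chunk, such as `Region=EMEA | Revenue > 2023: 12`, can be embedded on its own without losing the meaning that the nested headers provided.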