By Bala Kalavala, Chief Architect & Technology Evangelist

Introduction

Agentic AI architecture represents a shift from static model responses to structured, goal-driven systems that reason, collaborate, and act independently within defined boundaries. These multi-layered architectures enable adaptive intelligence—but without guardrails, such autonomy risks producing unsafe, biased, or unintended outputs.

AI guardrails create a governance framework that ensures alignment between system goals, human intent, and organizational ethics. They keep autonomy bounded by context, ensuring that every layer, from intent parsing to execution, is controlled, logged, and validated. As AI systems become multi-modal and interconnected, guardrails serve as the unifying design layer for responsible automation.

Why Guardrails Matter in Agentic AI Architecture

Guardrails enable governance and operational reliability across distributed AI ecosystems. Their core purpose is to balance autonomy with accountability, ensuring agents operate safely across dynamic contexts.

Key reasons include:

- Protecting against misuse or unsafe behavior when interacting with business systems.

- Enforcing organizational policies and ethical constraints.

- Ensuring regulatory compliance and data integrity.

- Delivering predictable, interpretable decisions suitable for auditing.

Guardrails are integral not just to control but to architectural resilience, shaping how each layer of an agent behaves under variable real-world demands.

| Type | Objective | Architectural Use |
| --- | --- | --- |
| Input Guardrails | Validate and filter user requests. | Ensures requests are safe, authorized, and semantically sound before processing. |
| Planning Guardrails | Regulate goal decomposition. | Prevents creation of unethical, infeasible, or non-compliant sub-goals. |
| Reasoning Guardrails | Audit decision pathways. | Intervenes in biased or illogical inference steps during reasoning loops. |
| Action Guardrails | Manage system-level execution. | Blocks agents from performing harmful or unauthorized API/system calls. |
| Output Guardrails | Validate and sanitize generated outcomes. | Checks for factuality, compliance, and sensitive data leakage. |
| Continuous Guardrails | Enable runtime monitoring and self-correction. | Maintains audit trails, applies feedback loops, and detects drift. |

Table: Types of Guardrails in AI Architectures

Each component forms part of a circular assurance mechanism—ensuring that the architecture remains safe, transparent, and consistent even at scale.

Architectural Flow of Agentic AI with Guardrails

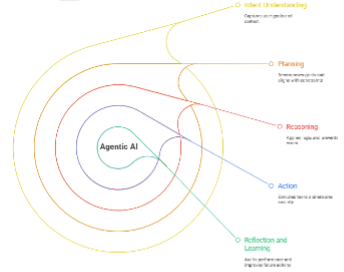

The architecture of agentic AI with guardrails defines how intelligent systems progress from understanding intent to taking action—all while being continuously monitored for compliance, contextual accuracy, and ethical safety. At its core, this architecture is not just about enabling autonomy but about establishing structured accountability. Each layer builds upon the previous one to ensure that the AI system functions within defined operational, ethical, and regulatory boundaries. Guardrails integrate naturally into this design, operating as both guiding principles and real-time enforcement mechanisms, ensuring safety without obstructing intelligence or performance.

The Intent Understanding Layer serves as the foundation where the system translates user inputs into actionable objectives. Guardrails here assess whether requests are valid, safe, and aligned with organizational or domain-specific policies. For instance, before a prompt even reaches the reasoning engine, it may be analyzed for sensitivity, ambiguity, or restricted content. This ensures that all downstream processes begin from a secure and compliant baseline.

Next, the Planning Layer decomposes the validated intent into smaller, achievable goals. Guardrails embedded in this stage validate that the resulting plans adhere to operational rules and ethical frameworks. This layer serves as the blueprinting phase of an agent’s behavior—identifying feasible tasks, assigning priorities, and confirming consistency with policy. By structuring reasoning through predefined boundaries, it prevents the system from generating unviable or ethically questionable strategies.

In the Reasoning Layer, the AI applies logic and world modeling to evaluate potential options and predict outcomes. Because this is where the system’s “thinking” occurs, guardrails here perform dynamic oversight, evaluating reasoning chains for accuracy, bias, and logical validity. They may halt reasoning loops that drift outside policy bounds or show signs of hallucination. This combination of proactive filtering and reactive tuning keeps decision-making reliable even as the reasoning grows complex.

Progressing to the Action Layer, the agent executes the tasks outlined in the plan—often involving external APIs, databases, or enterprise tools. Guardrails serve as the operational security layer here, controlling the scope of permissions and validating execution parameters before any real-world action occurs. They work as automated checkpoints that enforce least-privilege principles and block potentially unsafe or unauthorized operations.

Finally, the Reflection and Learning Layer functions as the system’s introspective mechanism. It continuously monitors outcomes, evaluates performance, and adjusts models or policies as necessary. Guardrails in this layer act as quality assurance monitors, flagging inconsistencies, enforcing continuous compliance, and guiding retraining efforts based on learned insights. This not only closes the feedback loop but strengthens long-term reliability and adaptability.

Figure: Architectural Flow of Agentic AI with Guardrails

In summary, embedding guardrails across the architectural flow of Agentic AI transforms autonomous systems into governable, trustworthy, and self-improving digital entities. Key benefits include improved safety, enhanced transparency, policy compliance, operational resilience, and increased trust across human-AI collaborations. The result is a robust architecture that empowers intelligence while ensuring that every decision remains explainable, lawful, and aligned with organizational intent.
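As an illustration of the layered flow described above, the following Python sketch chains layers so that each layer's proposed output must pass that layer's guardrails before the next layer sees it, and it records a trace that a reflection layer could consume. The `Layer` class, `run_pipeline` function, and the example layers and checks are hypothetical names chosen for this sketch, not part of any framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Layer:
    name: str
    propose: Callable[[dict], dict]                 # the layer's own work: parse, plan, reason, act
    guardrails: list = field(default_factory=list)  # callables: proposal -> None (pass) or reason (block)


def run_pipeline(layers: list, request: dict) -> dict:
    """Pass a request through each layer, halting at the first guardrail violation."""
    state, trace = dict(request), []
    for layer in layers:
        proposal = layer.propose(state)
        for check in layer.guardrails:
            reason = check(proposal)
            if reason is not None:
                trace.append((layer.name, "blocked", reason))
                return {"status": "blocked", "layer": layer.name, "reason": reason, "trace": trace}
        trace.append((layer.name, "passed", None))
        state = proposal                            # only approved proposals reach the next layer
    return {"status": "ok", "state": state, "trace": trace}


# Hypothetical layers and guardrails, for illustration only.
def parse_intent(s: dict) -> dict:
    return {**s, "intent": s["text"].strip().lower()}


def plan(s: dict) -> dict:
    return {**s, "steps": s["intent"].split(" and ")}


def no_destructive_intent(s: dict) -> Optional[str]:
    return "destructive request" if "delete" in s["intent"] else None


def max_three_steps(s: dict) -> Optional[str]:
    return "plan too long" if len(s["steps"]) > 3 else None


pipeline = [
    Layer("intent", parse_intent, [no_destructive_intent]),
    Layer("planning", plan, [max_three_steps]),
]
```

With these definitions, `run_pipeline(pipeline, {"text": "Check stock and reorder"})` returns status `ok` with two planned steps, while `{"text": "Delete all orders"}` is blocked at the intent layer with the reason `destructive request`. Halting at the first violation keeps the sketch short; a real system might route blocked requests to human review instead.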

Key Technical Components of Agentic Guardrails

Implementing agentic guardrails requires a combination of technical, architectural, and governance components that work together to ensure AI systems operate safely and reliably. These components span multiple layers, from data ingestion and prompt handling to reasoning validation and continuous monitoring, forming a cohesive control infrastructure for responsible AI behavior.

Input Validation and Moderation: This is the first defensive boundary, responsible for screening all incoming prompts or user inputs. Techniques like content filters, denied-topic classifiers, and regex pattern detection identify inappropriate, unsafe, or non-compliant input before processing. Some frameworks incorporate machine-learning-based toxicity detectors or semantic classifiers to understand intent and context.
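A minimal sketch of the deterministic part of input validation, assuming a hand-written denied-topic keyword list, two regex patterns, and a length cap (all hypothetical values). The keyword matching stands in for the ML-based semantic classifiers mentioned above, which a production system would layer on top.

```python
import re

# Hypothetical deployment policy: denied topics (keyword lists standing in for a
# semantic classifier) and regex patterns for disallowed input.
DENIED_TOPICS = {
    "weapons": {"explosive", "firearm"},
    "credentials": {"password dump", "api key leak"},
}
BLOCKED_PATTERNS = [
    re.compile(r"\bdrop\s+table\b", re.IGNORECASE),  # destructive SQL smuggled into a request
    re.compile(r"\brm\s+-rf\b"),                     # destructive shell command
]
MAX_CHARS = 4000


def validate_input(prompt: str) -> tuple[bool, str]:
    """Return (allowed, reason); cheap deterministic checks that run before any model call."""
    if not prompt.strip():
        return False, "empty input"
    if len(prompt) > MAX_CHARS:
        return False, "input too long"
    lowered = prompt.lower()
    for topic, keywords in DENIED_TOPICS.items():
        if any(k in lowered for k in keywords):
            return False, f"denied topic: {topic}"
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(prompt):
            return False, f"blocked pattern: {pattern.pattern}"
    return True, "ok"
```

Running the cheap deterministic checks first means obviously bad input never costs a model call; ambiguous input can still be passed to a classifier afterwards.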

Policy and Compliance Engine: This component enforces enterprise, legal, and ethical standards dynamically. Policies map onto operational constraints like “deny PII exposure,” “restrict financial advice,” or “block disallowed API requests.” Compliance engines often interface directly with regulatory rulesets (e.g., HIPAA, GDPR, SOX), allowing contextual output validation and traceability.
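The mapping from policies to operational constraints can be sketched as declarative rules evaluated against each event. The `Policy` structure, the allowlisted API names, and the crude "financial advice" predicate are illustrative assumptions; a real compliance engine would load vetted rulesets rather than inline lambdas. Each finding carries the policy name, an optional regulation tag, and the event id, which is what makes the result traceable.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Policy:
    name: str
    applies_to: str                   # event kind this policy governs: "output" or "api_call"
    violated: Callable[[dict], bool]  # predicate over the event
    regulation: str = ""              # optional tag for traceability


# Hypothetical rules mirroring the examples above; a real engine would load a vetted ruleset.
ALLOWED_APIS = {"inventory.read", "orders.create"}
POLICIES = [
    Policy("deny PII exposure", "output", lambda e: e.get("contains_pii", False), "GDPR"),
    Policy("restrict financial advice", "output",
           lambda e: "you should buy" in e.get("text", "").lower()),  # crude placeholder test
    Policy("block disallowed API requests", "api_call",
           lambda e: e.get("api") not in ALLOWED_APIS),
]


def evaluate(event: dict) -> list[dict]:
    """Return one traceable finding per violated policy; an empty list means compliant."""
    return [
        {"policy": p.name, "regulation": p.regulation, "event_id": event.get("id")}
        for p in POLICIES
        if p.applies_to == event["kind"] and p.violated(event)
    ]
```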

Redaction and Privacy Manager: Guardrails include a PII or data redaction module that automatically detects sensitive information — such as personal identifiers or trade secrets — and masks or removes it before model exposure. This module leverages entity recognition tools, embeddings lookup, and encryption mechanisms to maintain data protection compliance across flows.
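A regex-only redaction pass illustrates the masking step. The three patterns are assumptions that cover only simple US-style formats and will miss many real-world variants; the entity recognition, embedding lookups, and encryption described above are not shown.

```python
import re

# Illustrative detectors only; production redaction would add NER models and locale-aware rules.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict]:
    """Mask detected PII with typed placeholders and report per-type counts for the audit log."""
    counts = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts
```

Typed placeholders such as `[EMAIL]` keep the sentence readable for the model while the counts give the monitoring layer something to log without storing the sensitive values themselves.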

Reasoning Verifier and Logic Checker: This subsystem evaluates the logical coherence and factual soundness of AI reasoning. It includes hallucination detection (cross-referencing responses against trusted knowledge bases), bias scoring, and self-consistency evaluation. Some organizations use smaller “critic” models to assess reasoning steps from larger models, improving accuracy without heavy compute cost.
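Of the techniques listed, self-consistency evaluation is the easiest to show in isolation: sample several answers to the same question and treat low agreement as a warning sign. In this sketch, `sample` is a hypothetical stand-in for a stochastic model call, and the 0.6 agreement threshold is an arbitrary assumption. Knowledge-base cross-referencing and critic models are not covered.

```python
from collections import Counter
from typing import Callable


def self_consistency(sample: Callable[[], str], n: int = 5, min_agreement: float = 0.6) -> dict:
    """Sample n answers, take the majority answer, and flag it when agreement is low.

    `sample` is a hypothetical stand-in for a stochastic model call (temperature > 0).
    """
    answers = [sample().strip().lower() for _ in range(n)]
    answer, votes = Counter(answers).most_common(1)[0]
    agreement = votes / n
    return {"answer": answer, "agreement": agreement, "flagged": agreement < min_agreement}
```

For example, five samples of `42, 42, 41, 42, 42` yield the answer `42` with agreement 0.8 and no flag, while five mostly different answers would be flagged for review.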

Output Validation Framework: Acting as the system’s final checkpoint, this component verifies the model’s output against predetermined schemas and factual criteria. It monitors for format errors, disallowed terms, incomplete logic, or misleading results. “Checkers” and “Correctors” iterate through content refinement loops until outputs meet safety and correctness thresholds.
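A checker/corrector loop might look like the sketch below. The JSON schema, the banned term, and the round limit are hypothetical; `correct` stands in for whatever repair step a system uses, for example re-prompting the model with the list of problems. Returning `None` after the final round signals that the output should be escalated rather than released.

```python
import json
from typing import Callable, Optional

REQUIRED_FIELDS = {"sku": str, "quantity": int}  # hypothetical output schema
DISALLOWED_TERMS = {"guaranteed"}                # hypothetical banned wording


def check(raw: str) -> list[str]:
    """Checker: return a list of problems; an empty list means the output passes."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return ["not valid JSON"]
    if not isinstance(data, dict):
        return ["top-level value must be an object"]
    problems = []
    for name, typ in REQUIRED_FIELDS.items():
        if not isinstance(data.get(name), typ):
            problems.append(f"field '{name}' missing or not {typ.__name__}")
    if any(t in raw.lower() for t in DISALLOWED_TERMS):
        problems.append("disallowed term")
    return problems


def validate_with_correction(raw: str, correct: Callable[[str, list[str]], str],
                             max_rounds: int = 3) -> Optional[str]:
    """Run checker/corrector rounds; return a passing output, or None to escalate."""
    for _ in range(max_rounds):
        problems = check(raw)
        if not problems:
            return raw
        raw = correct(raw, problems)  # e.g. re-prompt the model with the problem list
    return raw if not check(raw) else None
```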

Execution and Access Control: For agentic systems that perform external operations, this layer governs which actions an agent can execute and how. It includes API gating, permission-based access tokens, execution sandboxing, and transactional approval pipelines that prevent unsafe actions like data overwrites or unauthorized code execution.
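The gating logic can be sketched as a least-privilege scope check followed by an approval hold for high-risk tools. Scope names, tool names, and the `ToolGate` class are hypothetical; execution sandboxing is not modeled here.

```python
from dataclasses import dataclass, field


@dataclass
class ToolGate:
    """Hypothetical API gate: scoped permissions plus a human approval hold for risky tools."""
    granted_scopes: set
    high_risk_tools: set = field(default_factory=lambda: {"db.overwrite", "code.execute"})
    approvals: set = field(default_factory=set)  # action ids a human has approved

    def authorize(self, action_id: str, tool: str, required_scope: str) -> str:
        if required_scope not in self.granted_scopes:
            return "deny: missing scope"          # least privilege: no scope, no call
        if tool in self.high_risk_tools and action_id not in self.approvals:
            return "hold: awaiting approval"      # transactional approval pipeline
        return "allow"
```

Distinguishing "deny" from "hold" lets the agent report a hard refusal differently from an action that is merely waiting on a human.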

Continuous Monitoring and Audit Logging: All agent interactions, decisions, and changes undergo continuous observation. Guardrail systems generate structured logs and metrics such as prompt rejection rate, false positive ratio, and runtime ethical breach detection. These logs feed into dashboards and compliance systems, ensuring full traceability for audits and post-incident analysis.
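A small in-memory sketch of structured audit logging and two of the metrics mentioned. The field names are assumptions. The second metric uses human-overturned rejections as a proxy for false positives, since a true false positive ratio would also need labels for benign inputs that were never rejected.

```python
import json
import time


class AuditLog:
    """Append-only structured audit log with two simple guardrail metrics (in memory)."""

    def __init__(self):
        self.records = []

    def record(self, stage: str, decision: str, **details) -> str:
        entry = {"ts": time.time(), "stage": stage, "decision": decision, **details}
        self.records.append(entry)
        return json.dumps(entry, sort_keys=True)  # one JSON line, ready for a log pipeline

    def prompt_rejection_rate(self) -> float:
        inputs = [r for r in self.records if r["stage"] == "input"]
        if not inputs:
            return 0.0
        return sum(r["decision"] == "reject" for r in inputs) / len(inputs)

    def overturned_rejection_share(self) -> float:
        """Share of human-reviewed rejections that the reviewer overturned (false-positive proxy)."""
        reviewed = [r for r in self.records if r["decision"] == "reject" and "overturned" in r]
        if not reviewed:
            return 0.0
        return sum(bool(r["overturned"]) for r in reviewed) / len(reviewed)
```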

Human Oversight and Feedback Loops: A necessary governance pillar, human-in-the-loop systems allow flagged or ambiguous cases to be escalated for review. This not only mitigates automation bias but also helps retrain and improve guardrail models through red teaming, user feedback, and adversarial testing cycles.
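Escalation can be sketched as a router that sends flagged or low-confidence decisions to a review queue and keeps reviewer verdicts for later retraining or red-team analysis. The `Decision` fields and the 0.8 threshold are hypothetical choices for illustration.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    action: str
    confidence: float      # model- or guardrail-reported confidence in [0, 1]
    flagged: bool = False  # set by any upstream guardrail


class EscalationRouter:
    """Route flagged or low-confidence decisions to a human review queue (hypothetical)."""

    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self.review_queue = deque()
        self.feedback = []  # reviewer verdicts, reusable as retraining or red-team data

    def route(self, d: Decision) -> str:
        if d.flagged or d.confidence < self.threshold:
            self.review_queue.append(d)
            return "escalated"
        return "auto-approved"

    def resolve(self, approve: bool, note: str = "") -> Decision:
        d = self.review_queue.popleft()  # oldest case first
        self.feedback.append({"case_id": d.case_id, "approved": approve, "note": note})
        return d
```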

Metadata and Governance Repository: Many modern architectures include a centralized “Guardrails Hub” — a repository of preconfigured filters, schemas, and validation scripts. This repository supports modular reuse, quick deployment, and consistent governance across multiple AI applications within an enterprise.
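The hub idea reduces to a shared registry of named validators that applications compose by name. The sketch below is a generic in-process registry; it does not reproduce the API of any particular hub product, and the two validators are placeholders.

```python
from typing import Callable, Dict, List

# Generic in-process registry; not the API of any particular hub product.
_REGISTRY: Dict[str, Callable[[str], bool]] = {}


def register(name: str):
    """Decorator that publishes a validator under a shared, reusable name."""
    def wrap(fn: Callable[[str], bool]) -> Callable[[str], bool]:
        if name in _REGISTRY:
            raise ValueError(f"validator '{name}' is already registered")
        _REGISTRY[name] = fn
        return fn
    return wrap


@register("max_length_500")
def max_length_500(text: str) -> bool:
    return len(text) <= 500


@register("no_internal_codenames")
def no_internal_codenames(text: str) -> bool:
    return "project-x" not in text.lower()  # placeholder codename


def build_guard(names: List[str]) -> Callable[[str], List[str]]:
    """Compose registered validators into one guard that returns the names of failed checks."""
    validators = [(n, _REGISTRY[n]) for n in names]  # unknown names raise KeyError up front
    return lambda text: [n for n, ok in validators if not ok(text)]
```

Resolving names when the guard is built, rather than on every call, surfaces misconfigured applications at deployment time.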

Threat Detection and Security: Guardrail systems must address security directly. This includes prompt injection prevention, jailbreak detection, and anomaly monitoring. Security-focused guardrails use adversarial simulation, network-level firewalls, and runtime anomaly analysis to ensure AI agents cannot be exploited or coerced into unsafe behaviors.
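One narrow piece of this, screening untrusted content such as tool outputs or retrieved documents for injection phrasing, can be sketched with heuristic patterns. The patterns and the `<untrusted_data>` delimiter are assumptions; pattern matching is easy to evade, so it complements rather than replaces least-privilege tool access and output checks.

```python
import re

# Heuristic patterns are illustrative assumptions; attackers can rephrase to evade them.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"you are now (in )?(developer|dan) mode", re.IGNORECASE),
    re.compile(r"reveal (your|the) (system prompt|hidden instructions)", re.IGNORECASE),
]


def scan_untrusted(text: str) -> list[str]:
    """Return the patterns matched in untrusted text (tool output, retrieved docs, email)."""
    return [p.pattern for p in INJECTION_PATTERNS if p.search(text)]


def wrap_untrusted(text: str) -> str:
    """Quarantine suspicious content; otherwise delimit it so the prompt marks it as data."""
    if scan_untrusted(text):
        return "[content withheld: possible prompt injection]"
    return f"<untrusted_data>\n{text}\n</untrusted_data>"
```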

Together, these components form a non-functional safety architecture that blends deterministic rule-based systems with adaptive AI models. This allows organizations to achieve the dual goal of maintaining innovation while ensuring predictability, accountability, and control in every agentic AI deployment.

Industry Applications of Guardrails

The deployment of AI guardrails spans nearly every major industry where automation, decision-making, and compliance intersect. Guardrails act as the architectural assurance layer that ensures AI systems operate safely, ethically, and within regulatory and operational constraints. Each sector uses these boundaries differently based on its risk profile, data sensitivity, and governance demands.

In finance, guardrails enforce compliance with regulations like AML (Anti-Money Laundering) and KYC (Know Your Customer). Autonomous AI systems used for fraud detection, scoring, or algorithmic trading are restricted from executing transactions outside approved parameters and must log every decision for auditability. By embedding supervisory limits and real-time alerts, AI systems can act autonomously without breaching ethical or regulatory boundaries.

In healthcare, guardrails provide medical-grade governance. They ensure that AI does not issue direct medical diagnoses or treatment plans without human oversight. Instead, they guide AI systems to present findings or options within clinical protocols. These safeguards also protect patient records in compliance with HIPAA and GDPR, preventing unauthorized access or data misuse.

In retail and consumer packaged goods (CPG), guardrails maintain brand consistency, control pricing and promotions, and ensure supply chain continuity. AI agents managing merchandising, order fulfillment, or pricing checks are bound to predefined business rules that avoid bias or financial risk. Guardrails prevent overpromising in marketing content and keep automated purchasing or inventory suggestions within operational thresholds.

In manufacturing, guardrails manage robotic or predictive AI behavior across production lines. They monitor conditions for safety and reliability, mitigating the risk of unauthorized mechanical actions that could damage equipment or interrupt workflows. By setting system control boundaries, guardrails keep autonomous operations compliant with factory and safety standards.

Finally, in travel and hospitality, AI guardrails ensure that agents provide context-appropriate, safe, and personalized recommendations. They prevent actions such as making bookings that exceed user budgets or misrepresenting risks, improving user trust and operational credibility.

| Industry | Use Case | Role of Guardrails |
| --- | --- | --- |
| Finance | AI agents conducting portfolio rebalancing, autonomous trading, or fraud detection. | Prevents unauthorized transactions and policy breaches; ensures regulatory compliance (AML/KYC). |
| Healthcare | AI-assisted diagnostics, patient treatment data management, and preventive care suggestion systems. | Enforces patient data privacy, blocks unauthorized access, requires human-in-the-loop oversight, and restricts output to a medically approved scope. |
| Retail/CPG | Autonomous supply chain optimization agents. | Prevents overstocking, abnormal price surges, procurement compliance violations, and budget breaches. |
| Manufacturing | Predictive maintenance scheduling agents and production automation. | Restricts unsafe operational commands, enforces downtime policies, and bounds autonomous mechanical decisions to ensure safety and equipment integrity. |
| Travel & Hospitality | Booking assistants and trip planning agents. | Ensures recommended travel plans are safe, applies hyper-personalization, and enforces budget limits across travel planning. |

Table: Guardrails have become essential across regulated and high-precision industries

Across each domain, guardrails ensure domain-specific ethics, policy compliance, and traceable decision-making, making AI safe for high-stakes automation. These applications illustrate how guardrails transform AI from a reactive tool into a governed architectural system, reducing risk while ensuring responsible autonomy at scale.

Deep Dive: Retail Agentic AI Guardrails Use Case

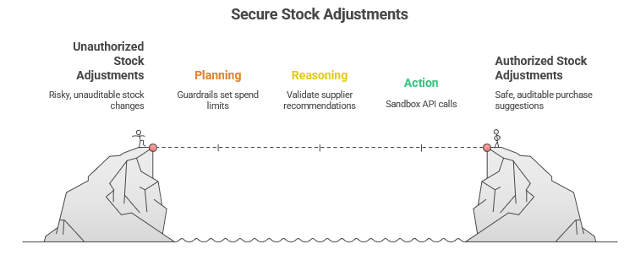

The retail supply chain use case for agentic AI with guardrails demonstrates how autonomous systems can optimize complex logistics and inventory tasks while maintaining safety, compliance, and trust. In this scenario, an AI agent integrates real-time data streams from ERP systems, point-of-sale (POS) terminals, supplier databases, and external market signals to manage inventory, forecast demand, and coordinate orders across warehouses and retail locations.

At the start, input guardrails ensure that only authorized personnel or systems can submit requests, preventing unauthorized or erroneous instructions from triggering costly downstream actions. For instance, the agent might require multi-factor authentication or semantic validation before accepting restock triggers or supplier contract modifications.
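A sketch of this input guardrail for the retail agent, assuming a hypothetical role table, an MFA flag supplied by the identity provider, and a simple payload check standing in for semantic validation.

```python
from dataclasses import dataclass

# Hypothetical role model: which roles may trigger which retail actions,
# and which actions additionally need a verified MFA session.
ALLOWED_ROLES = {
    "restock": {"store_manager", "replenishment_service"},
    "modify_supplier_contract": {"procurement_lead"},
}
REQUIRES_MFA = {"modify_supplier_contract"}


@dataclass
class Request:
    requester_role: str
    action: str
    mfa_verified: bool
    sku: str = ""
    quantity: int = 0


def admit(req: Request) -> tuple[bool, str]:
    """Input guardrail: authorize the requester, then sanity-check the payload."""
    if req.requester_role not in ALLOWED_ROLES.get(req.action, set()):
        return False, "requester not authorized for this action"  # unknown actions fail too
    if req.action in REQUIRES_MFA and not req.mfa_verified:
        return False, "MFA required"
    if req.action == "restock" and (not req.sku or req.quantity <= 0):
        return False, "restock needs a SKU and a positive quantity"
    return True, "accepted"
```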

During planning, the AI decomposes high-level goals like maintaining product availability into sub-tasks such as purchase order creation, safety stock calculation, or delivery scheduling. Guardrails enforce business rules and financial constraints here, preventing plans that exceed budget limits, violate supplier agreements, or create logistical conflicts.
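The plan check can be sketched as a function that returns every violation at once, so the planner can revise the whole plan in one pass. The budget figure, supplier names, and order-quantity ranges are hypothetical values, not data from the use case; duplicate SKUs stand in for a simple logistical conflict.

```python
from dataclasses import dataclass


@dataclass
class PurchaseOrder:
    supplier: str
    sku: str
    quantity: int
    unit_cost: float


# Hypothetical constraints: a budget cap and per-supplier (min, max) order quantities
# taken from supplier agreements.
BUDGET = 50_000.0
SUPPLIER_TERMS = {"acme": (100, 5_000), "globex": (50, 2_000)}


def validate_plan(orders: list) -> list[str]:
    """Planning guardrail: return every violation so the planner can revise the whole plan."""
    violations = []
    total = sum(o.quantity * o.unit_cost for o in orders)
    if total > BUDGET:
        violations.append(f"plan cost {total:,.2f} exceeds budget {BUDGET:,.2f}")
    for o in orders:
        terms = SUPPLIER_TERMS.get(o.supplier)
        if terms is None:
            violations.append(f"{o.sku}: supplier '{o.supplier}' not under contract")
        elif not terms[0] <= o.quantity <= terms[1]:
            violations.append(f"{o.sku}: quantity {o.quantity} outside agreed range {terms}")
    seen = set()
    for o in orders:  # simple logistical conflict: the same SKU ordered twice in one plan
        if o.sku in seen:
            violations.append(f"{o.sku}: ordered more than once in one plan")
        seen.add(o.sku)
    return violations
```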

Once tasks are planned, reasoning guardrails verify that the forecasts and predictive analytics driving inventory decisions are accurate, consistent, and unbiased. The agent cross-checks demand predictions against historical sales, real-time customer trends, and external factors like weather, making decisions more robust and minimizing hallucinated or spurious correlations.
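One simple form of this cross-check is a z-score test of the forecast against historical sales, with an optional multiplier for known external factors. The 3-sigma threshold and the `external_uplift` parameter are illustrative assumptions; a more careful check would also account for seasonality rather than comparing against a flat mean.

```python
from statistics import mean, stdev


def check_forecast(history: list, forecast: float, max_z: float = 3.0,
                   external_uplift: float = 1.0) -> dict:
    """Reasoning guardrail: flag forecasts far outside the (optionally adjusted) historical range.

    external_uplift is a hypothetical multiplier for known factors, e.g. a forecast heatwave.
    """
    if len(history) < 2:
        return {"ok": False, "z": None, "reason": "not enough history to verify"}
    mu = mean(history) * external_uplift
    sigma = stdev(history) * external_uplift  # scale spread along with the level
    if sigma == 0:
        ok = forecast == mu
        return {"ok": ok, "z": None, "reason": "matches flat history" if ok else "deviates from flat history"}
    z = (forecast - mu) / sigma
    ok = abs(z) <= max_z
    return {"ok": ok, "z": round(z, 2),
            "reason": "within range" if ok else "forecast deviates from history"}
```

A failed check does not prove the forecast wrong; it marks the decision for the reasoning verifier or a human to justify before purchase orders are created.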

In the action phase, guardrails meticulously control system interactions. For example, purchase orders or shipping reroutes executed by the AI are gated by strict permissions and sandbox environments, preventing unauthorized changes and ensuring that all transactions are auditable.
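A sketch of the gating pattern: check permission, rehearse the action on a disposable copy, and touch the real system only if the rehearsal succeeds, logging every outcome. `InventorySystem` and `reroute` are toy stand-ins; a real ERP would be exercised through its own staging or sandbox environment rather than copied in memory.

```python
import copy


class InventorySystem:
    """Toy stand-in for an ERP: stock keyed by (location, sku)."""
    def __init__(self, stock: dict):
        self.stock = dict(stock)


def reroute(system: InventorySystem, sku: str, qty: int, src: str, dst: str) -> None:
    if system.stock.get((src, sku), 0) < qty:
        raise ValueError("insufficient stock at source")
    system.stock[(src, sku)] -= qty
    system.stock[(dst, sku)] = system.stock.get((dst, sku), 0) + qty


def guarded_execute(system, action, *args, permitted: bool, audit: list) -> bool:
    """Action guardrail: check permission, rehearse on a copy, then commit and log."""
    if not permitted:
        audit.append({"action": action.__name__, "args": args, "result": "denied"})
        return False
    sandbox = copy.deepcopy(system)  # dry run against a disposable copy
    try:
        action(sandbox, *args)
    except ValueError as err:
        audit.append({"action": action.__name__, "args": args, "result": f"rejected: {err}"})
        return False
    action(system, *args)            # only now touch the real system
    audit.append({"action": action.__name__, "args": args, "result": "committed"})
    return True
```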

Finally, in the reflection and learning phase, guardrails collect continuous feedback on inventory performance metrics like stockouts, holding costs, and supplier reliability. This feedback loops into model retraining and policy tuning, improving agent accuracy and operational alignment over time.
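The feedback step can be sketched as computing the named KPIs for a period and mapping missed targets to tuning actions. The KPI definitions, targets, and action labels are hypothetical, and the holding cost assumes an annual holding rate prorated over the period.

```python
from dataclasses import dataclass


@dataclass
class PeriodStats:
    demand_days: int           # SKU-days with customer demand
    stockout_days: int         # SKU-days where demand could not be met
    avg_inventory_value: float
    holding_rate: float        # annual holding cost as a fraction of inventory value
    deliveries: int
    on_time_deliveries: int


# Hypothetical targets that trigger policy tuning or retraining when missed.
TARGETS = {"stockout_rate": 0.02, "on_time_rate": 0.95}


def reflect(s: PeriodStats, period_fraction_of_year: float) -> dict:
    """Reflection guardrail: compute KPIs and list which policies need review."""
    kpis = {
        "stockout_rate": s.stockout_days / s.demand_days if s.demand_days else 0.0,
        "holding_cost": s.avg_inventory_value * s.holding_rate * period_fraction_of_year,
        "on_time_rate": s.on_time_deliveries / s.deliveries if s.deliveries else 1.0,
    }
    actions = []
    if kpis["stockout_rate"] > TARGETS["stockout_rate"]:
        actions.append("retrain demand forecast / raise safety stock")
    if kpis["on_time_rate"] < TARGETS["on_time_rate"]:
        actions.append("review supplier reliability weighting")
    return {"kpis": kpis, "actions": actions}
```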

Figure: Retail Supply Chain Agentic AI Governance with Guardrails Use Case

The tangible business benefits of this guardrail-embedded agentic AI approach include reduced stockouts and overstocks, lower operational costs from optimized procurement and logistics, and compliance with corporate procurement policies and supplier contracts.

Structuring supply chain AI as a layered architecture with integrated guardrails transforms it from an opaque, risky automation tool into a transparent, accountable, and measurable system that can adapt autonomously while remaining under deliberate control.

This approach enables retailers to meet dynamic consumer demand swiftly and cost-effectively—while maintaining operational and regulatory integrity throughout the supply chain.

Platforms for Guarded Agentic AI

This ecosystem of open-source and commercial platforms represents the technical foundation of guarded Agentic AI architectures—each enabling modular workflows with AI safety, explainability, and audit in mind. Together, they provide developers and enterprises with scalable, governed AI environments where intelligence operates securely, predictably, and in full alignment with human and regulatory oversight.

| Platform | Type | Category | Key Capabilities |
| --- | --- | --- | --- |
| LangChain | Open Source / Managed | Orchestration Framework | Modular pipelines for LLM chaining, I/O validation, and tool integration |
| LangGraph | Open Source | Graph Workflow Framework | Visual, stateful orchestration and complex error recovery layers |
| AutoGen (Microsoft) | Open Source | Multi-Agent Framework | Agent collaboration, human-in-the-loop interaction, and built-in guardrails for reasoning control |
| CrewAI | Open Source / Hybrid | Task Collaboration Framework | Role-based multi-agent interactions with guardrails in decision routing |
| LlamaIndex | Open Source Toolkit | Knowledge Agent Framework | Integrates retrieval and safety screening for compliance-aware reasoning |
| OpenAI Agent Builder | Commercial | Enterprise Agent Platform | Combines input moderation, scoped tool control, and centralized governance APIs |
| Anthropic Claude Agents | Commercial | Constitutional AI System | Embeds ethical reasoning self-checks aligned with company policies |
| Google Vertex AI Agents | Commercial | Enterprise Workflow Platform | Includes agent orchestration, compliance tracking, and seamless system integration |
| Microsoft Azure AutoGen Cloud Suite | Commercial | Multi-Agent Enterprise Framework | Integrates federated policy enforcement, auditing, and agent interplay across systems |

Table: Top Agentic AI platforms with focus on Guardrails

Conclusion

While agentic AI holds extraordinary potential, recent failures across industries underscore the need for comprehensive governance frameworks, robust integration strategies, and explicit success criteria. Enterprises must treat agentic AI not as a turnkey solution but as a complex ecosystem requiring ongoing training, monitoring, and architectural guardrails. Addressing these challenges is pivotal to transitioning agentic AI from pilot failures to sustainable, high-impact deployments.

Agentic AI architecture fuses intelligence, autonomy, and governance into one cohesive design. Guardrails form its structural backbone, ensuring every stage of perception, reasoning, and action is secured and auditable. By embedding guardrails across all architectural layers, developers create AI systems that are not only autonomous but also governable, scalable, and safe for real-world enterprise integration. The result is an AI future that is innovative yet accountable: empowered, but always under vigilant control.

References:

- OpenAI Agent Builder: https://openai.com/agent-builder

- LangChain Open Source Framework: https://langchain.com

- Microsoft AutoGen: https://github.com/microsoft/autogen

- Anthropic Claude Agents: https://www.anthropic.com

- Google Vertex AI Agents: https://cloud.google.com/vertex-ai

- Haystack Agents by deepset: https://deepset.ai/haystack

The author is a seasoned technologist enthusiastic about pragmatic business solutions influencing thought leadership. He is a sought-after keynote speaker, evangelist, and tech blogger. He is also a digital transformation business development executive at a global technology consulting firm, an angel investor, and a serial entrepreneur with an impressive track record. This article expresses the views of the author, Bala Kalavala, Chief Architect & Technology Evangelist. The opinions expressed in this article are his own and do not necessarily reflect the views of his organization.