By Bala Kalavala, Chief Architect & Technology Evangelist

The evolution of enterprise AI: Understanding the maturity curve

When ChatGPT emerged in late 2022, it democratized access to sophisticated AI capabilities overnight. Suddenly, any organization could integrate conversational AI into its products or operations through simple API calls. However, this accessibility created a paradox: if everyone has access to the same foundation models, how does an organization differentiate itself?

As organizations race to harness the transformative power of Large Language Models, a critical gap has emerged between experimental implementations and production-ready AI systems. While prompt engineering offers a quick entry point, enterprises seeking competitive advantage must architect sophisticated fine-tuning pipelines that deliver consistent, domain-specific intelligence at scale.

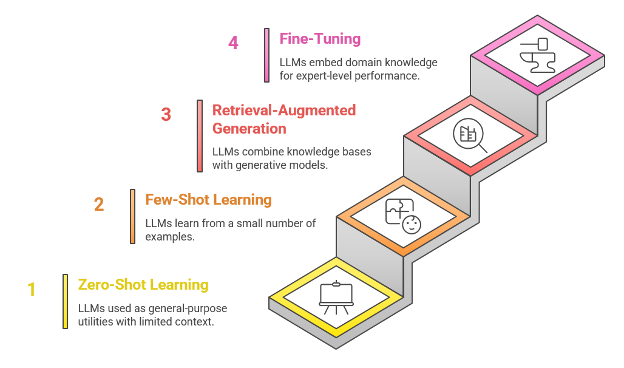

The landscape of LLM deployment presents three distinct approaches, each with architectural implications. The answer to the differentiation question lies in understanding the maturity curve of AI implementation. Organizations typically progress through three phases, each with different characteristics, benefits, and limitations. Let’s look at each phase in depth.

Diagram: LLM AI Maturity

Phase One: Prompt Engineering and Zero-Shot Learning

In the initial phase, organizations interact with AI models via carefully crafted prompts, without customizing the underlying model. This approach, known as zero-shot or few-shot learning, treats AI models as general-purpose utilities. An analyst might prompt a model with “Summarize this financial report and identify key risks,” and the model responds based solely on its pre-trained knowledge and the instructions provided in that moment.

The business benefit of this approach is speed to market. Organizations can prototype AI applications in days or weeks rather than months. A customer service team can start using AI to draft responses immediately. A marketing team can generate content variations without waiting for model training. This rapid experimentation allows organizations to validate use cases and build organizational buy-in for larger AI investments.

However, this approach has inherent limitations that become apparent at scale. First, consistency suffers because the model’s behavior depends entirely on how questions are phrased. Two employees asking similar questions in different ways may receive dramatically different answers. Second, the quality ceiling is constrained by the model’s general knowledge. A healthcare organization cannot expect a general-purpose model to consistently apply its specific clinical protocols or regulatory requirements. Third, costs accumulate quickly because every interaction requires sending extensive context in the prompt, which consumes tokens and increases expenses.

Phase Two: Retrieval-Augmented Generation (RAG)

As organizations mature beyond basic prompting, many adopt Retrieval-Augmented Generation, a hybrid approach that combines AI models with organizational knowledge bases. In this architecture, when a user asks a question, the system first searches through company documents, databases, and other repositories to find relevant information. This retrieved content is then provided to the AI model along with the user’s question, allowing the model to generate responses grounded in the organization’s specific knowledge.

Consider a pharmaceutical company implementing RAG for their research teams. When a scientist asks about drug interactions, the system retrieves relevant sections from their proprietary research database, clinical trial results, and regulatory filings. The AI model then synthesizes this information into a coherent response, effectively acting as an intelligent interface to the organization’s accumulated knowledge.
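
The retrieve-then-generate flow can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production design: simple word-overlap scoring stands in for a real embedding model and vector store, and the names `retrieve`, `build_prompt`, and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase bag-of-words; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, documents, top_k=2):
    """Return the top_k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(question, passages):
    """Combine retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical knowledge base entries, for illustration only.
DOCS = [
    "Drug A interacts with Drug B and may raise blood pressure.",
    "The cafeteria menu changes every Monday.",
    "Clinical trial 12 reported no interaction between Drug A and Drug C.",
]

question = "Does Drug A interact with Drug B?"
prompt = build_prompt(question, retrieve(question, DOCS))
```

The resulting `prompt` would then be sent to whichever generative model the organization uses; that call is omitted here because it depends on the chosen provider.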

The business value of RAG is compelling. Organizations can leverage AI while maintaining control over the knowledge base, ensuring responses are based on current, accurate information. When regulations change or new research emerges, updating the knowledge base immediately affects all AI responses without retraining any models. This dynamic capability makes RAG particularly valuable for scenarios where information changes frequently or where citations to source documents are required for compliance or verification.

However, RAG introduces architectural complexity. Organizations must build and maintain sophisticated search infrastructure, implement effective embedding strategies to represent documents numerically, and orchestrate the integration between retrieval systems and generative models. Additionally, RAG systems face challenges with reasoning across multiple documents or applying consistent judgment calls that require deep domain expertise rather than just information retrieval.

Phase Three: Fine-Tuning (For Strategic Differentiation)

Fine-tuning represents the architectural apex of AI implementation. Rather than relying on prompts or external knowledge bases, fine-tuning modifies the AI model itself by continuing its training on domain-specific data. This embeds organizational knowledge, reasoning patterns, and domain expertise directly into the model’s parameters.

Think of it this way: a general-purpose AI model is like a talented generalist who reads widely but lacks deep expertise. Fine-tuning transforms this generalist into a domain expert who has internalized years of specialized knowledge and consistently applies sophisticated judgment without needing constant instruction.

The business impact of fine-tuning manifests across multiple dimensions. First, operational efficiency improves dramatically because fine-tuned models require minimal prompting to perform specialized tasks correctly. A legal tech company that fine-tunes a model on contract analysis can simply provide a contract and receive structured analysis without elaborate instructions in every query. This reduces both latency and per-interaction costs.

Second, quality consistency reaches levels impossible with prompt engineering. A fine-tuned model learns not just what to do, but how your organization does it. It picks up on subtle patterns in decision-making, communication style, and domain-specific reasoning that cannot be easily captured in prompts. A financial services firm might fine-tune a model on years of analyst reports, enabling it to consistently apply its specific investment framework and writing conventions.

Third, and perhaps most strategically, fine-tuning creates defensible competitive moats. While competitors can access the same foundation models and even reverse-engineer prompts, they cannot replicate the accumulated domain expertise encoded in a well-executed fine-tuning implementation. The model becomes a proprietary asset that continues to improve as the organization generates more specialized data.

Strategic Framework: Architecting the Fine-Tuning Journey

Organizations embarking on fine-tuning initiatives face numerous architectural decisions. Success requires understanding not just the technical components, but how they interconnect to create a sustainable system that delivers business value.

Choosing a foundation model is not merely a technical decision but a strategic one with long-term implications. The landscape includes both open-source models, such as Meta’s Llama and Mistral AI’s models, and proprietary options, such as OpenAI’s GPT-4 or Anthropic’s Claude, each with distinct characteristics.

Open-source models offer deployment flexibility and cost predictability. An organization can download these models, run them on their own infrastructure, and avoid per-token pricing that scales with usage. This independence becomes particularly valuable for high-volume applications or scenarios requiring strict data privacy. A healthcare provider processing millions of patient interactions might choose an open-source model to maintain complete data control and predictable infrastructure costs.

Proprietary models typically offer superior baseline performance and require less technical overhead. Organizations can fine-tune these models through API-based services without managing training infrastructure. A mid-sized company entering AI might prefer this approach to achieve results quickly without building specialized AI engineering teams.

The decision involves evaluating several factors. Model scale matters because larger models generally offer better performance but demand more computational resources. An organization must balance the quality improvements of a 70-billion-parameter model against the infrastructure costs and latency implications. Domain alignment is equally important; some models have been pre-trained on industry-specific data. A coding assistance application benefits from models pre-trained extensively on code repositories.

Licensing considerations extend beyond initial costs. Some licenses restrict commercial use or require sharing modifications. Organizations must ensure their chosen model’s license aligns with their business model and IP strategy. A company building a commercial product cannot use models with restrictive licenses that would limit their go-to-market options.

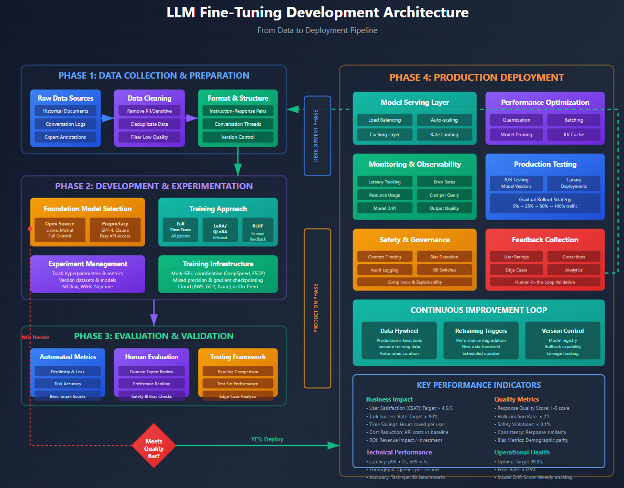

If model selection sets the stage, data architecture determines ultimate success. Fine-tuning quality depends entirely on the data used to train the model, making data strategy the most critical architectural decision.

Effective data architecture begins with curation pipelines that systematically collect, filter, and validate domain-specific datasets. Consider a customer service organization fine-tuning a model to handle support inquiries. Their data pipeline might aggregate historical chat transcripts, email exchanges, and resolution notes. However, raw data is never sufficient. The pipeline must filter out low-quality interactions, remove personally identifiable information to protect privacy, and identify exemplary responses that represent the desired behavior.
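
A curation step of this kind might look like the sketch below. It is illustrative only: the record fields (`query`, `reply`, `resolved`), the word-count threshold, and the two regular expressions are assumptions, and pattern-based redaction catches only simple cases, so real pipelines usually add dedicated PII detection.

```python
import re

# Simplified patterns; they will miss many real-world PII formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(text):
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def curate(transcripts, min_words=5):
    """Keep resolved interactions with substantive replies, with PII redacted."""
    curated = []
    for t in transcripts:
        if not t["resolved"]:
            continue  # unresolved cases are not examples of desired behavior
        if len(t["reply"].split()) < min_words:
            continue  # very short replies are treated as low quality
        curated.append(
            {"query": redact_pii(t["query"]), "reply": redact_pii(t["reply"])}
        )
    return curated
```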

Format standardization represents another critical component. Fine-tuning typically requires data in specific formats depending on the use case. Instruction-response pairs work well for question-answering scenarios, where each example shows a user query and the ideal response. Conversation threads better represent multi-turn interactions where context builds across exchanges. Completion formats suit scenarios in which the model continues or elaborates on the provided text. The architecture must consistently transform diverse raw data into these standardized formats.
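
The three formats can be made concrete with a short sketch that serializes examples as JSON Lines. The field names used here (`instruction`, `response`, `messages`, `prompt`, `completion`) are assumptions for illustration; the exact schema depends on the training framework or fine-tuning service being used.

```python
import json


def to_instruction_pair(query, response):
    """Single-turn question answering: one query, one ideal response."""
    return {"instruction": query, "response": response}


def to_conversation(turns):
    """Multi-turn thread; turns is a list of (role, text) tuples."""
    return {"messages": [{"role": role, "content": text} for role, text in turns]}


def to_completion(prefix, continuation):
    """Completion format: the model continues the provided text."""
    return {"prompt": prefix, "completion": continuation}


def to_jsonl(records):
    """Serialize records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


records = [
    to_instruction_pair("How do I reset my password?", "Open Settings, then Security."),
    to_conversation([("user", "My order is late."), ("assistant", "Let me check.")]),
    to_completion("Executive summary:", " Revenue grew in the last quarter."),
]
jsonl = to_jsonl(records)
```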

Diagram: LLM Fine-Tuning Development Architecture

Quality gates ensure that only high-quality examples influence the model. This typically involves multiple layers of validation. Automated quality scoring might evaluate factors like response completeness, factual accuracy against known sources, and appropriate tone. Human-in-the-loop validation provides the final quality assessment, with domain experts reviewing samples to ensure they represent desired behavior. Some organizations implement collaborative annotation platforms where multiple reviewers assess each example, using inter-rater reliability metrics to identify ambiguous cases requiring clarification.
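
One widely used inter-rater reliability metric for two reviewers is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a minimal implementation; the choice of kappa and the example labels are assumptions, since the text does not name a specific metric.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two reviewers labeling the same examples."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and the same length")
    n = len(labels_a)
    # Observed agreement: fraction of examples where both reviewers agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each reviewer's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both reviewers used a single identical label
    return (observed - expected) / (1 - expected)


reviewer_1 = ["good", "good", "bad", "good", "bad", "bad"]
reviewer_2 = ["good", "bad", "bad", "good", "bad", "good"]
kappa = cohens_kappa(reviewer_1, reviewer_2)
```

A low kappa on a batch suggests the labeling guidelines are ambiguous and the examples should be sent back for clarification before they are used for training.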

A financial services firm implemented this architecture for fine-tuning a model to generate investment research summaries. Their pipeline aggregated analyst reports, but automated filters first removed any reports with compliance flags or factual errors. A quality scoring system then evaluated each report on criteria like analytical depth, clarity, and adherence to firm standards. Finally, senior analysts reviewed high-scoring reports to identify exemplary analysis patterns. This multi-stage process ensured the fine-tuned model learned from the firm’s best work rather than merely average performance.

Development Focus

The development phase emphasizes experimentation, iteration, and quality validation. Teams focus on data quality, model selection, and rigorous evaluation before any production deployment.

- Data curation takes 40-50% of total effort
- Multiple training iterations expected (5-10 runs)
- Evaluation must meet the quality bar before deployment

Production Focus

Production deployment requires robust infrastructure for serving, monitoring, safety, and continuous improvement. The goal is reliable, scalable operation with measurable business impact.

- Gradual rollout reduces risk (canary → full)
- Real-time monitoring enables rapid response
- Feedback loops drive continuous improvement

The Continuous Improvement Cycle

The architecture’s true power emerges in the feedback loop connecting production back to development. Production interactions generate new training data, performance monitoring identifies opportunities for improvement, and the system evolves continuously. Organizations that master this cycle build AI capabilities that compound in value over time, creating sustainable competitive advantages.

Table: LLM Fine-Tuning DevOps Lifecycle

Version control and lineage tracking complete the data architecture. Just as software teams version their code, AI teams must version their datasets. Each fine-tuning run should be traceable to specific dataset versions, enabling teams to understand how changes to the data affect model performance. When a fine-tuned model exhibits problematic behavior, teams need the ability to audit which training data may have contributed to it.
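
A lightweight way to make each run traceable is to derive a version identifier from the dataset's content and store it alongside the run. The sketch below assumes records are JSON-serializable dictionaries; the function names and the lineage fields are hypothetical, and dedicated data versioning tools offer far more than this.

```python
import hashlib
import json


def dataset_fingerprint(records):
    """Content hash that ignores record order and dictionary key order."""
    h = hashlib.sha256()
    for line in sorted(json.dumps(r, sort_keys=True) for r in records):
        h.update(line.encode("utf-8"))
        h.update(b"\n")
    return h.hexdigest()[:12]


def lineage_record(run_id, base_model, records):
    """Metadata to store with a fine-tuning run for later audits."""
    return {
        "run_id": run_id,
        "base_model": base_model,
        "dataset_version": dataset_fingerprint(records),
        "num_examples": len(records),
    }
```

Because the fingerprint changes whenever any example is added, removed, or edited, two runs with the same `dataset_version` are known to have trained on identical data.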

Training infrastructure represents the operational heart of a fine-tuning architecture. While the specifics are technical, understanding the architectural approaches and their business implications is essential for strategic decision-making.

Modern fine-tuning employs sophisticated distributed training techniques to handle the computational demands of working with large models. These systems coordinate training across multiple GPUs or specialized AI accelerators, efficiently distributing the model and data. Organizations must decide whether to build this infrastructure in-house or leverage cloud-based training services. The choice involves trade-offs between control, cost, and time-to-value.

Parameter-Efficient Fine-Tuning (PEFT) methods have revolutionized the economics of model customization. Traditional fine-tuning updates all model parameters, requiring substantial computational resources and memory. PEFT techniques like Low-Rank Adaptation (LoRA) instead add small adapter layers to the model, updating only these additions while keeping the base model frozen. This approach dramatically reduces training costs and time while often achieving 90-95% of full fine-tuning performance.
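
The savings follow from simple arithmetic. In the LoRA formulation, a frozen weight matrix of shape d_out × d_in receives a trainable update expressed as the product of two small matrices of shapes d_out × r and r × d_in, where r is the adapter rank. The sketch below counts trainable parameters for one such matrix; the dimensions and rank are illustrative assumptions, not figures from any specific model.

```python
def full_update_params(d_out, d_in):
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable parameters for a rank-r adapter: B is d_out x r, A is r x d_in."""
    return rank * (d_out + d_in)


# Illustrative sizes only.
d_out, d_in, rank = 4096, 4096, 8
full = full_update_params(d_out, d_in)
adapter = lora_params(d_out, d_in, rank)
fraction = adapter / full  # share of parameters that are actually trained
```

With these illustrative sizes, the adapter trains well under one percent of the parameters in that matrix, which is where the memory and time savings come from.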

A practical example illustrates the impact: a legal technology company needed to fine-tune a 13-billion parameter model for contract analysis. Full fine-tuning would have required expensive multi-GPU infrastructure and days of training time. By implementing LoRA, they reduced training time to hours on consumer-grade GPUs while achieving comparable accuracy. This efficiency enabled rapid iteration, allowing them to experiment with different training approaches and quickly incorporate new contract types.

Experiment tracking and MLOps integration ensure training infrastructure supports organizational learning rather than one-off experiments. Modern implementations integrate with platforms like Weights & Biases or MLflow, automatically logging training parameters, performance metrics, and resource utilization. This creates an organizational knowledge base of what approaches work, enabling teams to build on previous successes rather than starting from scratch with each new project.

Cost optimization strategies become critical at scale. Cloud providers offer spot instances at significant discounts, but these can be interrupted. Sophisticated architectures implement checkpointing systems that regularly save training progress, allowing training to resume from these checkpoints if interrupted. Gradient checkpointing trades computational time for memory efficiency, enabling training of larger models on limited hardware. Mixed-precision training uses lower-precision arithmetic where possible, speeding up training, typically with little or no loss in accuracy.
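
The save-and-resume idea behind checkpointing for interruptible instances can be sketched with a toy training loop. Everything here is a stand-in: the "training step" is a placeholder calculation, the state is a small dictionary saved as JSON, and the `fail_at` parameter exists only to simulate an interruption. Real frameworks save model and optimizer state instead.

```python
import json
import os
import tempfile


def train(total_steps, ckpt_path, every=10, fail_at=None):
    """Toy training loop that resumes from the last checkpoint if one exists."""
    state = {"step": 0, "loss": 1.0}
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)
    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError("simulated spot interruption")
        state["step"] += 1
        state["loss"] *= 0.99  # placeholder for a real optimization step
        if state["step"] % every == 0:
            # Write to a temp file, then rename, so a crash never leaves
            # a half-written checkpoint behind.
            tmp = ckpt_path + ".tmp"
            with open(tmp, "w") as f:
                json.dump(state, f)
            os.replace(tmp, ckpt_path)
    return state


with tempfile.TemporaryDirectory() as workdir:
    path = os.path.join(workdir, "ckpt.json")
    try:
        train(50, path, fail_at=25)  # interrupted between checkpoints
    except RuntimeError:
        pass
    with open(path) as f:
        resumed_from = json.load(f)["step"]
    final = train(50, path)  # picks up from the saved checkpoint
```

Only the work done since the last checkpoint is lost, so the checkpoint interval is a trade-off between storage overhead and recomputation after an interruption.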

A sophisticated evaluation architecture separates successful fine-tuning initiatives from those that disappoint. Models can appear impressive in demonstrations yet fail in production, making rigorous evaluation essential before deployment.

Effective evaluation employs multiple assessment dimensions. Automated metrics provide objective, scalable measures of model performance. Perplexity measures how surprised the model is by test data, with lower scores indicating better language modeling. Task-specific accuracy evaluates performance on held-out examples relevant to the use case. For a customer service model, this might measure how often the model’s suggested responses match expert-approved responses.
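
Perplexity is the exponential of the average negative log-probability the model assigns to each token in the test data. The sketch below assumes the per-token natural-log probabilities have already been obtained from the model; how to extract them depends on the framework.

```python
import math


def perplexity(token_log_probs):
    """exp of the mean negative natural-log probability per token."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


# A model that assigns probability 0.25 to every token is, on average,
# as uncertain as a uniform choice among four options.
uniform_four = perplexity([math.log(0.25)] * 8)
```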

However, automated metrics alone prove insufficient because they cannot capture qualities like helpfulness, safety, or alignment with organizational values. Human evaluation provides these critical assessments. Organizations typically implement structured evaluation protocols where domain experts review model outputs across diverse test cases, rating them on multiple dimensions. A healthcare organization might have clinicians evaluate a model’s responses for medical accuracy, appropriate treatment recommendations, and adherence to clinical guidelines.

A/B testing frameworks enable systematic comparison between models. Rather than subjectively deciding if a new model is better, organizations can deploy competing models to subsets of users and measure real-world performance. A customer service application might route 20% of inquiries to the new fine-tuned model while the existing system handles the remaining 80%. Metrics like customer satisfaction scores, resolution times, and escalation rates provide objective evidence of relative performance.
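
A common way to implement such a split is deterministic hashing of a user identifier, so each user consistently sees the same variant across sessions. The sketch below is one possible approach; the salt value, variant names, and function name are hypothetical.

```python
import hashlib


def assign_variant(user_id, treatment_share=0.20, salt="ft-model-test"):
    """Deterministically map a user to 'fine_tuned' or 'existing'."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    # Interpret the first 32 bits of the hash as a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "fine_tuned" if bucket < treatment_share else "existing"
```

Changing the salt reshuffles the assignment for a new experiment, while keeping it fixed guarantees a stable experience for the duration of the test.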

One e-commerce company implemented a comprehensive evaluation architecture for their product recommendation model. Automated metrics measured recommendation accuracy against historical purchase data. Human evaluators assessed the diversity and appropriateness of recommendations. A/B testing in production measured actual conversion rates. This multi-faceted approach revealed that while the model achieved high accuracy on automated metrics, human evaluators noted that it over-recommended popular items at the expense of catalog diversity. The A/B test confirmed this hurt overall revenue because customers weren’t discovering new products. This insight led to retraining with a modified objective function that balanced accuracy with diversity.

Deployment architecture transforms experimental models into reliable production systems. This involves far more than simply hosting a model behind an API; it requires building infrastructure for monitoring, scaling, and continuous improvement.

Load balancing and auto-scaling ensure the system efficiently handles varying demand. Traffic to AI applications often follows unpredictable patterns, with spikes during business hours or in response to events. Modern deployment architectures automatically scale compute resources up during high-demand periods and scale down during quiet times, optimizing costs without sacrificing availability. A news organization’s content generation system might scale up dramatically when breaking news occurs, then scale back during normal operations.

Latency optimization becomes critical for user-facing applications. Techniques like model quantization reduce model size by using lower-precision numbers, speeding up inference with minimal accuracy loss. Batching strategies group multiple requests together for processing, improving throughput for high-volume scenarios. Caching stores responses to frequently asked queries, eliminating the need to rerun inference for repeated questions.
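
Of these techniques, caching is the simplest to illustrate. The sketch below wraps an inference function with an exact-match cache keyed on a normalized query. The class name and the `fake_infer` stand-in are hypothetical, and this kind of caching only suits answers that are deterministic and not personalized.

```python
class CachedModel:
    """Wraps an inference function with a normalized exact-match cache."""

    def __init__(self, infer):
        self._infer = infer
        self._cache = {}
        self.misses = 0  # number of times the model was actually invoked

    def answer(self, query):
        # Normalize case and whitespace so trivial variants share an entry.
        key = " ".join(query.lower().split())
        if key not in self._cache:
            self.misses += 1
            self._cache[key] = self._infer(query)
        return self._cache[key]


def fake_infer(query):
    """Stand-in for a real (slow, costly) model call."""
    return f"answer:{query}"


model = CachedModel(fake_infer)
first = model.answer("What is your refund policy?")
second = model.answer("what is your  refund policy?")  # served from cache
```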

Monitoring and observability infrastructure tracks model performance in production. Unlike traditional software, where failures are often obvious, AI systems can degrade subtly. Monitoring tracks metrics like inference latency, error rates, and resource utilization, alerting teams to technical issues. More sophisticated monitoring assesses output quality over time, detecting when the model starts producing lower-quality responses that might indicate model drift or adversarial inputs.

Drift detection specifically monitors whether the model’s performance degrades as real-world data diverges from training data. A fraud detection model trained on 2023 data might become less effective in 2024 as fraudsters adapt their tactics. Drift detection systems identify these performance declines and trigger retraining with updated data.
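
One simple form of drift detection compares accuracy over a rolling window of recent, labeled predictions against the accuracy measured at deployment time. The sketch below assumes ground-truth labels eventually become available for production predictions; the class name, window size, and tolerance are illustrative choices.

```python
from collections import deque


class DriftMonitor:
    """Flags drift when rolling accuracy falls below baseline minus tolerance."""

    def __init__(self, baseline_accuracy, window=100, tolerance=0.05):
        self.threshold = baseline_accuracy - tolerance
        self.outcomes = deque(maxlen=window)

    def record(self, correct):
        """Record one labeled outcome; return True if retraining should trigger."""
        self.outcomes.append(1 if correct else 0)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough data for a stable estimate yet
        return sum(self.outcomes) / len(self.outcomes) < self.threshold
```

When labels arrive late or not at all, teams often monitor shifts in the input distribution instead, which requires a different statistic than the one shown here.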

Feedback loops close the circle from production back to improvement. User feedback, whether explicit ratings or implicit signals like user corrections, flows back into data pipelines. High-quality production interactions serve as training data for future fine-tuning iterations, creating a virtuous cycle in which the model continuously improves based on real-world use.

Architectural Patterns: Proven Approaches for Different Scenarios

Organizations can choose from several established architectural patterns, each suited to different business contexts and maturity levels.

The incremental refinement pattern starts with a strong foundation model and progressively specializes it through multiple fine-tuning stages. The first stage fine-tunes on broad domain data to establish general domain competence. A legal AI might first train on thousands of diverse legal documents to develop general legal reasoning. The second stage introduces task-specific refinement with curated examples of the target task. The legal AI might then be trained specifically on contract-review examples. A final stage incorporates human feedback through Reinforcement Learning from Human Feedback (RLHF) to align behavior with human preferences and organizational values.

This pattern offers several advantages. It manages complexity by breaking the fine-tuning process into manageable stages, each with clear objectives. It enables organizational learning as teams develop expertise with simpler stages before tackling more complex ones. It also proves cost-effective because earlier, broader stages can use larger, more diverse datasets that may be easier to acquire, while later stages require only targeted, high-quality examples.

A healthcare technology company exemplified this pattern when building a clinical decision support system. They began by fine-tuning on medical literature and general clinical notes to establish medical knowledge. Next, they fine-tuned on specific diagnostic scenarios relevant to their target conditions. Finally, they incorporated feedback from physicians who reviewed the model’s recommendations, teaching it to match clinical reasoning patterns preferred by their user base. This staged approach allowed them to achieve FDA-quality performance while managing the complexity of medical AI development.

Rather than creating a single model for all scenarios, the multi-model ensemble pattern deploys multiple specialized models, each optimized for specific contexts or domains. A routing layer intelligently directs queries to the most appropriate specialized model. If no specialized model fits, the system falls back to a general-purpose model.

This architecture excels when an organization operates across diverse domains that require genuinely different expertise. A multinational corporation might maintain separate fine-tuned models for legal, financial, technical, and operational queries, each trained by respective domain experts. The routing system analyzes incoming queries and directs them to the appropriate specialist model.

The business benefits are substantial. Each specialized model can be smaller and more focused than a single generalist model attempting to cover all domains, reducing computational costs. Specialized teams can own and improve their respective models independently, enabling parallel development. The system degrades gracefully because if a specialized model is unavailable, the routing layer can fall back to alternatives.

However, this pattern introduces operational complexity. The routing layer itself requires development and maintenance, with logic to classify queries and route them appropriately. Organizations must also manage multiple model versions, training pipelines, and deployment systems. This overhead only makes sense at a sufficient scale and domain diversity.
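
The routing layer with a general-purpose fallback can be sketched as follows. Keyword matching is used here only to keep the example short; it is an assumption, and a production router would more likely use a trained classifier. The specialist names and keyword sets are hypothetical.

```python
import re

# Hypothetical specialists and the keywords that signal their domain.
SPECIALISTS = {
    "legal": {"contract", "clause", "liability"},
    "financial": {"revenue", "invoice", "budget"},
}


def route(query, available=None):
    """Pick the best-matching available specialist, else fall back to 'general'."""
    if available is None:
        available = set(SPECIALISTS)
    words = set(re.findall(r"[a-z]+", query.lower()))
    scores = {
        name: len(words & keywords)
        for name, keywords in SPECIALISTS.items()
        if name in available
    }
    best = max(scores, key=scores.get, default=None)
    if best is None or scores[best] == 0:
        return "general"  # no specialist matches or none is available
    return best
```

Passing a reduced `available` set models a specialist outage: queries that would have gone to the missing model fall back to the general-purpose one.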

The hybrid RAG and fine-tuning pattern combines retrieval-augmented generation with fine-tuning to leverage the strengths of both approaches. The fine-tuned model learns consistent reasoning patterns, domain-specific language, and judgment frameworks. Meanwhile, the RAG component provides access to current documents, recent updates, and specific factual information.

Consider an investment research application. The fine-tuned model learns the firm’s analytical framework, writing style, and reasoning approach. When analysts query the system, RAG retrieves relevant recent financial reports, news articles, and market data. The fine-tuned model then synthesizes this information using the firm’s established methodology, producing an analysis that sounds like it came from the firm’s best analysts while incorporating the latest available information.

This pattern addresses limitations of either approach alone. Fine-tuning alone struggles with current events and frequently updated information. RAG alone may lead to inconsistent reasoning or to failure to apply sophisticated domain expertise. Together, they create models that “think” like domain experts while accessing current information.

The architecture requires careful design of component interactions. The system must determine which information to retrieve, how much to include, and how to structure prompts that combine retrieved information with fine-tuned model capabilities. Despite this complexity, the pattern often delivers the best overall performance for knowledge-intensive applications requiring both expertise and currency.
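
The question of how much retrieved material to include can be handled with a simple budget. The sketch below greedily packs already-ranked passages until a size limit is reached. It counts words as a rough proxy for tokens, which is an assumption; a real system would use the model's own tokenizer.

```python
def pack_context(ranked_passages, budget_words):
    """Greedily select passages, in rank order, that fit within the budget."""
    selected, used = [], 0
    for passage in ranked_passages:
        size = len(passage.split())
        if used + size > budget_words:
            continue  # skip passages that would exceed the budget
        selected.append(passage)
        used += size
    return selected
```

Because the fine-tuned model already carries the domain's reasoning style, the budget can usually be spent on facts rather than on instructions.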

Experience-Driven Best Practices

Organizations that have successfully deployed fine-tuning at scale consistently report several critical lessons that can guide others on this journey.

Many organizations begin fine-tuning with vague goals like “improve model performance.” This leads to wasted effort because teams don’t know what success looks like. Successful implementations define precise, measurable objectives and clear success metrics before training begins. Is the goal to reduce customer service response time by 30%? To increase user satisfaction scores above 4.5 out of 5? To achieve 95% accuracy on specific compliance checks? These concrete metrics guide data collection, training approaches, and evaluation design.

A consistent finding across industries is that fine-tuning on 1,000 high-quality examples outperforms training on 10,000 mediocre examples, which justifies investing disproportionately in data quality over quantity. Organizations often accumulate large datasets, but volume alone doesn’t drive success. One financial services firm initially trained on 50,000 analyst reports but achieved better results after curating just 2,000 exemplary reports representing their best analytical work. The lesson: prioritize curation over collection.

First fine-tuning attempts rarely achieve production quality. Successful organizations plan for iterative refinement from the start. They budget time and resources for multiple training runs as they refine data, adjust training parameters, and incorporate evaluation feedback. Treating fine-tuning as a one-shot effort typically leads to disappointment.

Even well-tested models can behave unexpectedly in production. Organizations should deploy fine-tuned models gradually, starting with limited user populations or non-critical paths. Implementing kill switches allows immediate rollback if issues arise. One company discovered its customer service model occasionally generated overly casual responses, inappropriate for certain customer segments. Their gradual rollout meant only 5% of customers saw these responses, and kill switches let them revert immediately while they corrected the issue.

Fine-tuning success requires cross-functional collaboration among data scientists, domain experts, and engineering teams. Data scientists understand model training and evaluation. Domain experts identify what good performance looks like and provide quality training data. Engineers build the infrastructure for training and deployment. Organizations that silo these functions struggle because each group lacks context on the others’ constraints and requirements.

AI systems require extensive documentation for both regulatory compliance and operational needs. Organizations should document the provenance of training data, model architectures, evaluation results, and deployment configurations. When issues arise in production, this documentation helps teams understand what went wrong and how to fix it. For regulated industries, documentation proves compliance with requirements around model development and validation.

Organizations that successfully implement fine-tuning architectures report transformative business impact across multiple dimensions.

Operational efficiency improvements manifest most immediately. A global consulting firm reduced the time its consultants spent on routine document analysis from hours to minutes by fine-tuning models on its methodology. This allowed consultants to focus on high-value strategic work rather than repetitive analysis tasks. They calculated that this freed up approximately 20% of consultant time, translating directly to capacity for additional client projects without hiring.

Cost reduction often exceeds expectations. While fine-tuning requires upfront investment, the ongoing operational costs typically fall dramatically compared to prompt engineering approaches. One company reduced its AI API costs by 60% after fine-tuning because it no longer needed to send extensive context with every query. The fine-tuned model already understood their domain, requiring minimal prompting.
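
The mechanism behind such savings is that token-based pricing charges for every token of context sent with each request. The sketch below shows the arithmetic; all volumes, token counts, and prices are hypothetical, and it assumes the same per-token price before and after fine-tuning, which may not hold for a given provider.

```python
def monthly_cost(requests, input_tokens, output_tokens,
                 input_price_per_1k, output_price_per_1k):
    """Monthly API spend under simple per-token pricing."""
    per_request = (
        input_tokens / 1000 * input_price_per_1k
        + output_tokens / 1000 * output_price_per_1k
    )
    return requests * per_request


# Hypothetical figures for illustration only.
prompt_based = monthly_cost(100_000, 3000, 300, 0.001, 0.002)  # long context
fine_tuned = monthly_cost(100_000, 500, 300, 0.001, 0.002)     # short prompt
savings = 1 - fine_tuned / prompt_based
```

Plugging in an organization's own request volume and pricing gives a quick estimate of whether the upfront fine-tuning investment is likely to pay back.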

Quality consistency represents perhaps the most significant but hardest-to-quantify benefit. A healthcare organization found that their fine-tuned model provided more consistent clinical guidance than their previous prompt-based system, which varied in quality depending on how staff phrased their questions. This consistency improved patient outcomes and reduced liability concerns around inconsistent guidance.

Competitive differentiation emerges as organizations deploy fine-tuned models that competitors cannot easily replicate. A legal technology startup built market-leading contract analysis capabilities by fine-tuning on proprietary annotated datasets representing decades of legal expertise. Competitors with the same foundation models couldn’t match their performance without similar specialized data and expertise.

Conclusion: Building AI that Compounds in Value

The journey from experimentation to production AI capabilities requires architectural sophistication that goes far beyond simple prompt engineering. Organizations that invest in a proper fine-tuning architecture build AI systems that improve continuously, creating compounding value over time.

The key insight is that fine-tuning is not a destination but a capability. Organizations don’t just fine-tune a model once; they build systems to continuously refine and improve models as new data and requirements emerge. This transforms AI from a tool into a strategic asset that grows more valuable with use.

Success requires commitment across technical, organizational, and strategic dimensions. Technical teams must build sophisticated data pipelines, training infrastructure, and evaluation systems. Organizational structures must enable collaboration between AI specialists and domain experts. Strategic leaders must recognize that competitive advantage in AI comes not from access to foundation models but from the architecture and expertise to specialize them effectively.

As AI capabilities continue advancing, the gap between organizations with sophisticated fine-tuning architectures and those relying on generic models will only widen. The time to build these capabilities is now, while the competitive landscape is still forming. Organizations that master fine-tuning architecture will shape the next decade of AI-driven business transformation.

References:

- How do you fine-tune in theory: https://ai.stackexchange.com/questions/46389/how-do-you-fine-tune-a-llm-in-theory

- Hugging Face instruction tuning LLM: https://discuss.huggingface.co/t/instruction-tuning-llm/67597

- Fine-tuning LLMs and AI Models: https://cloud.google.com/use-cases/fine-tuning-ai-models

- OpenAI – Model Optimization: https://platform.openai.com/docs/guides/model-optimization

The author is a seasoned technologist, enthusiastic about pragmatic business solutions, influencing thought leadership. He is a sought-after keynote speaker, evangelist, and tech blogger. He is also a digital transformation business development executive at a global technology consulting firm, an Angel investor, and a serial entrepreneur with an impressive track record.

This article expresses the views of the author, Bala Kalavala, a Chief Architect & Technology Evangelist and a digital transformation business development executive at a global technology consulting firm. The opinions expressed in this article are his own and do not necessarily reflect the views of his organization.