By Kumar Chinnakali, of Capgemini

In the realm of artificial intelligence (AI), Large Language Models (LLMs) have emerged as a transformative force, captivating researchers and businesses alike. Their ability to process and generate human-like text unlocks a wide range of possibilities, from crafting compelling content to streamlining complex workflows. However, turning a promising LLM prototype into a robust production system presents significant challenges.

This article explores open-source LLMOps and how it can help you navigate the path from prototype to production with agility and efficiency. LLMs, powered by deep learning, have the potential to transform numerous industries, from customer service to content creation.

Large language models, such as GPT-3, have demonstrated remarkable capabilities in understanding and generating human-like text. This opens up exciting possibilities for applications like virtual assistants, automated content generation, and even language translation.

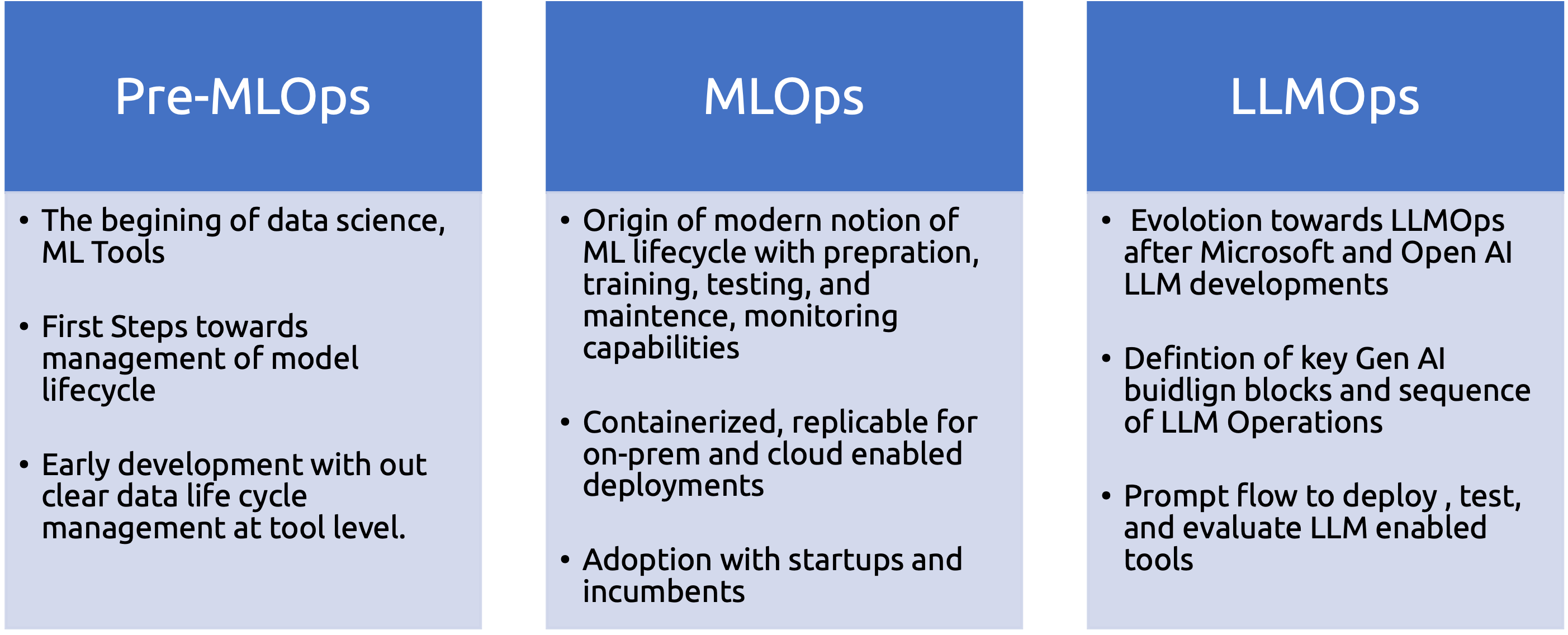

LLMOps Evolution:

LLMOps Maturity Levels: LLMOps maturity levels gauge an organization’s proficiency in managing LLMs in production. These levels typically categorize how well an organization leverages open-source tools, automates processes, and maintains robust LLM pipelines.

In simpler terms, they are a benchmark for how effectively you have implemented open-source LLMOps practices for your LLM systems.
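To make the idea of cumulative maturity levels concrete, here is a minimal Python sketch. The capability names, their ordering, and the rule that each level requires every earlier one are hypothetical assumptions chosen for illustration; they are not taken from any published LLMOps maturity model.

```python
# Hypothetical, ordered capabilities: reaching level N requires the first N of them.
CAPABILITIES = [
    "version_control",       # code, prompts, and configs tracked in Git
    "automated_tests",       # evaluation suite runs without manual steps
    "ci_cd",                 # tests and deployment triggered on every change
    "monitoring",            # latency, errors, and quality tracked in production
    "automated_retraining",  # fine-tuning pipelines triggered by drift signals
]


def maturity_level(adopted: set[str]) -> int:
    """Return the number of leading capabilities the team has adopted (0 to 5)."""
    level = 0
    for capability in CAPABILITIES:
        if capability not in adopted:
            break  # a gap stops the climb, even if later capabilities exist
        level += 1
    return level


if __name__ == "__main__":
    team = {"version_control", "automated_tests", "monitoring"}
    print(maturity_level(team))  # 2: monitoring does not count without CI/CD
```

Treating the levels as a staircase means a team with monitoring but no CI/CD still scores 2; a real assessment might weight or group capabilities differently.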

The Power of Open Source for LLM Development

Proprietary solutions can limit innovation and flexibility by locking you into vendor ecosystems. Open-source tools, on the other hand, foster collaboration and transparency. They provide a comprehensive suite of options for each stage of the LLM lifecycle, enabling you to craft a solution tailored to your specific needs.

Building a Robust Foundation with Open-Source Powerhouses

- Model Training: Use established open-source frameworks such as TensorFlow and PyTorch for LLM training. Both offer rich ecosystems of libraries and pre-trained models that can significantly accelerate your development process.

- Fine-Tuning for Specialized Tasks: Bridge the gap between generic LLM capabilities and specialized tasks with fine-tuning. Open-source libraries like Hugging Face Transformers provide access to a large collection of pre-trained models for a wide range of NLP applications. Suppose you are building a customer-service chatbot: you can start from a model pre-trained on dialogue data and fine-tune it on your own customer support conversations so that it delivers natural and informative responses.

- Serving Infrastructure: Once your LLM is trained, it’s time to make it accessible. Open-source frameworks like Flask and Django offer a robust foundation for building scalable APIs that expose your LLM’s functionalities to the world. Consider containerization technologies like Docker for packaging your LLM and its dependencies, simplifying deployment and ensuring consistency across environments.
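The serving step can be illustrated with a small JSON API. This sketch deliberately uses Python's standard-library `http.server` rather than Flask or Django so that it runs without extra dependencies. The `/generate` route, the `prompt` and `completion` fields, and the `generate` function (a placeholder that echoes its input instead of calling a model) are all hypothetical.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def generate(prompt: str) -> str:
    """Hypothetical placeholder for a real LLM call; it simply echoes the prompt."""
    return f"echo: {prompt}"


class LLMHandler(BaseHTTPRequestHandler):
    """Handles POST /generate with a JSON body like {"prompt": "..."}."""

    def do_POST(self):
        if self.path != "/generate":
            self._send_json(404, {"error": "not found"})
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            # An empty body parses as {} and then fails the 'prompt' lookup below.
            payload = json.loads(self.rfile.read(length) or b"{}")
            prompt = payload["prompt"]
        except (ValueError, KeyError, TypeError):
            self._send_json(400, {"error": "expected a JSON body with a 'prompt' field"})
            return
        self._send_json(200, {"completion": generate(prompt)})

    def _send_json(self, status: int, body: dict) -> None:
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging to stderr


def serve(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Block and serve requests until interrupted."""
    ThreadingHTTPServer((host, port), LLMHandler).serve_forever()
```

Calling `serve()` blocks and listens on port 8000; a request such as `curl -X POST http://127.0.0.1:8000/generate -d '{"prompt": "Hello"}'` returns `{"completion": "echo: Hello"}`. The same input validation and JSON contract carry over directly to a Flask or Django view, and the service can then be packaged in a Docker image.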

The LLMOps Imperative: Orchestrating a Successful LLM Production Pipeline

LLMOps, the practice of applying DevOps principles to LLMs, ensures a smooth transition from development to production.

Here’s where open-source shines again:

- Version Control: Tools like Git seamlessly manage your codebase, enabling collaboration and tracking changes throughout the development lifecycle. This fosters a transparent and accountable development environment.

- Monitoring and Logging: Gain real-time insights into your LLM’s performance with open-source solutions like Prometheus and Grafana. These tools allow for proactive identification and rectification of issues before they impact users, ensuring system stability and optimal performance.

- Continuous Integration and Deployment (CI/CD): Streamline the deployment process with CI/CD tools like Jenkins or GitHub Actions. These tools automate testing and deployment, ensuring rapid and reliable updates to your LLM system, minimizing downtime, and maximizing efficiency.
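For an LLM system, the automated tests in a CI/CD pipeline often include a check on model output quality alongside ordinary unit tests. The following minimal sketch assumes a small labeled golden set and an accuracy threshold of 0.75, both hypothetical; `keyword_model` is a naive stand-in for a real model, and `quality_gate` returns a process exit code.

```python
from typing import Callable

# Hypothetical golden set; a real one would be versioned in Git alongside the code.
GOLDEN_SET = [
    ("I love this product", "positive"),
    ("Terrible support, never again", "negative"),
    ("Works exactly as described", "positive"),
    ("The update broke everything", "negative"),
]


def evaluate(predict: Callable[[str], str], examples: list[tuple[str, str]]) -> float:
    """Return the fraction of examples where predict() matches the expected label."""
    correct = sum(1 for text, label in examples if predict(text) == label)
    return correct / len(examples)


def quality_gate(predict: Callable[[str], str], examples: list[tuple[str, str]],
                 threshold: float = 0.75) -> int:
    """Return an exit code: 0 if accuracy meets the threshold, 1 otherwise."""
    accuracy = evaluate(predict, examples)
    print(f"accuracy={accuracy:.2f} threshold={threshold:.2f}")
    return 0 if accuracy >= threshold else 1


def keyword_model(text: str) -> str:
    """Hypothetical stand-in for the real model: a naive keyword classifier."""
    negative_words = {"terrible", "never", "broke", "bad"}
    words = set(text.lower().replace(",", " ").split())
    return "negative" if words & negative_words else "positive"
```

A Jenkins or GitHub Actions step could run a script ending in `sys.exit(quality_gate(keyword_model, GOLDEN_SET))`. Both tools treat a non-zero exit status as a failed step, so a regression in output quality blocks the deployment.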

Open-Source LLMOps in Action: A Practical Example

Imagine you’ve developed a sentiment analysis LLM prototype. Here’s how open source can empower your journey to production:

- Training: Leverage TensorFlow to pre-train your LLM on a large corpus of text.

- Fine-Tuning for Sentiment Analysis: Utilize Hugging Face Transformers to fine-tune a pre-trained model on sentiment-specific data, enhancing its ability to accurately assess emotional tones within text.

- Building a Web Service: Craft a Flask API exposing your LLM’s functionalities, allowing users to submit text for sentiment analysis. Containerize your LLM and API using Docker for simplified deployment.

- Implementing LLMOps Practices: Utilize Git for version control, Prometheus for monitoring, and Jenkins for CI/CD to automate testing and deployment, creating a robust and efficient production pipeline.
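The monitoring part of this pipeline amounts to counting requests and timing predictions, then exposing the numbers in a format Prometheus can scrape. The sketch below hand-writes the Prometheus text exposition format using only the standard library; the metric names and the `classify` stand-in for the fine-tuned model are hypothetical. In practice, the official `prometheus_client` package provides `Counter` and `Histogram` types and generates this output for you.

```python
import threading
import time
from collections import Counter


class Metrics:
    """Thread-safe counters rendered in the Prometheus text exposition format."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._requests = Counter()   # predicted label -> number of requests
        self._latency_sum = 0.0      # total seconds spent classifying
        self._latency_count = 0      # number of timed requests

    def observe(self, label: str, seconds: float) -> None:
        with self._lock:
            self._requests[label] += 1
            self._latency_sum += seconds
            self._latency_count += 1

    def render(self) -> str:
        with self._lock:
            lines = [
                "# HELP sentiment_requests_total Requests served, by predicted label.",
                "# TYPE sentiment_requests_total counter",
            ]
            for label in sorted(self._requests):
                lines.append(f'sentiment_requests_total{{label="{label}"}} {self._requests[label]}')
            lines += [
                "# HELP sentiment_request_latency_seconds Time spent classifying a request.",
                "# TYPE sentiment_request_latency_seconds summary",
                f"sentiment_request_latency_seconds_sum {self._latency_sum}",
                f"sentiment_request_latency_seconds_count {self._latency_count}",
            ]
        return "\n".join(lines) + "\n"


METRICS = Metrics()


def classify(text: str) -> str:
    """Hypothetical stand-in for the fine-tuned sentiment model."""
    return "negative" if "bad" in text.lower() else "positive"


def instrumented_classify(text: str) -> str:
    """Classify text and record the predicted label and elapsed time."""
    start = time.perf_counter()
    label = classify(text)
    METRICS.observe(label, time.perf_counter() - start)
    return label
```

Serving the output of `METRICS.render()` from a `/metrics` HTTP endpoint lets Prometheus scrape it on a schedule, and Grafana can then chart request rates and average latency (`_sum` divided by `_count`).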

The Open-Source Future of LLMs

The open-source LLMOps landscape is brimming with potential. As the community flourishes, we can expect even more powerful tools and frameworks to emerge. This will democratize access to the power of LLMs and accelerate innovation across industries.

Beyond the tools mentioned, consider exploring open-source platforms like Kubeflow for managing machine learning workflows at scale. Additionally, distributed training frameworks like Horovod can be leveraged to train massive LLMs on clusters of machines, further accelerating the development process.
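Frameworks such as Horovod implement data-parallel training: each worker computes gradients on its own shard of the data, and an allreduce operation averages those gradients so every worker applies the same update. The single-process simulation below illustrates only that averaging step, using a hypothetical linear model and synthetic data; it does not use Horovod's API.

```python
def gradient(w: float, b: float, batch: list[tuple[float, float]]) -> tuple[float, float]:
    """Mean-squared-error gradient for fitting y = w*x + b over one batch."""
    n = len(batch)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in batch) / n
    return grad_w, grad_b


def allreduce_mean(grads: list[tuple[float, float]]) -> tuple[float, float]:
    """Average per-worker gradients; stands in for the allreduce collective."""
    k = len(grads)
    return sum(g[0] for g in grads) / k, sum(g[1] for g in grads) / k


def data_parallel_step(w: float, b: float, shards, lr: float) -> tuple[float, float]:
    # Each worker's local gradient (computed sequentially here, in parallel on a cluster).
    local = [gradient(w, b, shard) for shard in shards]
    grad_w, grad_b = allreduce_mean(local)
    # Every worker applies the identical averaged update, keeping replicas in sync.
    return w - lr * grad_w, b - lr * grad_b


def train(shards, steps: int = 2000, lr: float = 0.05) -> tuple[float, float]:
    w, b = 0.0, 0.0
    for _ in range(steps):
        w, b = data_parallel_step(w, b, shards, lr)
    return w, b


# Synthetic data from y = 3x + 1, split evenly across 4 simulated workers.
DATA = [(i / 10, 3 * (i / 10) + 1) for i in range(40)]
SHARDS = [DATA[i::4] for i in range(4)]
```

Averaging shard gradients reproduces the full-batch gradient exactly only when the shards are the same size, which is why the synthetic data is split evenly.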

Embrace the open-source advantage and embark on your LLM production journey with confidence. The world awaits the unique capabilities your LLM has to offer!