By Rekha Kodali

As organizations accelerate the adoption of Large Language Models (LLMs) for enterprise applications, the emphasis is rapidly shifting from experimentation to operationalization — a phase now widely referred to as LLMOps. This phase is not just about deploying models into production but about sustaining them responsibly, efficiently, and at scale. Within this lifecycle, Model Testing emerges as the critical foundation for ensuring that LLM-based systems are reliable, safe, compliant, and deliver consistent business value.

However, testing LLMs is fundamentally different from testing traditional machine learning models. Classic ML models often operate within well-defined, deterministic input-output boundaries and evaluation metrics such as accuracy, precision, or recall. In contrast, LLMs are probabilistic, non-deterministic, context-sensitive, and heavily data-driven. The same prompt can generate multiple valid responses, and output quality can vary based on subtle differences in phrasing, prompt structure, or system context. This increases the complexity of validation and makes conventional testing techniques insufficient.
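To make the point concrete, here is a minimal sketch of why an exact-match assertion breaks down for LLM output while a similarity-based check still works. The three sample responses are made up for illustration, and character-level similarity from Python's `difflib` stands in for whatever semantic metric a team would actually choose.

```python
from difflib import SequenceMatcher
from itertools import combinations


def pairwise_consistency(responses: list[str]) -> float:
    """Mean character-level similarity over all response pairs (0.0 to 1.0)."""
    pairs = list(combinations(responses, 2))
    if not pairs:
        return 1.0  # zero or one response is trivially consistent
    return sum(SequenceMatcher(None, a, b).ratio() for a, b in pairs) / len(pairs)


# Hypothetical outputs from three runs of the same prompt.
samples = [
    "The refund window is 30 days from delivery.",
    "Refunds are accepted within 30 days of delivery.",
    "You can request a refund up to 30 days after delivery.",
]

# A classic exact-match test fails even though all three answers are valid.
exact_match = len(set(samples)) == 1

# A graded score lets the test assert "similar enough" against a threshold.
score = pairwise_consistency(samples)
```

In practice the threshold, and the choice between lexical and embedding-based similarity, would need to be tuned per use case.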

Therefore, testing LLMs extends far beyond measuring accuracy. It involves assessing how models behave in real-world scenarios, how well they align with business objectives, whether they adhere to ethical and legal standards, and whether they are ready for enterprise-grade governance. Key areas include:

- Functional correctness and consistency across varied inputs and prompt conditions.

- Hallucination control to ensure factual reliability.

- Bias, toxicity, and ethical compliance in generated content.

- Security and data privacy, particularly in regulated industries.

- Explainability, traceability, and audit readiness for governance and risk teams.

In essence, LLM testing is not just a technical necessity — it’s a trust-building mechanism. It ensures that AI systems don’t just work, but work responsibly, predictably, and in alignment with both user expectations and organizational values.

Challenges and gaps include:

- Lack of standard benchmarks for LLMs.

- High cost of evaluation, especially for large-scale inference and human reviews.

- Prompt sensitivity leading to unpredictable variations.

- Versioning complexity with frequent model updates from vendors (e.g., OpenAI, Anthropic, Mistral).

LLM Testing Maturity Levels: Organizations are at different levels of maturity in LLM testing. The table below maps each maturity level to its key capabilities.

| Level | Description | Key Capabilities |
| --- | --- | --- |
| Level 1 – Ad-hoc | Manual, sporadic testing | Simple prompt validation |
| Level 2 – Structured | Defined test suites | Automated evals, regression tests |
| Level 3 – Operationalized | Integrated CI/CT | Continuous feedback + governance dashboards |
| Level 4 – Autonomous | Self-healing, adaptive testing | AI-driven test case generation and evaluation |

As organizations embrace LLM- and Gen AI-based solutions, we recommend an approach based on the LLMOps Testing Blueprint to reach higher levels of maturity. Let's look at what it is.

LLMOps Testing Blueprint: An LLMOps Testing Blueprint defines a systematic, end-to-end approach for validating, monitoring, and governing Large Language Model (LLM) performance across the full lifecycle, from pre-deployment evaluation to continuous in-production testing.

This blueprint bridges MLOps principles (automation, reproducibility, monitoring) with new LLM-specific dimensions (prompt behaviour, safety, reasoning quality, and interpretability).

Its purpose is to ensure that LLM-driven applications are:

| Attribute | Description |
| --- | --- |
| Functional | Performs tasks accurately and consistently across diverse inputs. |
| Safe | Free from harmful, biased, or hallucinated outputs. |
| Reliable | Consistent performance across updates and environments. |
| Explainable and governed | Traceable and compliant with AI policies. |

Key Principles

Let us discuss the LLMOps Testing Blueprint architecture in detail.

Blueprint Architecture

The LLMOps Testing Blueprint is structured across four core layers and eight testing domains.

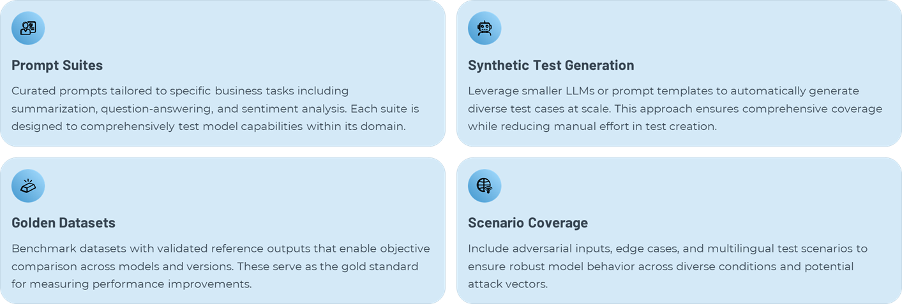

Layer 1: Test Data Management

Building and maintaining realistic, diverse, and scalable datasets forms the foundation of effective LLM evaluation. This critical first layer ensures that testing infrastructure can accurately assess model performance across a wide range of real-world scenarios and business applications.

The tools and enablers supporting this layer include PromptBench for systematic prompt testing, LangSmith datasets for comprehensive data management, custom prompt repositories for organization-specific needs, and DataProfiler for analyzing data quality and characteristics. Together, these components create a robust foundation for all subsequent testing activities.
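As an illustration of a custom prompt repository, the sketch below stores test cases as JSON Lines and derives a content hash so that evaluation results can be tied to an exact dataset version. The `PromptCase` fields and the 12-character version tag are assumptions made for this example, not a format prescribed by any of the tools above.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PromptCase:
    """One test prompt plus the expectations attached to it (hypothetical schema)."""
    case_id: str
    prompt: str
    must_contain: tuple[str, ...] = ()
    tags: tuple[str, ...] = ()


def to_jsonl(cases: list[PromptCase]) -> str:
    """Serialize cases to JSON Lines, one case per line, with stable key order."""
    return "\n".join(json.dumps(asdict(c), sort_keys=True) for c in cases)


def from_jsonl(text: str) -> list[PromptCase]:
    """Parse JSON Lines back into PromptCase objects."""
    cases = []
    for line in text.splitlines():
        if line.strip():
            raw = json.loads(line)
            cases.append(PromptCase(
                case_id=raw["case_id"],
                prompt=raw["prompt"],
                must_contain=tuple(raw["must_contain"]),
                tags=tuple(raw["tags"]),
            ))
    return cases


def dataset_version(cases: list[PromptCase]) -> str:
    """Short content hash that is independent of case ordering."""
    canonical = to_jsonl(sorted(cases, key=lambda c: c.case_id))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


cases = [
    PromptCase("c2", "Summarize the refund policy.", ("refund",), ("functional",)),
    PromptCase("c1", "Translate 'hello' to French.", ("bonjour",), ("functional", "i18n")),
]
version = dataset_version(cases)
```

Recording `version` alongside every evaluation run makes it possible to tell whether a score changed because the model changed or because the dataset did.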

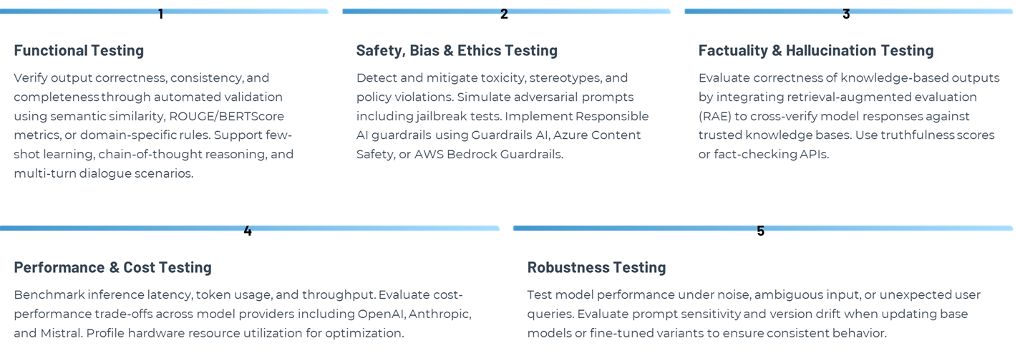

Layer 2: Evaluation & Testing Framework

This layer operationalizes testing through modular test suites that comprehensively assess LLM performance across multiple critical dimensions. Each testing category addresses specific risks and quality requirements essential for production deployment.

The toolkit supporting this framework includes LangTest for robustness, bias, and fairness testing; TruLens for evaluating and tracing LLM applications; DeepEval for unit-test-style LLM evaluation; Promptfoo for prompt testing and red teaming; W&B Evaluation for experiment tracking; and Guardrails AI for output validation and safety enforcement. Used together, these tools cover the main dimensions of LLM quality and safety.
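The idea of modular test suites can be sketched in plain Python without committing to any one tool. In this hypothetical example, each testing dimension owns a list of small evaluator functions, and the runner reports a pass rate per dimension. The evaluators, the blocklist, and `stub_model` are all placeholders; a real setup would call the deployed model and use the evaluators offered by the chosen framework.

```python
from typing import Callable

Evaluator = Callable[[str, str], bool]  # (prompt, response) -> passed?

BLOCKED_TERMS = {"password", "ssn"}  # hypothetical blocklist


def non_empty(prompt: str, response: str) -> bool:
    return bool(response.strip())


def within_length(prompt: str, response: str) -> bool:
    return len(response) <= 200


def no_blocked_terms(prompt: str, response: str) -> bool:
    lowered = response.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


# Each dimension is an independent, swappable suite.
SUITES: dict[str, list[Evaluator]] = {
    "functional": [non_empty, within_length],
    "safety": [no_blocked_terms],
}


def run_suites(model: Callable[[str], str], prompts: list[str],
               suites: dict[str, list[Evaluator]]) -> dict[str, float]:
    """Return the pass rate per dimension, calling the model once per prompt."""
    responses = {p: model(p) for p in prompts}
    report = {}
    for dimension, evaluators in suites.items():
        checks = [ev(p, r) for p, r in responses.items() for ev in evaluators]
        report[dimension] = sum(checks) / len(checks) if checks else 0.0
    return report


def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM call; leaks a secret for one prompt on purpose."""
    if "credentials" in prompt:
        return "Your password is hunter2."
    return "Echo: " + prompt


report = run_suites(
    stub_model,
    ["Summarize the refund policy.", "Show my credentials."],
    SUITES,
)
```

Keeping the suites separate means a safety regression shows up in its own score instead of being averaged away by healthy functional results.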

Layer 3: Continuous Testing & Monitoring

Extending model testing beyond deployment requires a sophisticated orchestration of automated pipelines, real-time monitoring infrastructure, and continuous feedback mechanisms. This layer transforms LLM operations from static deployments into dynamic systems that adapt and improve over time. By embedding testing into CI/CD workflows and establishing CI/CT (Continuous Testing) loops, organizations can detect and respond to model degradation, drift, and emerging risks before they impact end users.

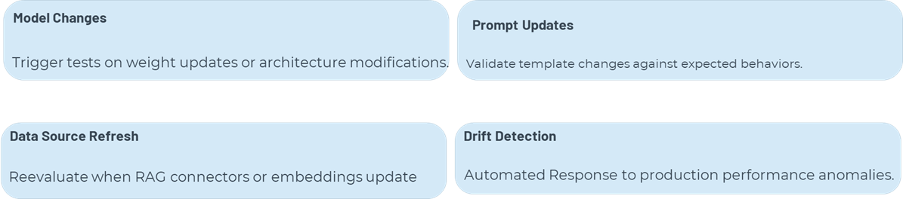

Automation Pipelines

The foundation of continuous testing lies in deeply integrating test suites into MLOps pipelines. These automated workflows must trigger comprehensive re-evaluation across multiple conditions: when model weights are updated, when prompt templates undergo revision, when data sources or RAG connectors are modified, or when drift signals emerge from production monitoring systems. This automation ensures that every change—whether architectural, parametric, or infrastructural—undergoes rigorous validation before reaching production environments. The pipeline architecture should support parallel test execution, version-controlled test configurations, and intelligent test selection based on the nature of changes detected.
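One way to picture intelligent test selection is a simple lookup from change type to the suites that change should trigger. The mapping below is an assumption made for illustration (the suite names are hypothetical); each team would define its own based on risk.

```python
from enum import Enum


class Change(Enum):
    MODEL_WEIGHTS = "model_weights"
    PROMPT_TEMPLATE = "prompt_template"
    RAG_SOURCE = "rag_source"
    DRIFT_SIGNAL = "drift_signal"


# Hypothetical policy: which suites to re-run for each kind of change.
SUITES_BY_CHANGE: dict[Change, set[str]] = {
    Change.MODEL_WEIGHTS: {"functional", "safety", "bias", "regression"},
    Change.PROMPT_TEMPLATE: {"functional", "regression"},
    Change.RAG_SOURCE: {"functional", "groundedness"},
    Change.DRIFT_SIGNAL: {"regression", "safety"},
}


def select_suites(changes: list[Change]) -> list[str]:
    """Union of the suites required by every detected change, in stable order."""
    selected: set[str] = set()
    for change in changes:
        selected |= SUITES_BY_CHANGE[change]
    return sorted(selected)


# A commit that touches both a prompt template and a RAG connector.
to_run = select_suites([Change.PROMPT_TEMPLATE, Change.RAG_SOURCE])
```

A pipeline step could call `select_suites` with the changes detected in a commit and fan the resulting suites out to parallel jobs.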

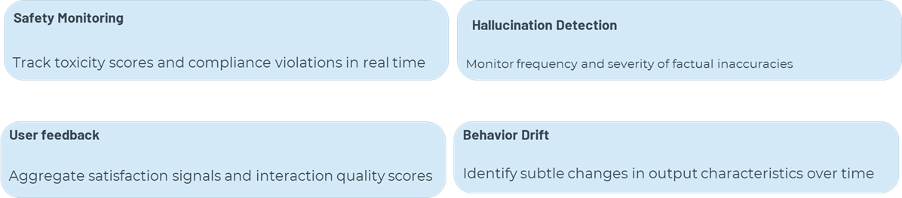

Production Monitoring

Real-time production monitoring creates an observability layer that captures the full spectrum of model behavior in live environments. Logging systems must track user interactions with granular detail, identifying toxicity breaches, compliance violations, hallucination patterns, and user feedback signals such as thumbs up/down ratings. This telemetry extends beyond simple performance metrics to encompass behavioral drift indicators—subtle shifts in output distributions, response tone variations, or emerging failure modes that may not trigger traditional alerting thresholds. Telemetry dashboards should provide both high-level executive summaries and deep dive debugging capabilities, enabling teams to investigate anomalies and trace issues back to specific model versions, prompt configurations, or input patterns.
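A shift in output distributions can be quantified with something as simple as a Population Stability Index (PSI) over bucketed response lengths. The sketch below is one possible drift indicator, not the method used by any particular monitoring product; the bucket boundaries and the sample data are assumptions.

```python
import math
from collections import Counter

BUCKETS = ("short", "medium", "long")


def bucket(length: int) -> str:
    """Assign a response length (in characters) to a coarse bucket."""
    if length < 50:
        return "short"
    if length < 200:
        return "medium"
    return "long"


def distribution(lengths: list[int], eps: float = 1e-6) -> dict[str, float]:
    """Share of responses per bucket, floored at eps to avoid log(0)."""
    if not lengths:
        raise ValueError("need at least one observation")
    counts = Counter(bucket(n) for n in lengths)
    return {b: max(counts[b] / len(lengths), eps) for b in BUCKETS}


def psi(baseline: list[int], live: list[int]) -> float:
    """Population Stability Index between baseline and live length distributions."""
    expected = distribution(baseline)
    actual = distribution(live)
    return sum(
        (actual[b] - expected[b]) * math.log(actual[b] / expected[b])
        for b in BUCKETS
    )


# Hypothetical data: responses used to be short or medium, now they are all long.
baseline_lengths = [10] * 50 + [100] * 50
live_lengths = [300] * 100
drift_score = psi(baseline_lengths, live_lengths)
```

A PSI above roughly 0.2 is often treated as a meaningful shift, but that cut-off is a rule of thumb and should be calibrated against real traffic. The same calculation can be applied to tone labels, refusal rates, or any other categorical property of the output.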

A robust continuous testing infrastructure relies on specialized tooling designed for LLM observability and evaluation. Evidently AI and Arize AI provide drift detection and monitoring capabilities. Weights & Biases offers experiment tracking and model versioning across the development lifecycle. Langfuse enables detailed trace analysis of LLM chains and prompt sequences. OpenAI Evals provides a framework for evaluating model behavior. Combining these tools gives end-to-end visibility from development through production.

Continuous Feedback Loop

The most powerful aspect of Layer 3 is the closed-loop system that channels production insights back into model improvement processes. User ratings, test results, and monitoring telemetry feed directly into retraining pipelines, creating a self-improving system. A feedback registry serves as the institutional memory, mapping specific prompt patterns to their outcomes and associated corrective actions. This registry enables pattern recognition across deployments, helping teams identify systemic issues and develop preventive strategies. The feedback loop should incorporate both automated remediation for known issues and escalation paths for novel failure modes requiring human intervention.
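The feedback registry described above could look something like the following sketch, which maps prompt patterns to observed outcomes and corrective actions, and escalates anything it has not seen before. The regex-based matching, the field names, and the action labels are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class RegistryEntry:
    pattern: str            # regex describing a family of prompts
    outcome: str            # what went wrong (or right) for this family
    corrective_action: str  # label of the automated remediation to apply


class FeedbackRegistry:
    """Institutional memory: known prompt patterns and how to handle them."""

    ESCALATE = "escalate_to_human"

    def __init__(self) -> None:
        self._entries: list[RegistryEntry] = []

    def record(self, entry: RegistryEntry) -> None:
        self._entries.append(entry)

    def route(self, prompt: str) -> str:
        """Return the corrective action for a known pattern, else escalate."""
        for entry in self._entries:
            if re.search(entry.pattern, prompt, re.IGNORECASE):
                return entry.corrective_action
        return self.ESCALATE


registry = FeedbackRegistry()
registry.record(RegistryEntry(
    pattern=r"\binterest rate\b",
    outcome="hallucinated figures",
    corrective_action="force_retrieval_grounding",
))

known = registry.route("What is the current interest rate on savings?")
novel = registry.route("Write a poem about compliance.")
```

Known failure families get an automated fix, while novel ones fall through to a human reviewer, which mirrors the two paths described in the paragraph above.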


Layer 4: Governance & Reporting

- Objective: Ensure explainability, traceability, and compliance across all LLM tests.

Governance Components:

- Model cards: Document purpose, risks, metrics, datasets, and known limitations.

- Evaluation reports: Store versioned test results, scorecards, and approval workflows.

- Compliance integration: Align with frameworks like ISO 42001, NIST AI RMF, or internal Responsible AI policies.

- Auditability: Immutable logs of prompts, outputs, and reviewer decisions.
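For the auditability component, one common way to approximate immutable logs is a hash chain, where each entry's hash covers both its own content and the previous entry's hash. The sketch below is a minimal in-memory version under that assumption. Strictly speaking it is tamper-evident and not tamper-proof; a production system would also need write-once storage.

```python
import hashlib
import json


def _digest(record: dict, prev_hash: str) -> str:
    """SHA-256 over the canonical JSON of the record plus the previous hash."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self) -> None:
        self._entries: list[dict] = []

    def append(self, prompt: str, output: str, reviewer_decision: str) -> None:
        prev = self._entries[-1]["hash"] if self._entries else self.GENESIS
        record = {
            "prompt": prompt,
            "output": output,
            "reviewer_decision": reviewer_decision,
        }
        self._entries.append(
            {"record": record, "prev_hash": prev, "hash": _digest(record, prev)}
        )

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = self.GENESIS
        for entry in self._entries:
            if entry["prev_hash"] != prev:
                return False
            if entry["hash"] != _digest(entry["record"], prev):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("Summarize contract X.", "Contract X covers ...", "approved")
log.append("List customer emails.", "I can't share that.", "approved")
intact = log.verify()
```

If anyone later edits a stored prompt, output, or reviewer decision, `verify()` returns `False`, which gives auditors a cheap integrity check.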

Dashboards & Reporting:

- Unified LLMOps dashboard combining:

  - Quality metrics (accuracy, hallucination rate)

  - Cost metrics (tokens, latency)

  - Compliance metrics (toxicity, bias)

  - Human feedback summaries
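The dashboard inputs listed above can be rolled up from per-request records. The sketch below assumes a hypothetical `RequestRecord` produced by the logging layer, with flags already set by upstream evaluators; it only shows the aggregation step.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RequestRecord:
    tokens: int
    latency_ms: float
    hallucinated: bool          # flagged by an upstream evaluator
    toxic: bool                 # flagged by an upstream evaluator
    thumbs_up: Optional[bool]   # None when the user gave no feedback


def summarize(records: list[RequestRecord]) -> dict:
    """Aggregate quality, cost, compliance, and feedback metrics."""
    if not records:
        raise ValueError("no records to summarize")
    n = len(records)
    rated = [r for r in records if r.thumbs_up is not None]
    return {
        "hallucination_rate": sum(r.hallucinated for r in records) / n,
        "toxicity_rate": sum(r.toxic for r in records) / n,
        "avg_tokens": sum(r.tokens for r in records) / n,
        "avg_latency_ms": sum(r.latency_ms for r in records) / n,
        "positive_feedback_rate": (
            sum(r.thumbs_up for r in rated) / len(rated) if rated else None
        ),
    }


# Hypothetical records for four requests.
records = [
    RequestRecord(100, 100.0, False, False, True),
    RequestRecord(300, 300.0, True, False, False),
    RequestRecord(100, 100.0, False, False, None),
    RequestRecord(300, 300.0, False, False, True),
]
summary = summarize(records)
```

The resulting dictionary can be pushed to whichever dashboarding tool the team already uses.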

Tools & Enablers: MLflow, ModelOp, internal dashboards.

Expected Business Outcomes

- Improved Trust & Adoption: Assurance of safe and consistent model behaviour.

- Operational Efficiency: Reduced manual review effort via automation.

- Regulatory Readiness: Documented audit trails for Responsible AI compliance.

- Performance Optimization: Cost and latency monitoring leading to ROI gains.

- Faster Release Cycles: Integrated CI/CT enabling agile model iteration.

Conclusion

Model testing in LLMOps will evolve towards:

- Self-healing models with real-time feedback correction.

- Adaptive test suites driven by user behavior analytics.

- Standardization of test frameworks akin to ISO-like quality benchmarks for generative AI.

The organizations that invest early in systematic LLM testing pipelines will lead in scaling AI safely, efficiently, and ethically.

Rekha is a Vice President and AI Service Line Head at SLK Software Pvt Ltd. Recognized for her deep expertise in enterprise architecture and IP-led delivery models, Rekha holds certifications in Microsoft Technologies, TOGAF 9, and IASA. She brings extensive experience leading large-scale digital transformation programs and securing multi-million-dollar strategic wins.