In the GenAI era, the role of Quality Engineering (QE) is under the spotlight like never before. Some whisper that QE may soon be obsolete. After all, if developer agents can code autonomously, why not let GenAI-powered QE agents generate test cases from user stories, synthesize test data, and automate regression suites with near-perfect precision? Playwright and its peers are already showing glimpses of this future. In corporate corridors, by the water coolers, and on smoke breaks, the question lingers: are we witnessing the sunset of QE as a discipline? The reality, however, is far more nuanced. QE is not disappearing; it is being reshaped, redefined, and elevated to meet the demands of an AI-driven world.

To forecast the future of QE, it helps to look back at history. In the mainframe era, the role of Quality Engineering was largely confined to system, integration, performance/load, and user acceptance testing. But as the client–server model gained traction, the testing landscape shifted dramatically. What once took weeks on mainframes, such as preparing and executing performance tests, became faster and more accessible with tools like WinRunner and LoadRunner. The scope didn’t shrink; it expanded. New dimensions emerged: user experience testing, cross-browser validation, and security/penetration testing. The mobile revolution repeated the cycle, introducing a new layer of complexity: cross-device testing across Android, iOS, and beyond. History shows us a clear pattern: every radical shift in technology simplifies some aspects of QE while simultaneously spawning entirely new challenges. GenAI will be no exception.

Let’s begin by busting one of the most common myths: that GenAI can effortlessly create flawless test cases straight from user stories. Having spent more than two decades across dozens of organizations as both employee and consultant, I can say this with conviction: I have yet to see any team consistently write well-formed, detail-rich user stories. Most stories are cryptic by design. Teams rely on shared product knowledge, fill in the gaps during sprint grooming, or lean on business analysts to add critical context.

Take a simple example:

User story: ‘Verify that a user can log in to the banking portal.’

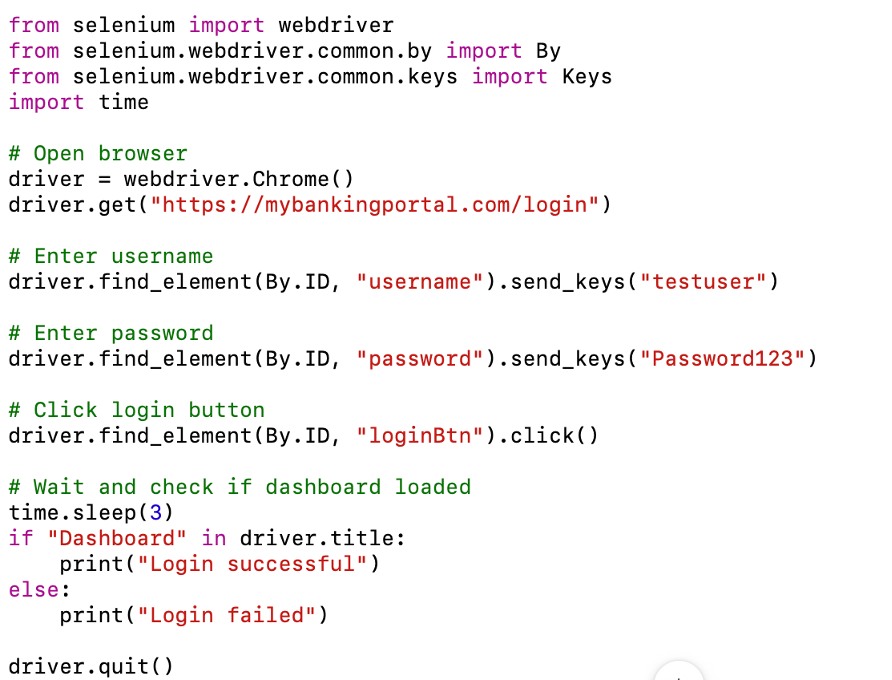

Test script generated :

A large language model dutifully generates a script. It is technically correct, but dangerously shallow. What does it miss?

- Hardcoded credentials – insecure, instead of pulling from environment variables or a test data store.

- No negative testing – assumes a happy path; ignores wrong passwords, locked accounts, SQL injection attempts.

- Missing 2FA – most banking portals require OTP/email confirmation, which the AI skips due to vague input.

- Weak validation – only checks a page title instead of confirming presence of sensitive, secure elements.

- Flaky waits – relies on sleep(3) instead of explicit waits.

- No security/compliance lens – fails to ask: Is HTTPS enforced? Are error messages properly masked?

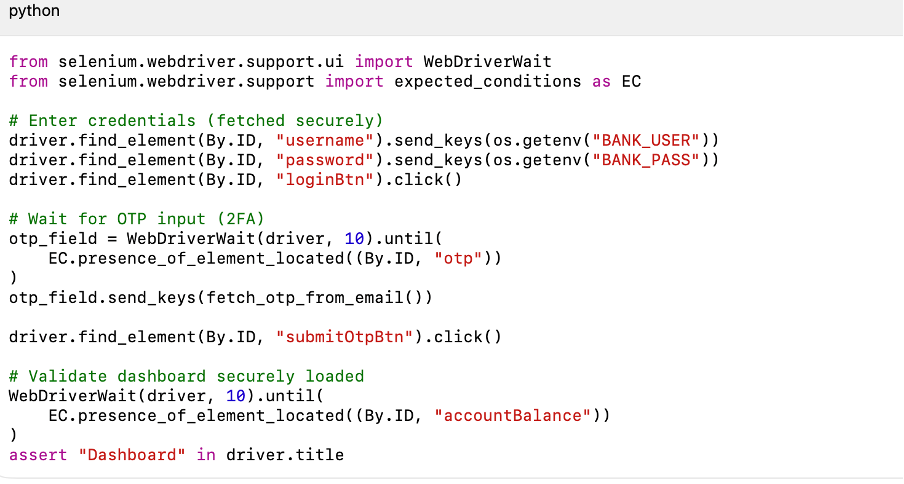

Only after the user story itself is embellished do we finally get a more robust script: ‘Verify that a user can log in to the banking portal using valid credentials with OTP verification, and confirm that sensitive data (like balance) is displayed only after secure login.’
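To make two of the gaps listed above concrete, here is a minimal, framework-agnostic Python sketch of what "no hardcoded credentials" and "no flaky waits" can look like. It is an illustration under stated assumptions, not the script from the example: the environment variable names `BANK_TEST_USER` and `BANK_TEST_PASSWORD` and both helper functions are hypothetical.

```python
import os
import time


def load_credentials(env=os.environ):
    """Read test credentials from the environment instead of hardcoding them.

    The variable names are hypothetical; use whatever your pipeline defines.
    """
    try:
        return env["BANK_TEST_USER"], env["BANK_TEST_PASSWORD"]
    except KeyError as missing:
        raise RuntimeError(
            f"Missing environment variable: {missing.args[0]}"
        ) from None


def wait_until(condition, timeout=10.0, interval=0.2):
    """Explicit wait: poll `condition` until it is truthy or `timeout` expires.

    Unlike a fixed sleep(3), this returns as soon as the condition holds
    and fails loudly when it never does.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Condition not met within {timeout} seconds")
        time.sleep(interval)
```

In a real suite you would rarely hand-roll the wait; Selenium's `WebDriverWait`, for instance, offers the same poll-until-timeout behavior. The point is that someone has to know these patterns are missing before they can ask for them.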

But here’s the catch: this is just for the simplest of test cases. Imagine repeating this effort for thousands of cases, spanning complex workflows, intricate integrations, and endless edge conditions. The idea that you can simply ‘empty the Jira repository into an LLM and get production-ready QE scripts’ is not just naïve; it overlooks the nuance, creativity, and judgment that define real Quality Engineering. LLMs are your accelerators, not your replacements. The real value lies in what AI overlooks and you catch.

If test scripts pose one challenge, test data is an even trickier frontier. For testers, data that mirrors production is a blessing; data that strays too far is a nightmare. Left to itself, a large language model will naturally try to generate test data that looks very close to production. That may be convenient, but here’s the real question: can it stand up to compliance scrutiny?

The danger lies in re-identification. Even if a dataset is formally ‘de-identified,’ hidden patterns can betray the privacy it promises. Imagine this scenario: you have a payer database containing de-identified patient records along with the types of procedures performed in the last two quarters. Separately, a hospital dataset leaks with details of surgeries performed in the same period. Neither contains direct PII. Yet, when the two are cross-referenced, it becomes alarmingly easy for an analyst or even an algorithm to triangulate identities. If one of the patients happens to be a public figure, with more details already in the open domain, the re-identification becomes almost trivial.
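The cross-referencing described above can be sketched in a few lines of Python. Everything in this sketch is an assumption made for illustration: the records are invented, and the choice of quasi-identifiers (`zip3`, `birth_year`, `procedure`, `quarter`) is hypothetical rather than taken from any real payer or hospital schema. It flags records that can be linked one-to-one across the two datasets and reports the smallest group size, the "k" in k-anonymity.

```python
from collections import Counter

# Hypothetical quasi-identifiers: harmless alone, identifying in combination.
QUASI_IDENTIFIERS = ("zip3", "birth_year", "procedure", "quarter")

# Invented records for illustration; neither set holds direct PII.
payer_records = [
    {"member": "M1", "zip3": "212", "birth_year": 1961,
     "procedure": "knee replacement", "quarter": "Q1"},
    {"member": "M2", "zip3": "212", "birth_year": 1984,
     "procedure": "appendectomy", "quarter": "Q1"},
    {"member": "M3", "zip3": "100", "birth_year": 1984,
     "procedure": "appendectomy", "quarter": "Q2"},
    {"member": "M4", "zip3": "100", "birth_year": 1984,
     "procedure": "appendectomy", "quarter": "Q2"},
]
hospital_records = [
    {"case": "H7", "zip3": "212", "birth_year": 1961,
     "procedure": "knee replacement", "quarter": "Q1"},
    {"case": "H8", "zip3": "100", "birth_year": 1984,
     "procedure": "appendectomy", "quarter": "Q2"},
]


def key_of(record, fields=QUASI_IDENTIFIERS):
    """Build the combined quasi-identifier key for one record."""
    return tuple(record[f] for f in fields)


def smallest_group_size(records, fields=QUASI_IDENTIFIERS):
    """Return k: the size of the smallest group sharing one key."""
    counts = Counter(key_of(r, fields) for r in records)
    return min(counts.values())


def linkable_pairs(payer, hospital, fields=QUASI_IDENTIFIERS):
    """Pair records whose key is unique in both datasets."""
    payer_counts = Counter(key_of(r, fields) for r in payer)
    hospital_counts = Counter(key_of(r, fields) for r in hospital)
    payer_by_key = {key_of(r, fields): r for r in payer}
    return [
        (payer_by_key[key_of(h, fields)]["member"], h["case"])
        for h in hospital
        if payer_counts[key_of(h, fields)] == 1
        and hospital_counts[key_of(h, fields)] == 1
    ]


print(smallest_group_size(payer_records))              # 1
print(linkable_pairs(payer_records, hospital_records))  # [('M1', 'H7')]
```

Here the knee-replacement record is unique on both sides, so it links cleanly, while the two identical appendectomy records shield each other. A k of 1 is the warning sign: at least one record stands alone and is a candidate for re-identification.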

This risk has always existed, even in the pre-LLM world. But with GenAI models synthesizing and generating data without the guardrails of accountability, the exposure grows sharper. LLMs won’t sit across the table with compliance officers. That responsibility still rests squarely with Quality Engineering: to ensure data remains useful for testing but never drifts into a minefield of re-identification and compliance violations.

What we’ve explored so far only scratches the surface of why LLMs cannot and should not be seen as replacements for Quality Engineering. Yes, they can accelerate certain tasks, but they also expose blind spots, compliance risks, and the limits of context-free automation. At the same time, GenAI is not just a challenge; it is a catalyst for entirely new QE frontiers: evaluations, guardrail validations, and hallucination testing, to name a few. These emerging opportunities demand fresh thinking and redefined skillsets. I will unpack these dimensions in the next edition of Navigating the AI Frontier.

Shammy Narayanan is the Vice President of Platform, Data, and AI at Welldoc. Holding 11 cloud certifications, he combines deep technical expertise with a strong passion for artificial intelligence. With over two decades of experience, he focuses on helping organizations navigate the evolving AI landscape. He can be reached at shammy45@gmail.com.