(What follows is Part 1. Part 2, which addresses “Benefits and Challenges of Each Approach,” will appear on 5/21.)

By Bala Kalavala, Chief Architect & Technology Evangelist

Event-Driven Architecture (EDA) and Data-Driven Architecture (DDA) represent two pivotal paradigms shaping modern enterprise landscapes. EDA is fundamentally an architectural pattern constructed from small, decoupled services that publish, consume, or route events, where an event signifies a state change or an update. This approach champions real-time responsiveness and operational agility. Conversely, DDA is an approach that prioritizes data as a core element in shaping applications and services, leveraging data analytics and real-time insights to inform decisions, optimize performance, and enhance user experiences.

Individually, EDA enables enterprises to build highly scalable and resilient systems that can respond instantly to a multitude of triggers, ranging from customer interactions to sensor readings. DDA empowers organizations with strategic intelligence, enabling them to understand complex patterns, predict future trends, and optimize operations through the systematic collection and analysis of data.

However, the true transformative power emerges not from their isolated application but from their synergistic interplay. Modern enterprises increasingly find that a purely reactive stance (EDA alone) or a solely analytical posture (DDA alone) is insufficient. The competitive edge lies in the convergence of EDA’s reactivity with DDA’s proactivity, creating systems that can sense, analyze, and respond intelligently in real-time. This combination fosters a continuous loop of action, learning, and optimized reaction, driving businesses towards becoming more adaptive and intelligent entities.

Key technological advancements, including the integration of Artificial Intelligence (AI) and Machine Learning (ML), the maturation of real-time stream processing, and the evolution of concepts like Data Mesh, are profoundly influencing both architectures and fostering their convergence. AI/ML, for instance, is not only enhancing event processing within EDA through intelligent routing and correlation but also supercharging DDA with advanced predictive and prescriptive analytics.

Defining Event-Driven Architecture (EDA)

Event-Driven Architecture (EDA) is a software design pattern where system behavior and communication are orchestrated around the production, detection, and consumption of “events”. An event represents a significant occurrence or a change in state within a system, such as an item being placed in a shopping cart, a sensor reading exceeding a threshold, or a financial transaction completing. The core purpose of EDA is to enable systems to react to these events in real-time or near real-time, thereby fostering responsiveness, agility, and loose coupling between system components.

Key Components of EDA:

The primary components that constitute an EDA are:

- Event Producers: These are the system components or services that detect business or system occurrences and generate events. Examples include user interface interactions, IoT devices sending telemetry data, or backend applications noting a change in data state. A fundamental characteristic is that producers are decoupled from consumers; they publish events without knowledge of which services, if any, will consume them.

- Event Brokers (Event Channels/Routers/Message-Oriented Middleware): These act as intermediaries, or a “post office,” for events. Producers send events to the broker, which then filters and routes these events to interested consumers. This component is critical for decoupling producers from consumers and managing the flow of events. Different models and topologies exist for event brokers:

  - Publish-Subscribe (Pub/Sub) Model: The broker maintains a list of subscriptions to specific event types or topics. When an event is published, the broker delivers it to all active subscribers. Typically, in this model, events are not replayed for new subscribers after initial delivery.

  - Event Streaming Model: Events are written to an ordered, persistent log (a stream). Consumers read from the stream at their own pace, tracking their position (offset). This model allows for event replay and for new consumers to process historical events. Platforms like Apache Kafka are prominent examples.

  - Broker Topology: Components broadcast events, and other components listen and react if interested. This is highly decoupled and suitable for simpler event processing flows but may lack central coordination for complex transactions.

  - Mediator Topology: An event mediator orchestrates the flow of events, often managing state, error handling, and complex event sequences. This offers more control but can introduce a potential bottleneck and tighter coupling around the mediator.

- Event Consumers (Subscribers/Handlers): These are components that subscribe to specific types of events or event streams. Upon receiving an event, a consumer processes it and performs an appropriate action, which might include updating its own state, invoking other services, or producing new events. Consumers operate independently of producers and other consumers.

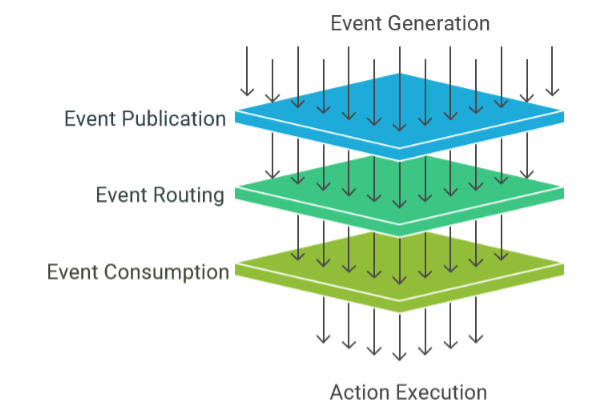

Figure: EDA Event Processing Funnel
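To make the roles of producer, broker, and consumer concrete, here is a minimal in-memory sketch of the pub/sub model. The class `InMemoryBroker`, the event names, and the handler functions are all hypothetical illustrations; a production system would use a real broker rather than this toy.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Event = dict  # assumed event shape: {"type": ..., "payload": ...}
Handler = Callable[[Event], None]


class InMemoryBroker:
    """Toy pub/sub broker: routes each event to handlers subscribed to its type."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event: Event) -> int:
        """Deliver the event to current subscribers; return how many received it."""
        handlers = self._subscribers.get(event["type"], [])
        for handler in handlers:
            handler(event)
        return len(handlers)


broker = InMemoryBroker()
cart_totals: Dict[str, int] = {}
audit_log: List[str] = []


def update_cart(event: Event) -> None:
    """Consumer 1: keeps a running item count per cart."""
    payload = event["payload"]
    cart_id = payload["cart_id"]
    cart_totals[cart_id] = cart_totals.get(cart_id, 0) + payload["quantity"]


def record_audit(event: Event) -> None:
    """Consumer 2: independently records which event types occurred."""
    audit_log.append(event["type"])


broker.subscribe("ItemAddedToCart", update_cart)
broker.subscribe("ItemAddedToCart", record_audit)

# The producer publishes without knowing who, if anyone, is listening.
delivered = broker.publish(
    {"type": "ItemAddedToCart", "payload": {"cart_id": "c1", "quantity": 2}}
)
ignored = broker.publish(
    {"type": "SensorThresholdExceeded", "payload": {"sensor": "s9"}}
)
```

The second publish reaches no one because nothing subscribed to that event type, which illustrates the decoupling described above: the producer's code is identical whether zero or many consumers exist.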

The choice of event broker model (pub/sub vs. streaming) and topology (broker vs. mediator) represents a critical architectural decision point in EDA. This decision carries significant implications for system complexity, the guarantees around data consistency (e.g., eventual consistency versus stronger forms), and the ability to replay events for recovery or new analytics. A pub/sub model with a broker topology might offer simplicity for certain use cases but can struggle with complex event choreographies or guaranteed ordered delivery, which are often vital in enterprise scenarios like financial processing. Conversely, event streaming provides durability and replay capabilities but introduces its own set of complexities in management and consumption patterns. A mediator topology can centralize control and simplify error handling for complex, multi-step processes, but it risks becoming a performance bottleneck and a single point of failure if not designed carefully. Enterprises must, therefore, meticulously assess their specific non-functional requirements, including transactional integrity, auditability needs, and the intricacy of their business processes, before committing to a particular event broker strategy. A misalignment at this foundational level can lead to substantial future challenges or an inability to meet critical business or technical objectives.
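The replay capability that distinguishes event streaming from plain pub/sub can be sketched with an append-only log and per-consumer offsets. The `EventLog` class and consumer names below are hypothetical and deliberately simplified; platforms such as Apache Kafka add partitioning, durability, and consumer groups that are not modeled here.

```python
from typing import Any, Dict, List


class EventLog:
    """Toy append-only log; each consumer tracks its own read offset."""

    def __init__(self) -> None:
        self._events: List[Any] = []
        self._offsets: Dict[str, int] = {}

    def append(self, event: Any) -> int:
        self._events.append(event)
        return len(self._events) - 1  # position of the event in the log

    def poll(self, consumer: str) -> List[Any]:
        """Return events the consumer has not yet read and advance its offset."""
        start = self._offsets.get(consumer, 0)
        batch = self._events[start:]
        self._offsets[consumer] = len(self._events)
        return batch

    def rewind(self, consumer: str, offset: int = 0) -> None:
        """Reset a consumer's offset so that earlier events are replayed."""
        self._offsets[consumer] = offset


log = EventLog()
log.append("OrderPlaced:1")
log.append("OrderPlaced:2")

first_read = log.poll("billing")      # both events so far
log.append("OrderPlaced:3")
second_read = log.poll("billing")     # only the new event
late_joiner = log.poll("analytics")   # a new consumer still sees the full history
log.rewind("billing")
replayed = log.poll("billing")        # all three events again
```

Because events stay in the log after delivery, a consumer added later or one recovering from a failure can reprocess history, which a fire-and-forget pub/sub broker would not allow.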

Defining Data-Driven Architecture (DDA)

Data-Driven Architecture (DDA) is an architectural paradigm that positions data as the central and foremost driver in the design, development, operation, and strategic evolution of systems and services. The fundamental purpose of DDA is to systematically collect, manage, analyze, and leverage data to generate actionable insights, optimize performance, personalize user experiences, and inform strategic business decisions. In a DDA, data is not merely a byproduct of processes but is treated as a first-class citizen and a critical strategic asset.

Key Components of DDA:

A comprehensive DDA typically comprises the following interconnected components:

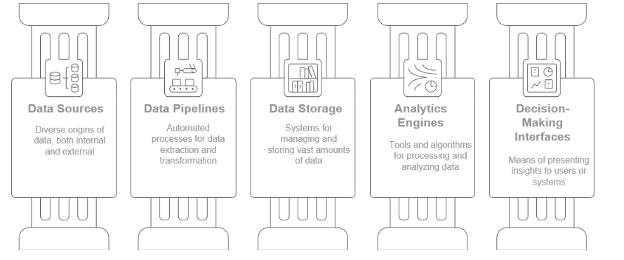

- Data Sources: These are the diverse origins from which data is generated or collected. They can be internal, such as Customer Relationship Management (CRM) systems, Enterprise Resource Planning (ERP) systems, and application logs, or external, including third-party data providers, social media feeds, IoT sensors, and public APIs.

- Data Pipelines (Data Ingestion & Transformation): These are the automated pathways and processes responsible for extracting data from various sources, transforming it into a usable format (cleansing, standardizing, enriching), and loading it into data storage systems. This encompasses Extract, Transform, Load (ETL) and Extract, Load, Transform (ELT) processes.

- Data Storage (Data Repositories): These are systems designed for storing and managing vast quantities of both raw and processed data. Common examples include:

  - Data Warehouses: Typically store structured, historical data optimized for business intelligence and reporting.

  - Data Lakes: Store vast amounts of raw data in various formats (structured, semi-structured, unstructured), providing flexibility for exploration and advanced analytics.

  - Data Lakehouses: A newer architectural pattern combining the benefits of data lakes (flexible storage for raw data) and data warehouses (data management features and query performance).

  - Architecture Repositories: In the context of EA itself, these repositories store metadata about the enterprise architecture and can be considered a DDA component for EA data.

- Analytics Engines: These are software tools, platforms, and algorithms used to process and analyze the stored data. This includes capabilities for querying, data mining, statistical analysis, machine learning model training and execution, as well as other advanced analytical techniques.

- Decision-Making Interfaces (Data Visualization & Access): These are the mechanisms by which insights derived from data analysis are presented to human users or consumed by automated systems. Examples include business intelligence dashboards, reporting tools, data visualization platforms, and APIs that expose analytical results or data products.

Figure: Components of Data-Driven Architecture
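The flow from data sources through a pipeline into storage and on to an analytics step can be sketched in a few lines. Everything here is an illustrative assumption: the sample records, the `orders` table, and the function names are hypothetical, and an in-memory SQLite database stands in for a real warehouse or lakehouse.

```python
import sqlite3

# Hypothetical raw records, standing in for CRM or application-log extracts.
raw_orders = [
    {"order_id": "1", "region": " east ", "amount": "120.50"},
    {"order_id": "2", "region": "WEST", "amount": "80.00"},
    {"order_id": "3", "region": "East", "amount": "not-a-number"},  # dirty row
    {"order_id": "4", "region": "west", "amount": "19.50"},
]


def transform(records):
    """Cleanse and standardize: normalize regions, drop unparseable amounts."""
    clean = []
    for rec in records:
        try:
            amount = float(rec["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        clean.append((int(rec["order_id"]), rec["region"].strip().lower(), amount))
    return clean


def load(rows):
    """Load cleaned rows into an in-memory SQLite table acting as the data store."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER, region TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


def revenue_by_region(conn):
    """Analytics step: aggregate revenue per region."""
    cursor = conn.execute(
        "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
    )
    return dict(cursor.fetchall())


warehouse = load(transform(raw_orders))
report = revenue_by_region(warehouse)  # what a dashboard or API might expose
```

Each function maps to one component in the list above: `raw_orders` is the source, `transform` and `load` form the pipeline, the SQLite table is the repository, and `revenue_by_region` plays the role of the analytics engine feeding a decision-making interface.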

The evolution of DDA from primarily supporting historical reporting and business intelligence to now deeply integrating predictive and prescriptive analytics, largely propelled by advancements in AI and ML, signifies a fundamental shift in its strategic value to the enterprise. Initially, DDA might have been perceived as a system for understanding past performance (“what happened?”). However, the infusion of AI/ML capabilities allows DDA to address “what will happen?” (predictive analytics) and, more powerfully, “what should we do about it?” (prescriptive analytics). This transforms DDA from a passive, backward-looking architecture into an active, forward-looking intelligence engine. Such an evolution necessitates different technological underpinnings, including sophisticated AI/ML platforms and infrastructure, and requires new skill sets within the organization, such as data scientists and ML engineers. Furthermore, it demands a much tighter integration loop between these advanced analytical insights and the core business processes to effectively act upon them, thereby significantly amplifying the overall strategic impact of a data-driven approach.

Comparing EDA and DDA: An Enterprise Architecture Perspective

While both Event-Driven Architecture (EDA) and Data-Driven Architecture (DDA) are crucial for modern enterprises, they serve distinct purposes, operate on different core principles, and manifest through different architectural characteristics. Understanding these differences is key for enterprise architects to effectively leverage their individual strengths and potential synergies.

While EDA is often highly operational and tactical, facilitating immediate responses to specific triggers, DDA can span both operational (e.g., real-time fraud analytics feeding into an EDA) and strategic domains (e.g., long-term business intelligence, market trend analysis, product development strategies).

A key differentiator between the two lies in the “granularity of trigger.” EDA is typically triggered by fine-grained, individual events, such as a single mouse click, a specific sensor reading, or a new message arrival. Each event is a distinct signal that can initiate a process. DDA, on the other hand, often initiates its processes or derives its triggers from aggregated data, identified patterns, or the outcomes of analytical models that have processed numerous data points. For example, an analytical process in DDA might be triggered by the availability of a complete daily sales dataset, or an alert might be generated when a predictive model identifies an anomaly based on a complex evaluation of multiple data streams over time. This distinction in trigger granularity directly influences the design of processing logic, the selection of underlying technologies (e.g., lightweight event handlers in EDA versus more computationally intensive analytical engines in DDA), and the expected immediacy and nature of the system’s response.

Core Architectural Differences (Data Flow, Processing Modes, Triggers)

The distinct purposes of EDA and DDA translate into fundamental differences in their core architectural characteristics:

| Architectural Characteristic | EDA | DDA |
| --- | --- | --- |
| Data Flow | In EDA, data flow is predominantly asynchronous. Event producers publish events to an event broker or message bus, often without knowing who the consumers are or if there are any consumers at all. The data within an event typically represents a “delta” (a change that occurred) or a notification, rather than a complete state snapshot. For example, an “InventoryUpdated” event might only contain the product ID and the new quantity, not the entire product record. | In DDA, data flow can be synchronous (e.g., an application querying a database via an API for specific information) or, more commonly for large-scale analytics, batch-oriented. Data moves through defined pipelines involving stages of collection from diverse sources, transformation (cleaning, aggregation, enrichment), loading into analytical stores, and subsequent analysis. The data here often represents a comprehensive state or a collection of historical states. |
| Processing Modes | EDA is characterized by real-time or near real-time processing of individual events or small, related batches of events. The emphasis is on low latency and immediate reaction. | DDA traditionally involved batch processing for large datasets, especially for data warehousing and historical reporting. However, there is a strong trend towards incorporating real-time stream processing for continuous intelligence and immediate analytical insights, blurring the lines somewhat with EDA’s processing mode but typically with a focus on analytical outcomes rather than direct operational reactions. |
| Triggers | EDA systems are triggered by discrete events that signify a change in state, a user action, a sensor input, or a message from another system. | DDA processes are often triggered by schedules (e.g., nightly ETL jobs), the availability of new data in a staging area, or direct analytical queries initiated by users or other systems. Actions within a DDA context can also be triggered by insights derived from the data analysis itself, such as a predictive model flagging a customer for a specific offer. |

Table: Fundamental architecture characteristics compared for EDA and DDA
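The “delta versus snapshot” distinction in the Data Flow row can be illustrated with the `InventoryUpdated` example from the table. The field names, the sample catalog, and the `apply_event` helper are assumptions made for this sketch, not a prescribed schema.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class InventoryUpdated:
    """Delta-style event: only the identifier and the field that changed."""
    product_id: str
    new_quantity: int


# Hypothetical full-state records, as an analytical or operational store might hold them.
catalog: Dict[str, dict] = {
    "sku-1": {"name": "Widget", "price": 9.99, "quantity": 10},
    "sku-2": {"name": "Gadget", "price": 24.00, "quantity": 3},
}


def apply_event(state: Dict[str, dict], event: InventoryUpdated) -> None:
    """Merge the delta into the consumer's copy of the state."""
    state[event.product_id]["quantity"] = event.new_quantity


apply_event(catalog, InventoryUpdated(product_id="sku-1", new_quantity=7))
```

The event carries two fields while the stored record carries the whole product, so the consumer needs its own copy of the state (or a lookup) to make sense of the change. That is one reason event schemas and analytical data models tend to be designed differently.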

The management of “state” also presents a significant divergence. EDA components, particularly event processors and handlers, are often designed to be stateless or to manage only short-lived state related to the event being processed. This design promotes scalability and resilience, as instances can be easily created or destroyed. For durable state, EDAs often rely on patterns such as event sourcing (where the state is reconstructed by replaying a sequence of events) or on external state stores. DDA, by its very nature, is deeply involved in managing and analyzing large volumes of historical state, typically persisted in data warehouses, data lakes, or data lakehouses. This fundamental difference in state handling has profound implications for technology choices (e.g., stream processing frameworks and lightweight, often stateless handlers in EDA versus distributed file systems and massively parallel processing (MPP) databases in DDA), data modeling approaches (event schemas focused on change versus relational or dimensional models for analytical state), and the prevalent consistency models (often eventual consistency in distributed EDAs versus potentially stronger consistency requirements within DDA’s core analytical repositories).
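Event sourcing, mentioned above as a way to obtain durable state from stateless handlers, can be sketched as a fold over an event history. The `Deposited` and `Withdrawn` events and the account-balance domain are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Deposited:
    amount: int  # in cents, to avoid floating-point rounding


@dataclass(frozen=True)
class Withdrawn:
    amount: int


def apply(balance: int, event) -> int:
    """Pure transition: previous state plus one event gives the next state."""
    if isinstance(event, Deposited):
        return balance + event.amount
    if isinstance(event, Withdrawn):
        return balance - event.amount
    raise TypeError(f"unknown event: {event!r}")


def replay(events: Iterable, initial: int = 0) -> int:
    """Rebuild the current state by folding over the event history."""
    balance = initial
    for event in events:
        balance = apply(balance, event)
    return balance


history: List = [Deposited(10_000), Withdrawn(2_500), Deposited(500)]
current_balance = replay(history)         # state after all events
balance_after_two = replay(history[:2])   # state as of an earlier point in time
```

Because the handler itself holds no state between calls, any instance can rebuild the balance from the log. Replaying a prefix of the history also yields the state at an earlier moment, which is useful for audits.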

Typical Use Cases and Scenarios

The distinct characteristics of EDA and DDA make them suitable for different types of enterprise scenarios:

EDA Use Cases:

- Real-time Monitoring and Alerts: Detecting anomalies in financial transactions for fraud prevention, monitoring system health and performance metrics, or tracking logistics in real-time.

- Internet of Things (IoT): Ingesting and processing high-velocity data streams from sensors and devices to trigger actions, log data, or perform edge analytics.

- Microservices Communication: Enabling asynchronous, loosely coupled communication between independent microservices, allowing them to evolve and scale independently.

- E-commerce Platforms: Managing real-time order processing, inventory updates, personalized notifications (e.g., shipping status), and reacting to customer browsing behavior.

- Financial Trading Systems: Processing market data updates, executing trades, and managing order books in real-time.

- Mobile Push Notifications: Delivering timely alerts and updates to users based on specific triggers or user actions.

DDA Use Cases:

- Business Intelligence and Reporting: Consolidating data from various sources to generate dashboards, reports, and visualizations that provide insights into business performance.

- Machine Learning and Artificial Intelligence: Preparing and feeding large, high-quality datasets to train, test, and deploy ML/AI models for tasks like prediction, classification, and natural language processing.

- ETL (Extract, Transform, Load) and Data Warehousing: Building and maintaining data pipelines to extract data from operational systems, transform it for analytical consistency, and load it into data warehouses or data lakes for querying and analysis.

- Healthcare Data Analysis: Analyzing patient records, clinical trial data, and public health information to improve diagnostics, treatment outcomes, and population health management.

- Predictive Maintenance: Analyzing sensor data and equipment logs to predict potential failures and schedule maintenance proactively, minimizing downtime in manufacturing or other industries.

While these use cases often appear distinct, a growing trend involves their convergence. For instance, a sophisticated fraud detection system, a classic EDA use case, becomes significantly more potent when advanced machine learning models augment its real-time event processing capabilities. These models, developed and trained within a DDA framework using vast historical transaction data, can identify subtle and novel fraud patterns that rule-based EDA systems might miss. In this scenario, the event stream from the EDA provides the immediate data (e.g., a new transaction attempt), and the DDA-powered ML model provides the sophisticated intelligence to interpret that event in real-time and flag it as potentially fraudulent. This implies that modern, complex systems frequently demand a hybrid architectural approach. Enterprise architectures must be designed to facilitate this interplay, for example, by creating data pipelines that can efficiently serve both real-time event consumers (EDA) and the batch or stream-based analytical processes of DDA.
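The hybrid pattern in the fraud example can be sketched as two steps: an offline step that learns from historical data, and an online event handler that applies the result to each incoming transaction. This is a simplification under stated assumptions. A per-customer statistical threshold stands in for a trained ML model, and the sample data, function names, and event shape are all hypothetical.

```python
from statistics import mean, stdev
from typing import Callable, Dict, List

# Hypothetical historical transaction amounts per customer (the DDA side).
history: Dict[str, List[float]] = {
    "alice": [20.0, 25.0, 22.0, 30.0, 18.0],
    "bob": [200.0, 220.0, 180.0, 210.0, 190.0],
}


def train_thresholds(data: Dict[str, List[float]], k: float = 3.0) -> Dict[str, float]:
    """Offline step: per-customer limit of mean + k sample standard deviations."""
    return {cust: mean(vals) + k * stdev(vals) for cust, vals in data.items()}


def make_handler(thresholds: Dict[str, float], alerts: List[dict]) -> Callable[[dict], None]:
    """Online step: build a handler that scores each transaction event on arrival."""
    def handle(event: dict) -> None:
        limit = thresholds.get(event["customer"])
        if limit is not None and event["amount"] > limit:
            alerts.append(event)  # flag as potentially fraudulent
    return handle


thresholds = train_thresholds(history)
alerts: List[dict] = []
on_transaction = make_handler(thresholds, alerts)

# Simulated event stream (the EDA side).
for event in [
    {"customer": "alice", "amount": 24.0},
    {"customer": "alice", "amount": 500.0},
    {"customer": "bob", "amount": 230.0},
]:
    on_transaction(event)
```

Only the 500.00 transaction for `alice` is flagged. The 230.00 transaction for `bob` is larger than any of Alice's normal amounts but sits within Bob's own historical range. A single fixed rule could not make that distinction, which is the kind of context the analytical side contributes.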


The author is a seasoned technologist who is enthusiastic about pragmatic business solutions, influencing thought leadership. He is a sought-after keynote speaker, evangelist, and tech blogger. He is also a digital transformation business development executive at a global technology consulting firm, an Angel investor, and a serial entrepreneur with an impressive track record.

The article expresses the view of Bala Kalavala, a Chief Architect & Technology Evangelist, a digital transformation business development executive at a global technology consulting firm. The opinions expressed in this article are his own and do not necessarily reflect the views of his organization.