Summary

In the race for AI-driven transformation, enterprise leaders face a critical decision: rely solely on proprietary large language models (LLMs) or harness the power and flexibility of the open-source ecosystem. This article provides a strategic guide for C-level and leadership executives within Fortune 2000 companies, demystifying the intersection of open-source LLMs and advanced prompt engineering. We will explore how these technologies are not merely a cost-saving alternative but a powerful catalyst for innovation, data security, and competitive differentiation. By examining real-world applications across finance, retail, and healthcare, we’ll illustrate how a nuanced understanding of prompting techniques can unlock bespoke solutions, optimize operational efficiency, and drive a new wave of business value. This is a framework for leaders to shift from passive AI adoption to active, strategic AI mastery.

Introduction: From AI Hype to Strategic Reality

For many enterprise leaders, the rise of generative AI has been a whirlwind of headlines and ambitious promises. While proprietary models from tech giants have dominated the conversation, a parallel and equally powerful movement is underway: the rapid maturation of the open-source LLM landscape. Models like Llama, Mistral, and Falcon are now approaching or even surpassing the performance of their closed-source counterparts on specific tasks, offering unparalleled control and customization. However, the true strategic advantage lies not just in the choice of the model, but in the skill of communicating with it. This is where prompt engineering becomes a C-level imperative—a discipline that bridges technical capability with business intent.

This article outlines how to build a robust, future-proof AI strategy by leveraging open-source LLMs and a targeted approach to prompt engineering. We’ll show you how leading enterprises are moving beyond basic “chatbots” to build sophisticated, domain-specific AI applications that deliver tangible ROI. This is a playbook for harnessing AI’s full potential, ensuring your organization is not just an AI consumer, but a true AI innovator.

The Open-Source LLM Advantage: A Strategic Comparison

The decision to adopt open-source LLMs is a strategic one, offering key advantages that are particularly attractive to large, data-sensitive enterprises. The comparison below highlights why many companies are making the shift.

| Feature | Proprietary (Closed-Source) LLMs | Open-Source LLMs | Strategic Advantage |
|---|---|---|---|
| Data Control & Privacy | Your data is processed by a third party, raising significant concerns for regulated industries (Finance, Healthcare). | Models can be hosted on-premises or within a private cloud, so your sensitive data never has to leave your environment. | Mitigated Risk: Supports compliance with data regulations (GDPR, HIPAA) and protection of intellectual property. |
| Customization | Limited fine-tuning and adaptation to specific business needs. The model’s core architecture is a black box. | Complete control over the model’s architecture, weights, and fine-tuning. Can be adapted for highly specific, niche tasks. | Competitive Edge: Build proprietary, in-house AI capabilities that are unique to your business processes. |
| Cost | Transactional, usage-based fees can scale unpredictably with high volume. | Upfront infrastructure investment with predictable operational costs. No per-token fees. | Predictable ROI: Scale your AI initiatives without unexpected expenses, leading to clearer budget forecasting. |
| Innovation Speed | Dependent on the vendor’s roadmap. Feature updates and bug fixes are out of your control. | Access to a global community of developers. Rapid iteration, bug fixes, and continuous improvement from a diverse talent pool. | Agility: The ability to innovate and deploy new features at the pace of your business, not your vendor. |
| Vendor Lock-in | High risk of being locked into a single vendor’s ecosystem, making future migration difficult and costly. | Freedom to switch between different models and platforms, ensuring long-term flexibility. | Business Continuity: Avoids dependency on a single provider and future-proofs your technology stack. |
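The cost row of the comparison can be made concrete with a simple break-even calculation. The sketch below is illustrative only: the function names and every figure in it (monthly infrastructure cost, per-million-token prices) are hypothetical placeholders, not vendor pricing, and should be replaced with your own numbers.

```python
import math


def monthly_cost_api(millions_of_tokens: float, api_price_per_million: float) -> float:
    """Usage-based pricing: cost grows linearly with volume."""
    return millions_of_tokens * api_price_per_million


def monthly_cost_self_hosted(
    millions_of_tokens: float,
    fixed_monthly_cost: float,
    marginal_cost_per_million: float,
) -> float:
    """Self-hosting: a fixed infrastructure cost plus a small marginal cost."""
    return fixed_monthly_cost + millions_of_tokens * marginal_cost_per_million


def breakeven_millions_of_tokens(
    fixed_monthly_cost: float,
    marginal_cost_per_million: float,
    api_price_per_million: float,
) -> float:
    """Monthly volume (in millions of tokens) above which self-hosting is cheaper.

    Returns math.inf if the API is never more expensive per token.
    """
    saving_per_million = api_price_per_million - marginal_cost_per_million
    if saving_per_million <= 0:
        return math.inf
    return fixed_monthly_cost / saving_per_million


# Hypothetical inputs: 18,000 per month for infrastructure, 1.0 per million
# tokens in marginal self-hosting cost, 10.0 per million tokens via an API.
volume = breakeven_millions_of_tokens(18_000.0, 1.0, 10.0)
print(f"Break-even at {volume:.0f} million tokens per month")
```

Below the break-even volume the usage-based option is cheaper; above it, the fixed investment pays off. The model deliberately ignores staffing, upgrades, and utilization, which a real business case would need to include.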

Prompt Engineering: The Language of Value Creation

Prompt engineering is not just about writing good queries; it’s about structuring communication to guide an LLM to produce a desired, high-quality output. It is the critical layer between business logic and AI capability. For executives, understanding the core concepts of prompting is essential for directing strategy and evaluating the ROI of AI projects.

| Prompting Technique | Description | Strategic Application | Common Business Use Case |
|---|---|---|---|
| Zero-Shot & Few-Shot Prompting | Zero-shot provides no examples, while few-shot provides a few examples within the prompt to guide the model. | Use for initial proof-of-concept and tasks where high accuracy isn’t critical. Few-shot is a quick way to improve results without fine-tuning a model. | Zero-shot for a basic product Q&A chatbot. Few-shot for sentiment analysis of customer reviews. |
| Chain-of-Thought (CoT) Prompting | Instructs the model to “think step-by-step,” breaking down a complex problem into intermediate reasoning steps. | Essential for tasks requiring logical deduction, multi-step problem-solving, and data analysis. | Analyzing a series of financial reports to identify discrepancies or trends. Supporting a diagnosis based on multiple symptoms and test results. |
| Retrieval-Augmented Generation (RAG) | The LLM retrieves information from a private, external knowledge base (e.g., company documents, databases) before generating a response. | Critical for enterprise applications that need to access proprietary, up-to-the-minute information. Reduces model hallucinations and improves accuracy by grounding answers in retrieved sources. | Building an internal knowledge base for legal documents, sales playbooks, or medical research. |
| Role-Playing Prompting | Assigns a specific persona to the LLM (e.g., “Act as a financial analyst,” “You are a customer service agent”). | Promotes consistent tone, style, and domain focus for domain-specific tasks. | Generating discharge summaries from the perspective of a medical professional. Creating market analysis reports tailored for a specific audience. |
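To make the techniques in the table less abstract, here is a minimal sketch of how each one shapes the text sent to a model. The function names and prompt wording are illustrative assumptions, not a standard API; the output is just a string that could be passed to any LLM.

```python
from typing import Sequence, Tuple


def zero_shot(task: str, text: str) -> str:
    """Zero-shot: the instruction alone, with no worked examples."""
    return f"{task}\n\nInput: {text}\nOutput:"


def few_shot(task: str, examples: Sequence[Tuple[str, str]], text: str) -> str:
    """Few-shot: the same instruction, preceded by a handful of solved examples."""
    shots = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{task}\n\n{shots}\n\nInput: {text}\nOutput:"


def chain_of_thought(question: str) -> str:
    """Chain-of-thought: ask for intermediate reasoning before the answer."""
    return (
        f"{question}\n\n"
        "Think step by step, then state the final answer on its own line."
    )


def with_role(role: str, prompt: str) -> str:
    """Role-playing: prepend a persona to any of the prompts above."""
    return f"You are {role}.\n\n{prompt}"


# Example: few-shot sentiment analysis of customer reviews, framed with a persona.
prompt = with_role(
    "a customer insights analyst",
    few_shot(
        "Classify the sentiment of the review as positive or negative.",
        [("Great service", "positive"), ("Slow delivery", "negative")],
        "Loved it",
    ),
)
print(prompt)
```

The techniques compose: a role can wrap a few-shot prompt, and a chain-of-thought instruction can be added to either. Retrieval-augmented generation additionally needs a knowledge source, so it is not covered by these string templates.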

Architecting for the Future: A CIO’s Blueprint

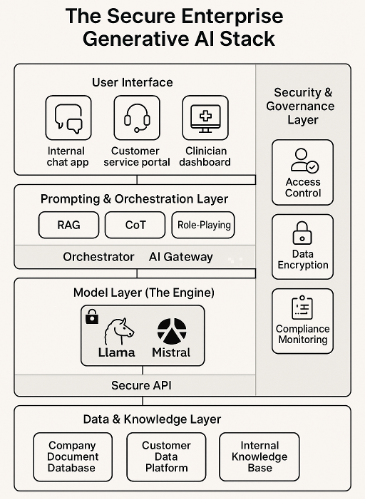

The Secure Enterprise Generative AI Stack offers a layered architectural approach that balances innovation with governance, enabling organizations to deploy AI solutions with confidence. Instead of relying on a monolithic, black-box API, this approach provides a modular, secure, and scalable AI infrastructure. The architecture is organized around the flow of data and the separation of concerns across layers, showing how open-source models can be integrated without compromising security.

Figure: The Secure Enterprise Generative AI Stack

- User Interface Layer: At the top of the stack, this is where generative AI meets its users. These interfaces are tailored to specific roles and workflows, ensuring AI is embedded where it adds the most value.

- Prompting & Orchestration Layer: This layer is the brain of the stack, where user intent is transformed and routed intelligently. It ensures that AI responses are not only accurate but also aligned with enterprise goals and policies.

- Model Layer: At the heart of the stack, this layer powers the generative capabilities. It is encapsulated as a Secure LLM Service, ensuring that no sensitive data leaves the enterprise boundary.

- Data & Knowledge Layer: This is the most critical layer for the organization, as it determines the quality and relevance of AI outputs. It transforms generative AI from a generic tool into a strategic knowledge assistant.

- Security & Governance Layer: Running vertically across all layers, this layer ensures enterprise-grade protection and compliance. It is not an afterthought; it is foundational to enterprise AI adoption.

By adopting this layered architecture, enterprises can move beyond experimentation and into secure, scalable, and sustainable AI transformation.
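The separation of concerns across these layers can be sketched in a few lines of code. This is a toy illustration under stated assumptions, not a reference implementation: the function names are hypothetical, retrieval uses naive word overlap where a real system would typically use a search index or vector store, redaction covers only e-mail addresses, and the model is any callable standing in for a self-hosted LLM service.

```python
import re
from typing import Callable, List

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text: str) -> str:
    """Security & governance: mask e-mail addresses before text reaches the model."""
    return EMAIL.sub("[REDACTED_EMAIL]", text)


def _words(text: str) -> set:
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Data & knowledge: return up to k documents sharing words with the query."""
    query_words = _words(query)
    ranked = sorted(documents, key=lambda d: len(query_words & _words(d)), reverse=True)
    return [d for d in ranked[:k] if query_words & _words(d)]


def build_prompt(question: str, context: List[str]) -> str:
    """Prompting & orchestration: combine retrieved context with the question."""
    sources = "\n".join(f"- {c}" for c in context) or "- (no matching documents)"
    return (
        "Answer using only the sources below.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}\nAnswer:"
    )


def answer(question: str, documents: List[str], llm: Callable[[str], str]) -> str:
    """Run one request through the layers; `llm` stands in for the model layer."""
    safe_question = redact(question)
    context = retrieve(safe_question, documents)
    return llm(build_prompt(safe_question, context))


# Usage with a stand-in model that simply echoes the prompt it receives.
docs = ["Refunds are processed within 14 days.", "The cafeteria opens at 8 am."]
print(answer("How long do refunds take? Reply to jane@example.com", docs, lambda p: p))
```

Because each layer is a separate function, the model, the retrieval method, or the redaction policy can be replaced independently, which is the practical benefit of the layered design described above.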

Future Trends: What’s Next for Enterprise AI Stacks?

As the AI landscape evolves, so too will the architecture. Here are key trends shaping the future:

- Multi-Agent Systems: AI stacks will increasingly support collaborative agents that can reason, delegate tasks, and interact with each other—enabling more complex workflows and automation.

- Hybrid AI Models: Enterprises will blend symbolic reasoning with LLMs to improve explainability, reliability, and compliance—especially in regulated industries.

- Modular AI Components: Composable architectures will allow organizations to plug-and-play different models, tools, and data sources, enabling faster innovation and vendor flexibility.

- AI Risk Management Platforms: Expect the rise of AI-specific GRC (Governance, Risk, and Compliance) platforms that monitor model behavior, bias, drift, and hallucinations in real time.

- Federated & Edge AI: To meet data sovereignty and latency requirements, AI stacks will increasingly support federated learning and edge inference—especially in healthcare, finance, and manufacturing.

- Synthetic Data & Simulation: Synthetic data generation will become a core capability for training, testing, and validating AI models without compromising privacy.

Conclusion

The convergence of powerful open-source LLMs and sophisticated prompting techniques marks a pivotal moment in enterprise technology. This is not about choosing between good and bad models; it’s about choosing a strategic path that aligns with your business’s core values of security, control, and innovation. By moving from a “black box” mentality to a “toolbox” approach, you can build proprietary AI capabilities that are a true source of competitive advantage.

References

- Mistral Frontier AI: https://mistral.ai/

- Llama: https://www.llama.com/

- AI Governance Frameworks: https://www.ai21.com/knowledge/ai-governance-frameworks/

- Multi-Agent and Multi-LLM Architecture: https://collabnix.com/multi-agent-and-multi-llm-architecture-complete-guide-for-2025/

- Open-Source LLMs you can actually deploy: https://www.sentisight.ai/open-source-llms-you-can-actually-deploy/

Bala Kalavala is a seasoned technologist enthusiastic about pragmatic business solutions influencing thought leadership. He is a sought-after keynote speaker, evangelist, and tech blogger. He is also a Chief Architect & Technology Evangelist and a digital transformation business development executive at a global technology consulting firm, an angel investor, and a serial entrepreneur with an impressive track record. The opinions expressed in this article are his own and do not necessarily reflect the views of his organization.
