How neuroscience-based frameworks can help organizations manage the psychological impact of AI integration.

By Dr. Sarah Dyson

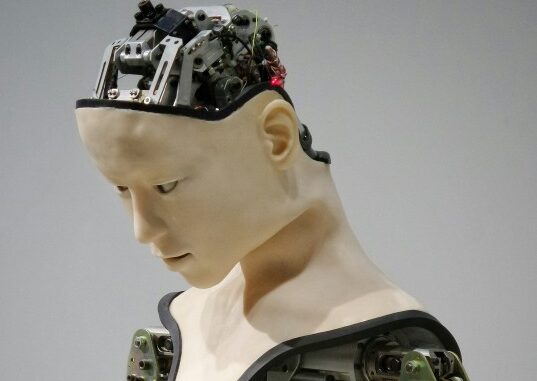

As artificial intelligence becomes more embedded in enterprise workflows, organizations face a crucial challenge that goes beyond technical implementation: managing how people psychologically respond to AI integration. Drawing from David Rock’s SCARF model—a neuroscience-based framework identifying five key areas of social experience—this article offers governance leaders practical tools to address the often-overlooked emotional aspects of digital transformation.

The integration of AI into organizational workflows triggers fundamental psychological responses related to Status, Certainty, Autonomy, Relatedness, and Fairness. By understanding and proactively addressing these SCARF domains, governance professionals can develop more effective AI lifecycle management protocols and conduct meaningful stakeholder impact assessments that account for emotional attachment risks in long-term AI collaborations.

The Governance Challenge Goes Beyond Technical Implementation

Traditional IT governance frameworks excel at managing technical risks, compliance requirements, and operational metrics. However, they often fall short in addressing the psychological dimensions of AI adoption—dimensions that can make or break digital transformation initiatives.

Recent research indicates that up to 30% of AI project outcomes are negatively affected by inadequate consideration of human factors during implementation. When stakeholders experience AI systems as threats to their status, autonomy, or sense of fairness, even technically successful implementations can face significant resistance, reduced adoption, and suboptimal performance.

The SCARF Framework: A Neuroscience-Based Approach

Developed by David Rock in 2008, the SCARF model provides a comprehensive framework for understanding human social behavior through five critical domains that trigger either threat or reward responses in the brain. These domains encompass Status (our perceived importance relative to others), Certainty (our ability to predict future outcomes), Autonomy (our sense of control over events), Relatedness (our sense of safety and connection with others), and Fairness (our perception of equitable treatment). The significance of this framework lies in its neurological foundation.

These five social domains activate the same neural pathways that govern our physical survival responses, which explains why perceived social threats can generate reactions as intense as those triggered by physical danger. For governance professionals overseeing AI implementations, this neurological reality necessitates a fundamental shift in approach—successful AI integration requires deliberate attention to psychological safety alongside technical performance, ensuring that digital transformation initiatives support rather than undermine the basic human needs that drive workplace behavior and adoption.

Governance Framework Evolution: The AI Team Member Lifecycle

One of the most significant shifts in AI governance thinking involves conceptualizing AI systems as team members rather than mere tools. This reframing has profound implications for how we structure governance protocols around AI lifecycle management.

Consider a scenario where an AI system has been collaborating with a marketing team for eighteen months, learning team preferences, adapting to workflow patterns, and becoming integral to daily operations. When organizational changes require transitioning to a different AI system, the psychological impact can be substantial, comparable to losing a valued team member.

The Four Phases of AI Team Member Lifecycle Management

Phase 1: Introduction and Onboarding

The foundation of ethical AI integration begins with how organizations introduce AI systems to their teams. From a SCARF perspective, this critical phase requires positioning AI as augmenting rather than replacing human expertise to protect status, and providing comprehensive documentation of AI capabilities, limitations, and decision-making processes to build certainty. Organizations must also maintain meaningful human control over AI recommendations and actions to preserve autonomy, create deliberate opportunities for teams to develop positive working relationships with AI systems to foster relatedness, and establish transparent criteria for AI performance evaluation to ensure fairness.

Governance Protocol: Develop standardized “AI team member profiles” that include ethical guidelines, capability descriptions, and human oversight requirements. Require formal introduction meetings where teams can understand how AI will enhance their work rather than threaten their roles.
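For teams that keep governance artifacts in a shared repository, an AI team member profile can be held as a structured record so that gaps are visible before the introduction meeting. The Python sketch below is illustrative only: the class `AITeamMemberProfile`, its field names, and the `missing_sections` check are hypothetical, and the separate `limitations` field is an assumption borrowed from the Phase 1 emphasis on documenting limitations.

```python
from dataclasses import dataclass, field


@dataclass
class AITeamMemberProfile:
    """Hypothetical 'AI team member profile' record; field names are illustrative."""

    system_name: str
    capabilities: list[str] = field(default_factory=list)        # what the AI can do (Certainty)
    limitations: list[str] = field(default_factory=list)         # assumed field: known gaps (Certainty)
    ethical_guidelines: list[str] = field(default_factory=list)  # rules the deployment must follow
    human_oversight: list[str] = field(default_factory=list)     # review and override requirements (Autonomy)

    def missing_sections(self) -> list[str]:
        """Return the names of profile sections that are still empty."""
        sections = {
            "capabilities": self.capabilities,
            "limitations": self.limitations,
            "ethical_guidelines": self.ethical_guidelines,
            "human_oversight": self.human_oversight,
        }
        return [name for name, entries in sections.items() if not entries]


# Invented example profile for a hypothetical marketing assistant.
profile = AITeamMemberProfile(
    system_name="Campaign drafting assistant",
    capabilities=["Drafts first-pass campaign copy"],
    human_oversight=["A marketer approves every draft before publication"],
)
print(profile.missing_sections())  # ['limitations', 'ethical_guidelines']
```

In this invented example, the profile would be flagged as incomplete because no limitations or ethical guidelines have been recorded yet. Whether an incomplete profile should postpone the introduction meeting is a policy decision for each organization.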

Phase 2: Integration and Collaboration

As AI systems become embedded in daily workflows, governance frameworks must actively monitor and support the evolving human-AI relationships. Organizations can create mechanisms for publicly recognizing successful human-AI collaborations while implementing regular “performance reviews” that explain how AI decision-making evolves. They should also establish clear protocols for human override capabilities, foster a team identity that includes AI as a valued contributor, and conduct regular bias audits to ensure equitable AI performance across different user groups.

Governance Protocol: Implement quarterly “AI team health checks” that assess not just technical performance metrics, but also team satisfaction, trust levels, and psychological safety indicators.

Phase 3: Evolution and Adaptation

Long-term AI collaborations require governance frameworks capable of adapting to changing relationships and capabilities. During this maturity phase, organizations should acknowledge when teams develop expertise in AI collaboration and leverage this knowledge organizationally, provide advance notice of AI updates or capability changes, and gradually increase AI authority in areas where trust is established. Leaders can oversee the development of sophisticated human-AI collaboration patterns while continuously refining bias detection and mitigation strategies.

Governance Protocol: Create “AI collaboration maturity models” that recognize teams’ growing expertise in human-AI partnership and provide pathways for sharing best practices across the organization.

Phase 4: Transition and Legacy Management

Perhaps the most overlooked aspect of AI governance involves managing transitions when AI systems are upgraded, replaced, or retired. Legacy management ensures that knowledge gained through AI collaboration is valued and preserved, communicates transition timelines and expectations for new systems clearly, and gives teams a choice in how transitions occur, along with appropriate training. Organizations must acknowledge the loss of familiar AI collaborators and support adaptation to new ones, while ensuring that transition processes don’t disproportionately affect any particular group.

Governance Protocol: Develop “AI legacy documentation” that captures the institutional knowledge embedded in long-term human-AI collaborations and ensures this knowledge informs future AI implementations.

Stakeholder Impact Assessment: Measuring Emotional Attachment

Conventional stakeholder impact assessments focus on operational changes, training requirements, and performance metrics. However, they rarely address the emotional dimensions of AI integration, particularly the risk of excessive dependence on, or inappropriate attachment to, AI systems.

The Emotional Attachment Risk Framework

Based on SCARF principles, organizations should assess stakeholder groups across three critical dimensions that reveal the depth and nature of human-AI relationships within the workplace.

| Assessment Domain | Focus Areas | Key Evaluation Points | Potential Findings |
| --- | --- | --- | --- |
| Attachment Depth | Centrality of AI to work processes, reliance on AI for decision-making confidence, and personalization of AI interactions | Signs of deep operational dependency and of vulnerability during system transitions or failures | Operational dependency, vulnerability |
| Psychological Investment | Whether stakeholders view AI as team members, whether professional identity is tied to AI collaboration, and emotional reactions to AI unavailability | Whether self-concept and professional confidence are intertwined with AI performance | Extent of psychological investment |
| Transition Readiness | Preparedness for AI system changes or retirement, support structures for transitions, and workflow resilience | Readiness for system changes, adequacy of support structures, and workflow resilience if AI is removed | Potential risks, mitigation strategies |

Practical Assessment Tools

The SCARF Impact Matrix provides a systematic method for evaluating how different stakeholder groups will likely respond to AI implementation or changes. This assessment tool helps governance professionals prioritize support resources and develop targeted mitigation strategies based on psychological risk factors.

Tool 1: The SCARF Impact Matrix

Impact Level Definitions

- High Impact: Significant threat or reward response likely; requires immediate attention and mitigation strategies.

- Medium Impact: Moderate response expected; benefits from proactive management and monitoring.

- Low Impact: Minimal response anticipated; standard communication and support sufficient.

Sample Assessment Matrix

| Stakeholder Group | Status Impact | Certainty Impact | Autonomy Impact | Relatedness Impact | Fairness Impact | Overall Risk Level |
| --- | --- | --- | --- | --- | --- | --- |
| Marketing Team | Medium | High | Low | Medium | Low | Medium |
| Data Analytics | High | Medium | Medium | High | Medium | High |
| Customer Service | Low | High | High | Medium | High | Medium |

Matrix Analysis

- Marketing Team (Medium Risk)
  - Shows high certainty concerns, likely due to unclear AI impact on campaign effectiveness and ROI measurement. The medium status impact suggests concern that AI could diminish the value of the team's creative expertise.
  - Recommended Actions: Provide detailed AI capability documentation and position AI as enhancing rather than replacing marketing creativity.

- Data Analytics (High Risk)
  - Displays high status and relatedness impacts, indicating concerns about AI replacing analytical expertise and disrupting team dynamics. Multiple medium-to-high impacts across domains suggest this group requires comprehensive change management support.
  - Recommended Actions: Develop a specialized onboarding process, create showcases of data scientist-AI collaboration, and establish clear human oversight protocols.

- Customer Service (Medium Risk)
  - High certainty and autonomy impacts reflect concerns about unpredictable AI responses to customers and loss of decision-making control. The high fairness impact suggests worry about consistent service quality across different customer interactions.
  - Recommended Actions: Implement robust human override capabilities and transparent AI decision-making processes.

This matrix should be customized for each organization’s specific stakeholder groups and AI implementation context, with regular reassessment as AI integration matures.
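Once ratings are recorded, the impact level definitions can be applied mechanically to decide where mitigation is needed first. The Python sketch below assumes each group is rated with the same three labels used above; it reads the sample ratings and lists each group's High-impact domains, which the definitions say require immediate attention. The names `MATRIX`, `domains_at_level`, and `immediate_attention` are hypothetical. It deliberately does not compute the Overall Risk Level column, because no formula for that column is given and it appears to reflect assessor judgment rather than a fixed rule.

```python
# SCARF domains in the column order of the sample matrix.
DOMAINS = ("Status", "Certainty", "Autonomy", "Relatedness", "Fairness")

# Sample ratings copied from the matrix above (Overall Risk Level omitted).
MATRIX = {
    "Marketing Team": ("Medium", "High", "Low", "Medium", "Low"),
    "Data Analytics": ("High", "Medium", "Medium", "High", "Medium"),
    "Customer Service": ("Low", "High", "High", "Medium", "High"),
}


def domains_at_level(ratings, level):
    """Return the SCARF domains whose rating equals the given impact level."""
    return [domain for domain, rating in zip(DOMAINS, ratings) if rating == level]


def immediate_attention(matrix):
    """Map each stakeholder group to its High-impact domains, which the
    impact level definitions mark for immediate attention and mitigation."""
    return {group: domains_at_level(ratings, "High") for group, ratings in matrix.items()}


for group, domains in immediate_attention(MATRIX).items():
    print(f"{group}: {', '.join(domains)}")
```

Applied to the sample matrix, this surfaces Certainty for the marketing team, Status and Relatedness for data analytics, and Certainty, Autonomy, and Fairness for customer service, matching the domains highlighted in the matrix analysis. Calling `domains_at_level` with "Medium" would similarly list the domains that benefit from proactive monitoring.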

Tool 2: Attachment Indicator Survey

Develop regular pulse surveys that measure emotional attachment indicators.

| Statement |
| --- |
| I feel confident in my work when AI systems are available |
| I would describe our AI as a valuable team member |
| I feel anxious when AI systems are down or unavailable |
| I trust AI recommendations more than my own initial instincts |
| I worry about what would happen if we lost access to our AI systems |
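The survey does not prescribe a response scale or scoring rule. As one hedged possibility, the Python sketch below assumes a five-point agreement scale (1 = strongly disagree, 5 = strongly agree), averages each statement across respondents, and flags statements whose average reaches a hypothetical threshold of 4.0. Because every statement is worded so that agreement signals stronger attachment or dependence, a flag marks a topic for follow-up conversation rather than a problem in itself. The threshold, the function names, and the sample responses are all illustrative.

```python
from statistics import mean

# Statements from the Attachment Indicator Survey above.
STATEMENTS = [
    "I feel confident in my work when AI systems are available",
    "I would describe our AI as a valuable team member",
    "I feel anxious when AI systems are down or unavailable",
    "I trust AI recommendations more than my own initial instincts",
    "I worry about what would happen if we lost access to our AI systems",
]

# Assumption: 1 = strongly disagree ... 5 = strongly agree.
SCALE_MIN, SCALE_MAX = 1, 5
# Hypothetical cut-off for flagging a statement for follow-up discussion.
FLAG_THRESHOLD = 4.0


def item_means(responses):
    """Average each statement's score across respondents.

    `responses` is a list of per-respondent score lists, one score per statement.
    """
    for scores in responses:
        if len(scores) != len(STATEMENTS):
            raise ValueError("each response needs one score per statement")
        if any(not SCALE_MIN <= s <= SCALE_MAX for s in scores):
            raise ValueError("scores must lie on the 1-5 scale")
    # zip(*responses) regroups the scores by statement instead of by respondent.
    return [mean(column) for column in zip(*responses)]


def flagged_items(responses, threshold=FLAG_THRESHOLD):
    """Return (statement, mean) pairs whose mean meets or exceeds the threshold."""
    return [
        (statement, avg)
        for statement, avg in zip(STATEMENTS, item_means(responses))
        if avg >= threshold
    ]


# Invented responses from three team members, for illustration only.
pulse = [
    [5, 4, 4, 2, 5],
    [4, 5, 3, 2, 4],
    [5, 4, 5, 3, 4],
]
for statement, avg in flagged_items(pulse):
    print(f"{avg:.2f}  {statement}")
```

Tracking these item averages from one pulse survey to the next is likely more informative than any single reading, since the aim is to notice shifts in attachment over the AI lifecycle.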

Tool 3: Transition Readiness Assessment

Assess organizational preparedness for AI lifecycle changes.

| Assessment Area | Key Question |
| --- | --- |
| Knowledge documentation | Is institutional knowledge tied to specific AI systems? |
| Skill diversification | Do teams maintain capabilities that are independent of AI support? |
| Emotional preparedness | How do stakeholders react to discussions about AI changes? |
| Support systems | What resources are available for managing AI-related transitions? |

Implementation Roadmap

Putting SCARF-Based AI Governance into Practice

Phase 1: Foundation Building (Months 1-2)

Key Activities:

1. Conduct organizational SCARF awareness training for governance leaders.
2. Develop AI team member lifecycle protocols.
3. Create stakeholder impact assessment templates.

Deliverables:

1. SCARF training materials and governance team certification
2. AI lifecycle governance framework documentation
3. Assessment tool templates ready for deployment

Success Metrics:

1. 100% governance team training completion
2. Framework documentation approved by leadership
3. Assessment tools validated and ready for use

Phase 2: Pilot Implementation (Months 3-4)

Key Activities:

1. Select 2-3 AI implementations for pilot SCARF-based governance.
2. Implement baseline stakeholder impact assessments.
3. Begin regular SCARF monitoring of AI integrations.

Deliverables:

1. Pilot program selection criteria and baseline assessment reports
2. SCARF monitoring dashboard with initial metrics
3. Stakeholder feedback collection system

Success Metrics:

1. All selected pilot programs (2-3) successfully launched
2. Baseline assessments completed for all pilot groups
3. Monthly SCARF metrics collection established
4. Initial stakeholder satisfaction scores documented

Phase 3: Scaling and Refinement (Months 5-6)

Key Activities:

1. Expand SCARF-based governance to all AI implementations.
2. Refine assessment tools based on pilot feedback.
3. Develop organizational playbooks for common SCARF scenarios.

Deliverables:

1. Refined assessment tools (version 2.0)
2. SCARF scenario playbooks for top use cases
3. Expanded governance coverage across all AI systems
4. Updated training materials incorporating lessons learned

Success Metrics:

1. 100% of AI implementations covered by SCARF governance
2. Tool refinement completed based on pilot feedback
3. Playbooks developed for top 5 SCARF scenarios
4. Improved stakeholder satisfaction scores from baseline

Phase 4: Continuous Improvement (Months 7-12)

Key Activities:

1. Implement regular governance framework reviews.
2. Build organizational capability in human-AI collaboration management.
3. Share learnings and best practices across the enterprise.

Deliverables:

1. Quarterly governance review process and reports
2. Comprehensive capability development program
3. Best practices repository and knowledge sharing platform
4. Industry case studies and thought leadership content

Success Metrics:

1. Quarterly review process established and operating
2. 80% of staff trained in human-AI collaboration principles
3. Best practices documented, shared, and adopted
4. Industry recognition and external case study development

Implementation Success Factors

Critical Dependencies:

1. Executive sponsorship and governance team commitment
2. Access to pilot AI implementations and stakeholder groups
3. Resources for training development and delivery
4. Integration with existing governance processes

Risk Mitigation Strategies:

- Start with willing pilot groups to build momentum
- Integrate SCARF principles into existing governance meetings
- Create quick wins to demonstrate value
- Establish clear communication channels for feedback

Resource Requirements:

- Governance Team Time: 20-30% allocation for the first 6 months
- Training Resources: Internal facilitators or external SCARF expertise
- Technology: Dashboard/monitoring tools for SCARF metrics
- Stakeholder Time: 2-4 hours per month for assessments and feedback

Case Study: SCARF-Informed AI Governance in Action

The Challenge: A large financial services firm was implementing an AI system to support investment advisory teams. Initial pilots showed strong technical performance, but adoption remained inconsistent across different teams.

The SCARF Analysis

| SCARF Factor | Observation |
| --- | --- |
| Status | Senior advisors felt threatened by AI that could analyze market data faster than they could. |
| Certainty | Teams were unclear about when to trust AI recommendations versus their own expertise. |
| Autonomy | The system initially provided limited capabilities for overriding AI suggestions. |
| Relatedness | Individual advisors were working with AI in isolation rather than as collaborative teams. |
| Fairness | Some teams received more advanced AI capabilities than others due to a phased rollout. |

| Governance Response | Description |
| --- | --- |
| Status Enhancement | Repositioned AI as “market intelligence amplifier” rather than “investment advisor” |
| Certainty Building | Created detailed transparency documentation showing how AI reached conclusions |
| Autonomy Preservation | Implemented robust override capabilities and human-in-the-loop processes |
| Relatedness Fostering | Established AI collaboration communities of practice across teams |
| Fairness Assurance | Developed an equitable rollout process with clear criteria for capability distribution |

Results

After implementing SCARF-informed governance protocols, AI adoption rates increased by 70%, and stakeholder satisfaction scores improved significantly across all measured dimensions.

The Future of Human-Centered AI Governance

As AI systems become more sophisticated and integrated into organizational workflows, the importance of addressing psychological dimensions will only grow. Organizations that proactively develop SCARF-informed governance frameworks will be better positioned to achieve the following.

| Goal | Approach |
| --- | --- |
| Maximize AI ROI | Higher adoption rates, more effective human-AI collaboration |
| Reduce Implementation Risk | Anticipating and addressing stakeholder resistance |
| Build Organizational Resilience | Better management of AI lifecycle transitions |
| Enhance Ethical AI Use | Ensuring AI implementations respect human psychological needs |

The integration of neuroscience insights into AI governance represents a critical evolution in how we think about digital transformation. By understanding and addressing the fundamental human needs captured in the SCARF model, governance professionals can create AI implementations that not only succeed technically but also support human flourishing in increasingly AI-augmented workplaces.

Conclusion

The future of AI governance lies not in choosing between human and artificial intelligence, but in orchestrating their collaboration in ways that honor both technical capabilities and human psychology. The SCARF model provides a practical, science-based framework for achieving this balance. As governance professionals, our role is expanding beyond traditional risk management and compliance oversight. We must become architects of human-AI collaboration, designing systems and processes that enable both humans and AI to contribute their unique strengths while addressing the fundamental psychological needs that drive human behavior.

The organizations that master this balance—implementing robust AI lifecycle management protocols while conducting thorough stakeholder impact assessments—will not only achieve better technical outcomes but will also create more resilient, adaptive, and ultimately more human workplaces.

The question is no longer whether AI will transform our organizations, but whether we will transform our governance approaches to support humans through that transformation.

About the Author

Dr. Sarah Dyson is an Assistant Professor of Project Management at Harrisburg University of Science and Technology, where she integrates her expertise in social psychology with project management to advance leadership and organizational practices. Holding a Ph.D. in Psychology with a specialization in Social Psychology from Walden University and an M.S. in Business Management and Information Management from Colorado Technical University, Dr. Dyson brings over a decade of experience as an entrepreneur and CEO in the mental health field to her academic and consulting roles.

Her research explores the interplay of artificial intelligence, media, emotions, and motivation, focusing on their impact on project managers and organizational behavior. Notable publications include Operational Endings, Emotional Impacts: Ethical Considerations When Project Teams Form Attachments to AI Collaborators (2025) and Why Do Relationships Matter to Project Managers? (2023), published in Beyond the Project Horizon: Journal of the Center for Project Management Innovation, where she serves as Editor-in-Chief. Dr. Dyson’s work also addresses gender dynamics, as seen in her 2021 literature review on women’s roles in the home and her presentation at the Women’s Economic Forum of Ecuador.

As a research consultant, Dr. Dyson collaborates with public and private sectors to enhance leadership and team dynamics. She serves as an editor for The Arkansas Psychological Journal and is an academic reviewer for the Society of Personality and Social Psychology and the Journal of Social, Behavioral, and Health Sciences. A member of the National Coalition of Independent Scholars and an associate member of the Society of Personality and Social Psychology, Dr. Dyson is dedicated to fostering interdisciplinary approaches and promoting positive social change through her scholarship and mentorship.

Resources

Bobburi, N. G., & Li, L. (2024). Why AI ethics matters in project management. Projectified Podcast. Project Management Institute. https://www.pmi.org/projectified-podcast/podcasts/why-ai-ethics-matters-in-project-management

NeuroLeadership Institute. (2024). Change in weeks, not years: Making organizations more human and high-performing through science. https://neuroleadership.com/

Project Management Institute. (2025). AI essentials for project professionals. https://www.pmi.org/standards/ai-essentials-for-project-professionals

Project Management Institute. (2025). Artificial intelligence in project management. https://www.pmi.org/learning/ai-in-project-management

Rock, D. (2009). Your brain at work: Strategies for overcoming distraction, regaining focus, and working smarter all day long. HarperBusiness.

Rock, D., & Cox, C. (2012). SCARF in 2012: Updating the social neuroscience of collaborating with others. NeuroLeadership Journal, 4(4), 1-16.