By Rekha Kodali

As large language models transform enterprise operations, organizations face unprecedented challenges in ensuring these powerful systems operate safely, ethically, and in alignment with business objectives. This comprehensive guide addresses the critical intersection of model governance, operational excellence, and protective guardrails that define enterprise-ready AI systems.

The stakes have never been higher. A single poorly governed AI deployment can result in regulatory violations, brand damage, and operational disruption that reverberates across entire organizations. Yet the competitive advantages of well-implemented LLM systems are equally profound, offering transformative capabilities in automation, decision support, and customer experience.

Effective governance includes establishing effective model governance, LLM operations, implementing guardrails.

The Foundation: Understanding Model Governance

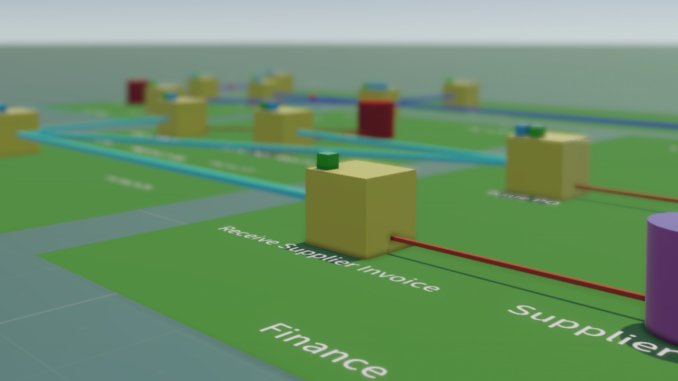

Model governance encompasses the policies, processes, and organizational structures that ensure AI systems operate within defined parameters while delivering consistent business value. Unlike traditional software governance, AI model governance must address unique challenges including model drift, bias amplification, and the inherent unpredictability of generative systems.

Effective governance begins with establishing clear ownership structures. Organizations must define roles for model owners, data stewards, and risk managers, creating accountability frameworks that span the entire model lifecycle. This includes not only initial deployment decisions but ongoing monitoring, maintenance, and eventual retirement of AI systems.

The governance framework must also address model versioning, change management, and audit trails. Enterprise leaders need visibility into which models are running where, how they’re performing, and what changes have been made over time. This operational transparency becomes crucial during regulatory reviews or when investigating unexpected model behavior.

LLM Operations: Scaling AI with Operational Excellence

Infrastructure Planning

Establish robust computational infrastructure capable of handling variable LLM workloads while maintaining cost efficiency. This includes selecting appropriate cloud resources, implementing auto-scaling mechanisms, and ensuring redundancy across critical systems.

Model Deployment Pipelines

Develop automated deployment pipelines that incorporate testing, validation, and rollback capabilities. These pipelines must handle model updates, configuration changes, and emergency interventions while maintaining service availability.

Performance Monitoring

Implement comprehensive monitoring systems that track both technical metrics (latency, throughput, error rates) and business metrics (accuracy, user satisfaction, cost per interaction). Real-time dashboards enable proactive intervention before issues impact users.

Incident Response

Establish clear escalation procedures and response protocols for AI-related incidents. This includes defining severity levels, notification chains, and recovery procedures specific to LLM systems and their unique failure modes.

Implementing Robust Guardrails

Input Validation: Implement comprehensive input sanitization and validation to prevent prompt injection attacks and malicious content submission.

Output Filtering: Deploy real-time content filtering systems that detect and block inappropriate, biased, or harmful outputs before they reach end users.

Rate Limiting: Establish usage quotas and rate limiting mechanisms to prevent system abuse and manage computational resource consumption.

Operational Guardrails

Human Oversight: Maintain human-in-the-loop processes for high-risk decisions and establish clear escalation paths when AI confidence levels drop below acceptable thresholds.

Access Controls: Implement role-based access controls that limit model interactions based on user permissions and organizational hierarchy.

Audit Logging: Comprehensive logging of all model interactions, decisions, and outcomes to support regulatory compliance and forensic analysis.

Conclusion: Success in enterprise AI governance requires commitment from the highest levels of leadership, cross-functional collaboration, and a culture that values both innovation and responsible deployment. Organizations that invest in robust governance frameworks today will be positioned to leverage AI’s transformative potential while maintaining the trust of customers, regulators, and stakeholders. The path forward demands technical excellence, operational discipline, and unwavering commitment to ethical AI practices.