By Sanjoy Ghosh, AI & Digital Engineering Leader

Rise of AI Agents

The AI journey in enterprises has moved from scripted bots to copilots, and now to adaptive agents: machines that not only assist but act autonomously. We stand at the threshold of a new era, Agentic AI, in which systems reason, respond, and adapt across workflows without human prompts. The latest vanguard in this evolution? Lean Agents: purpose-built, lightweight agents designed to do one job exceptionally well, without the overhead or carbon footprint of their bulkier predecessors.

In a time when sustainability matters as much as speed, Lean Agents optimize for three critical dimensions: cost, complexity, and carbon. They deliver intelligent outcomes by keeping execution tight and transparent, ensuring that automation drives results, not hidden inefficiency.

Organizations are tired of gold-plated mega-systems that promise everything and deliver chaos. Enter frameworks like AutoGen and LangGraph, alongside protocols such as MCP, all of which enable Lean Agents to be spun up on demand, plug into APIs, execute a defined task, and then quietly retire. This is a radical departure from heavyweight models that stay online indefinitely, consuming compute cycles, budget, and attention.

At the core lies a central thesis: Lean Agents are the next frontier in agentic scale, offering on-demand intelligence with minimal overhead. They deploy rapidly, respond swiftly, and recycle cleanly, which suits fast-paced enterprise functions that demand just-in-time intelligence. Open frameworks and cloud-native triggers now make this model not just feasible, but imperative for any organization serious about cost-efficiency and operational agility.

What Are Lean Agents?

Lean Agents are purpose-built AI workers: minimal in design, maximally efficient in function. Think of them as stateless or scoped-memory micro-agents: they wake when triggered, perform a discrete task, such as summarizing an RFP clause or flagging anomalies in payments, and then gracefully exit, freeing resources and eliminating runtime drag.

Lean Agents are to AI what Lambda functions are to code: ephemeral, single-purpose, and cloud-native. They may hold just enough context to operate reliably but otherwise avoid persistent state that bloats memory and complicates governance.

By contrast, monolithic assistants (agents that juggle dozens of tasks under one roof) may look versatile, but they suffer from unpredictability, high costs, and maintenance nightmares. Lean Agents, instead, offer surgical precision. If you need a clause comparator or ticket triage, you invoke a purpose-built agent for that task alone: no cross-task interference, no runaway compute. They are discrete, explainable, and scalable.

A useful analogy: Lean Agents are like headless micro-bots for knowledge work, spinning up dynamically, executing specific logic, and then vanishing, leaving behind only logs and results. Put another way, they are the serverless Lambdas of agentic workflows, built to trigger, run, and exit with surgical clarity.
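The trigger, run, and exit pattern described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `run_lean_agent`, `AgentResult`, and `flag_payment_anomaly` are hypothetical names, and the fixed threshold stands in for whatever model or tool call a real agent would make.

```python
from dataclasses import dataclass
from typing import Callable, Tuple
import time
import uuid


@dataclass(frozen=True)
class AgentResult:
    run_id: str
    output: str
    duration_s: float
    log: Tuple[str, ...]


def run_lean_agent(task: Callable[[str], str], payload: str) -> AgentResult:
    """Wake on a trigger, run one discrete task, return results and logs, then exit."""
    run_id = uuid.uuid4().hex
    log = [f"{run_id}: triggered"]
    started = time.perf_counter()
    output = task(payload)  # the single purpose-built step
    log.append(f"{run_id}: completed")
    # Nothing is stored outside the returned value, so no state outlives the run.
    return AgentResult(run_id, output, time.perf_counter() - started, tuple(log))


def flag_payment_anomaly(payload: str) -> str:
    """Hypothetical stand-in for a model call: flags amounts above a fixed threshold."""
    return "anomaly" if float(payload) > 10_000 else "ok"


result = run_lean_agent(flag_payment_anomaly, "12500.00")
print(result.output)  # anomaly
```

Only the result and its log survive the call, which mirrors the "leaving behind only logs and results" behaviour in the analogy.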

Few systems balance autonomy, lightweight design, and sustainability; Lean Agents manage it by design. By stripping down to essentials, they reduce operational friction, make real-time auditing straightforward, and align with today’s demands for responsible AI: lower costs, lower carbon emissions, and lower complexity without sacrificing capability.

From a technology standpoint, frameworks such as AutoGen and LangGraph, combined with the emerging Model Context Protocol (MCP), give engineering teams the scaffolding to create discoverable, policy-aware agent meshes. Lean Agents transform AI from a monolithic “brain in the cloud” into an elastic workforce that can be budgeted, secured, and reasoned about like any other microservice.

Defining Lean Agents

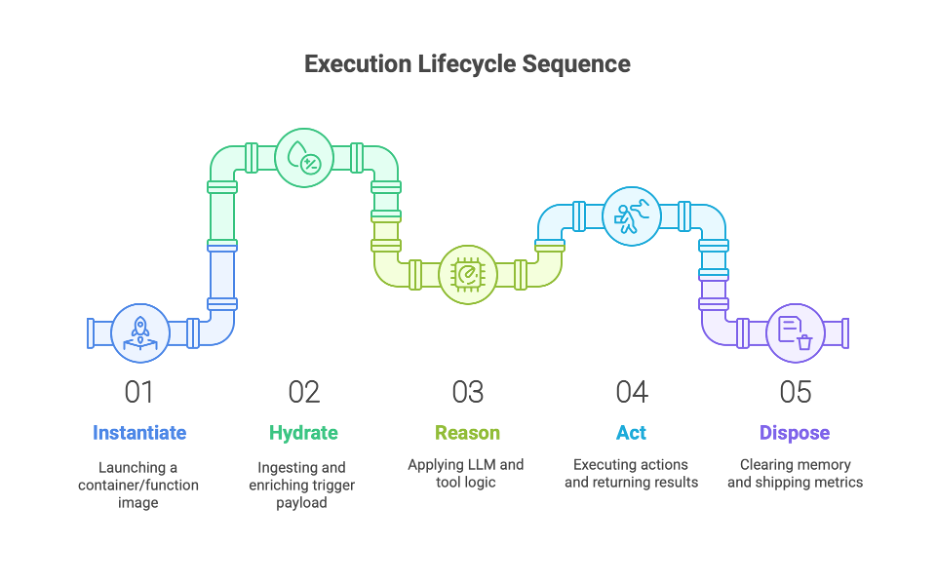

A Technical View

Lean Agents can be understood by dissecting their five internal strata and their execution lifecycle:

| Layer | Responsibility | Engineering Considerations |
| --- | --- | --- |
| Invocation Surface | Receives an external trigger (HTTP, gRPC, message bus). | Idempotent request schema; built-in retry semantics. |
| Policy Gate | Validates scope, identity, and rate limits before code is executed. | MCP compliance check, ABAC rules. |
| Context Hydration | Pulls just enough information to ground the prompt. | Tiered fetch order: scratch cache → vector store → transactional DB. |
| Reasoning Engine | Runs LLM calls, tool usage, and deterministic functions. | Pluggable model adapters; isolated function-calling sandbox. |
| Emission Layer | Persists results, posts follow-up events, emits logs. | Immutable log stream; atomic write guarantees for side effects. |
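To make the five layers concrete, the sketch below chains them inside a single request handler. It is an illustration under stated assumptions rather than a prescribed design: `Invocation`, `LeanAgentPipeline`, and `fake_reasoner` are hypothetical names, the in-memory `results` dict and `log` list stand in for an external idempotency store and an immutable log stream, and the policy gate checks only scope.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Invocation:
    request_id: str  # idempotency key chosen by the caller
    scope: str       # what the caller is asking permission to do
    payload: str


@dataclass
class LeanAgentPipeline:
    allowed_scopes: frozenset
    hydrate: Callable[[str], str]      # payload -> context
    reason: Callable[[str, str], str]  # (payload, context) -> output
    results: dict = field(default_factory=dict)  # stand-in for an external store
    log: list = field(default_factory=list)      # stand-in for an append-only log

    def handle(self, inv: Invocation) -> str:
        # 1. Invocation surface: a retried request_id returns the stored result.
        if inv.request_id in self.results:
            return self.results[inv.request_id]
        # 2. Policy gate: reject before any agent logic runs.
        if inv.scope not in self.allowed_scopes:
            self.log.append((inv.request_id, "denied"))
            raise PermissionError(f"scope {inv.scope!r} is not permitted")
        # 3. Context hydration: fetch only what grounds this request.
        context = self.hydrate(inv.payload)
        # 4. Reasoning engine: model or tool call, injected as a plain function.
        output = self.reason(inv.payload, context)
        # 5. Emission layer: persist the result and record the event.
        self.results[inv.request_id] = output
        self.log.append((inv.request_id, "completed"))
        return output


calls = []


def fake_reasoner(payload: str, context: str) -> str:
    """Hypothetical stand-in for an LLM call; records how often it runs."""
    calls.append(payload)
    return f"summary of {payload} using {context}"


pipeline = LeanAgentPipeline(
    allowed_scopes=frozenset({"rfp:summarize"}),
    hydrate=lambda payload: f"context for {payload}",
    reason=fake_reasoner,
)
first = pipeline.handle(Invocation("req-1", "rfp:summarize", "clause 7"))
retry = pipeline.handle(Invocation("req-1", "rfp:summarize", "clause 7"))
print(first == retry, len(calls))  # True 1
```

The retry returns the stored result without a second reasoning call, which is the behaviour an idempotent request schema is meant to give.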

Execution Lifecycle

Why Enterprises Care

Elastic Economics: Traditional AI services keep GPUs warm 24 × 7. Lean Agents idle until invoked, aligning cost strictly to usage. Finance teams can forecast more precisely because spend now tracks actual task volume instead of peak provisioning.
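The arithmetic behind this claim can be sketched as follows. The function names and every number in the usage lines are hypothetical, chosen only to show the calculation; the sketch also assumes the same hourly rate for both modes and ignores cold-start overhead, which a real comparison would need to include.

```python
def always_on_cost(hourly_rate: float, hours: float) -> float:
    """Cost of keeping capacity provisioned for the whole period."""
    return hourly_rate * hours


def on_demand_cost(invocations: int, seconds_per_invocation: float,
                   hourly_rate: float) -> float:
    """Cost when compute is billed only while an agent is running."""
    return invocations * seconds_per_invocation / 3600 * hourly_rate


def break_even_invocations(hours: float, seconds_per_invocation: float) -> float:
    """Invocation count at which on-demand spend equals always-on spend."""
    return hours * 3600 / seconds_per_invocation


# Hypothetical inputs: 2.00 per hour, a 720-hour month, 3-second tasks.
print(always_on_cost(2.0, 720))                    # 1440.0
print(round(on_demand_cost(50_000, 3.0, 2.0), 2))  # 83.33
print(break_even_invocations(720, 3.0))            # 864000.0
```

Under these assumptions, on-demand execution stays cheaper until volume approaches the break-even count, after which always-on capacity may be the better choice.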

Provable Auditability: Every invocation produces a cryptographically signed trace (input, context lookup, model call, output) anchored to the organization’s policy ledger. This immutable lineage simplifies regulatory reporting and internal forensics.
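One way to picture a signed trace is a chain of HMAC signatures, where each step's signature also covers the previous one, so altering any step invalidates everything after it. The sketch below uses Python's standard `hmac` and `hashlib` modules; the step fields, the demo key, and the function names are assumptions for illustration. A production design might prefer asymmetric signatures, and anchoring to a policy ledger is out of scope here.

```python
import hashlib
import hmac
import json


def sign_step(key: bytes, prev_sig: str, step: dict) -> str:
    """Sign one trace step, chaining in the previous step's signature."""
    body = json.dumps(step, sort_keys=True).encode()
    return hmac.new(key, prev_sig.encode() + body, hashlib.sha256).hexdigest()


def build_trace(key: bytes, steps: list) -> list:
    trace, prev = [], ""
    for step in steps:
        prev = sign_step(key, prev, step)
        trace.append({"step": step, "sig": prev})
    return trace


def verify_trace(key: bytes, trace: list) -> bool:
    prev = ""
    for entry in trace:
        expected = sign_step(key, prev, entry["step"])
        if not hmac.compare_digest(expected, entry["sig"]):
            return False
        prev = expected
    return True


key = b"demo-key-not-for-production"  # hypothetical; use a managed secret in practice
trace = build_trace(key, [
    {"stage": "input", "value": "summarize clause 7"},
    {"stage": "context_lookup", "source": "cache"},
    {"stage": "model_call", "model": "example-model"},
    {"stage": "output", "value": "Clause 7 limits liability."},
])
print(verify_trace(key, trace))  # True

# Copy the trace and alter one step: verification now fails.
tampered = [dict(entry, step=dict(entry["step"])) for entry in trace]
tampered[1]["step"]["source"] = "unknown"
print(verify_trace(key, tampered))  # False
```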

Proximity & Latency: Because agents are containerized micro-tasks, they can be deployed closer to the data source (edge nodes). Millisecond-scale round trips enable real-time actuation in manufacturing lines or trading systems.

Blast-Radius Containment: A malfunctioning Lean Agent affects only its single task instance. The failure domain is one container, not an entire conversational assistant, enabling safer experimentation and faster rollback.

Sustainable Footprint: Short-lived execution translates into measurable reductions in energy consumption and carbon emissions, a growing board-level KPI.

Governance Alignment: The MCP registry turns agents into first-class, version-controlled artefacts. Risk, security, and FinOps teams gain the same visibility they have for microservices, closing the governance gap that plagues ad-hoc prompt automations.

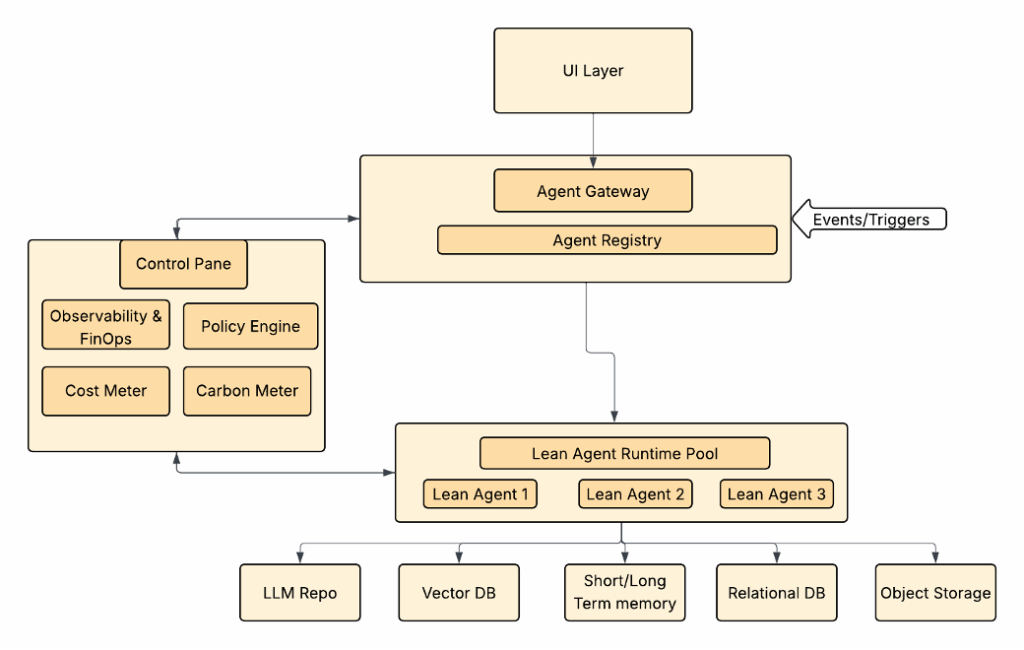

Logical Architecture

Key Architectural Components

| Layer | Responsibilities |
| --- | --- |
| Interaction Layer | REST / GraphQL / gRPC endpoints, chat UI, webhook listeners |
| Agent Gateway | Authentication, authorisation, request shaping, rate-limiting |
| MCP Control Plane | Agent registry, lifecycle metadata, policy evaluation, cost telemetry |
| Trigger Queue & Event Mesh | Asynchronous invocation |
| Runtime Pool | Auto-scaling containers / functions that host agent execution |
| Model-Serving Substrate | High-performance inference for LLMs and embeddings |
| Context Layer | Durable knowledge bases, embeddings, transactional data |
| Observability & FinOps | Distributed tracing, metrics, cost attribution |

GPU & Compute Sizing (Illustrative)

| Scenario | Hardware Class | Engineering Rationale |
| --- | --- | --- |
| Real-time inference | High-end GPU | Maximises throughput with minimal batching |
| Edge / Branch deployment | Low-power GPU or CPU | Fits constrained power envelopes while keeping latency local |
| Development / CI | Single workstation-grade GPU | Provides economical local testing; no multi-GPU overhead |
| Distributed fine-tuning | Multi-node GPU cluster | Enables parameter-efficient tuning across large datasets |

Risks, Challenges & Mitigations

| Challenge | Technical Detail | Potential Mitigation Strategy |
| --- | --- | --- |
| Agent Sprawl | Hundreds of untracked agent images accumulate, exhausting registry storage. | Enforce TTL and ownership labels; automate garbage collection via Control-Plane cron jobs. |
| Context Vacuum | Stateless design may starve the LLM of historical signals, degrading answer quality. | Employ hierarchical context retrieval: (1) hot in-memory cache, (2) short-range vector search, (3) cold relational/graph query. Fall back gracefully if tiers are empty. |
| GPU Over-Subscription | Simultaneous bursts saturate GPU memory, causing OOM errors and tail-latency spikes. | Implement queue-based admission control; enable MIG/partitioning; reserve headroom via resource quotas; autoscale on GPU-utilization metrics. |
| Prompt Injection & Jailbreaks | Malicious inputs force the agent to leak data or execute unauthorized calls. | Insert prompt-firewall middleware with pattern matching and policy-based response filtering; maintain RLHF-trained refusal models; log red-team traffic for regression testing. |
| Hidden Cost Shock | Silent model-parameter growth or prompt bloat multiplies token usage unnoticed. | Token-level metering per invocation; budget alerts; dynamic prompt compression; apply parameter-efficient tuning instead of full re-training. |
| Data-Residency Conflict | Embeddings stored in a cross-border vector store violate jurisdiction rules. | Partition vector indices by region; encrypt at rest with regional KMS; enforce data-gravity checks in the Control Plane before hydration. |
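The hierarchical context retrieval suggested for the Context Vacuum risk can be sketched as an ordered list of lookups that stops at the first hit and degrades to a default when every tier is empty or unavailable. All names here (`hydrate_context`, the three tier functions, the sample records) are hypothetical placeholders for a real cache, vector store, and database client.

```python
from typing import Callable, Optional, Sequence, Tuple

Tier = Tuple[str, Callable[[str], Optional[str]]]


def hydrate_context(query: str, tiers: Sequence[Tier],
                    default: str = "") -> Tuple[str, str]:
    """Try each tier in order and return (tier_name, context) for the first hit."""
    for name, lookup in tiers:
        try:
            hit = lookup(query)
        except Exception:
            # A failing tier is skipped so one outage does not stop the agent.
            continue
        if hit:
            return name, hit
    return "none", default


hot_cache = {"invoice-42": "Paid in full."}  # tier 1: in-memory cache


def vector_search(query: str) -> Optional[str]:
    """Tier 2 stand-in that simulates an unavailable vector store."""
    raise TimeoutError("vector store unavailable")


def cold_query(query: str) -> Optional[str]:
    """Tier 3 stand-in for a relational or graph lookup."""
    return f"Record for {query} from the relational store."


tiers = [("cache", hot_cache.get), ("vector", vector_search), ("db", cold_query)]
print(hydrate_context("invoice-42", tiers))  # ('cache', 'Paid in full.')
print(hydrate_context("invoice-99", tiers))
print(hydrate_context("invoice-99", tiers[:2], default="No history available."))
```

Returning the tier name alongside the context makes it easy to log which source grounded each answer, which helps when diagnosing degraded answer quality.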

Governance & Compliance Framework

| Control Domain | Objective | Potential Implementation Patterns |
| --- | --- | --- |
| Identity & Access | Ensure only authorised entities invoke or modify agents. | Short-lived OAuth/JWT tokens; agent-to-agent mTLS; Just-In-Time privilege grants. |
| Policy Enforcement | Align agent behaviour with legal, ethical, and business rules. | Declarative policies in OPA evaluated at Gateway and Control Plane; deny-by-default posture. |
| Traceability & Lineage | Provide full replay of inputs, reasoning, and outputs. | Immutable append-only logs. |
| Data Governance | Prevent misuse of sensitive or regulated data. | Dynamic classification tags propagated through the Context Layer; automatic mask filters before LLM calls. |
| Model Safety & Monitoring | Detect drift, toxicity, or degraded accuracy. | Shadow-mode canary agents; statistical quality gates; continuous evaluation pipelines with golden datasets. |
| Cost & Capacity Management | Track and optimise resource utilisation. | GPU-seconds and token-usage meters funnelled to FinOps dashboards; predictive autoscaling based on historical patterns. |
| Lifecycle & Retirement | Guarantee decommissioning of stale agents. | Versioned manifests with expiry dates; automated dependency graph checks; controlled archival to cold storage. |
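As a small illustration of the Lifecycle & Retirement row, the sketch below models a versioned manifest with an owner label and an expiry date, then partitions a registry into agents to keep and agents to retire. `AgentManifest`, `sweep`, and the sample entries are hypothetical; a real control plane would also run the dependency checks and archival steps listed in the table.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class AgentManifest:
    name: str
    version: str
    owner: str            # ownership label; an empty string means unowned
    expires_at: datetime  # expiry date carried in the manifest


def sweep(manifests: Iterable[AgentManifest],
          now: datetime) -> Tuple[List[AgentManifest], List[AgentManifest]]:
    """Split manifests into (keep, retire): unowned or expired agents are retired."""
    keep, retire = [], []
    for manifest in manifests:
        if not manifest.owner or manifest.expires_at <= now:
            retire.append(manifest)
        else:
            keep.append(manifest)
    return keep, retire


now = datetime(2025, 1, 1, tzinfo=timezone.utc)  # fixed date for a repeatable example
registry = [
    AgentManifest("clause-comparator", "1.2.0", "legal-ops", now + timedelta(days=30)),
    AgentManifest("ticket-triage", "0.9.1", "", now + timedelta(days=30)),          # no owner
    AgentManifest("payment-anomaly", "2.0.0", "finance", now - timedelta(days=1)),  # expired
]
keep, retire = sweep(registry, now)
print([m.name for m in keep])    # ['clause-comparator']
print([m.name for m in retire])  # ['ticket-triage', 'payment-anomaly']
```

Run on a schedule, a sweep of this kind also addresses the agent sprawl risk, since untracked or stale images are surfaced before they accumulate.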

Conclusion

Architecting for Lean Autonomy

Lean Agents provide a pathway to highly granular, cost-controlled, and carbon-aware intelligence. By treating every agent as a disposable microservice with policy-bound superpowers, engineering teams unlock safe experimentation without sacrificing governance.

Every enterprise has two choices: engineer a disciplined Lean Agent mesh now or battle an ungoverned swarm later. The blueprint is here: assemble a build crew and start shipping.